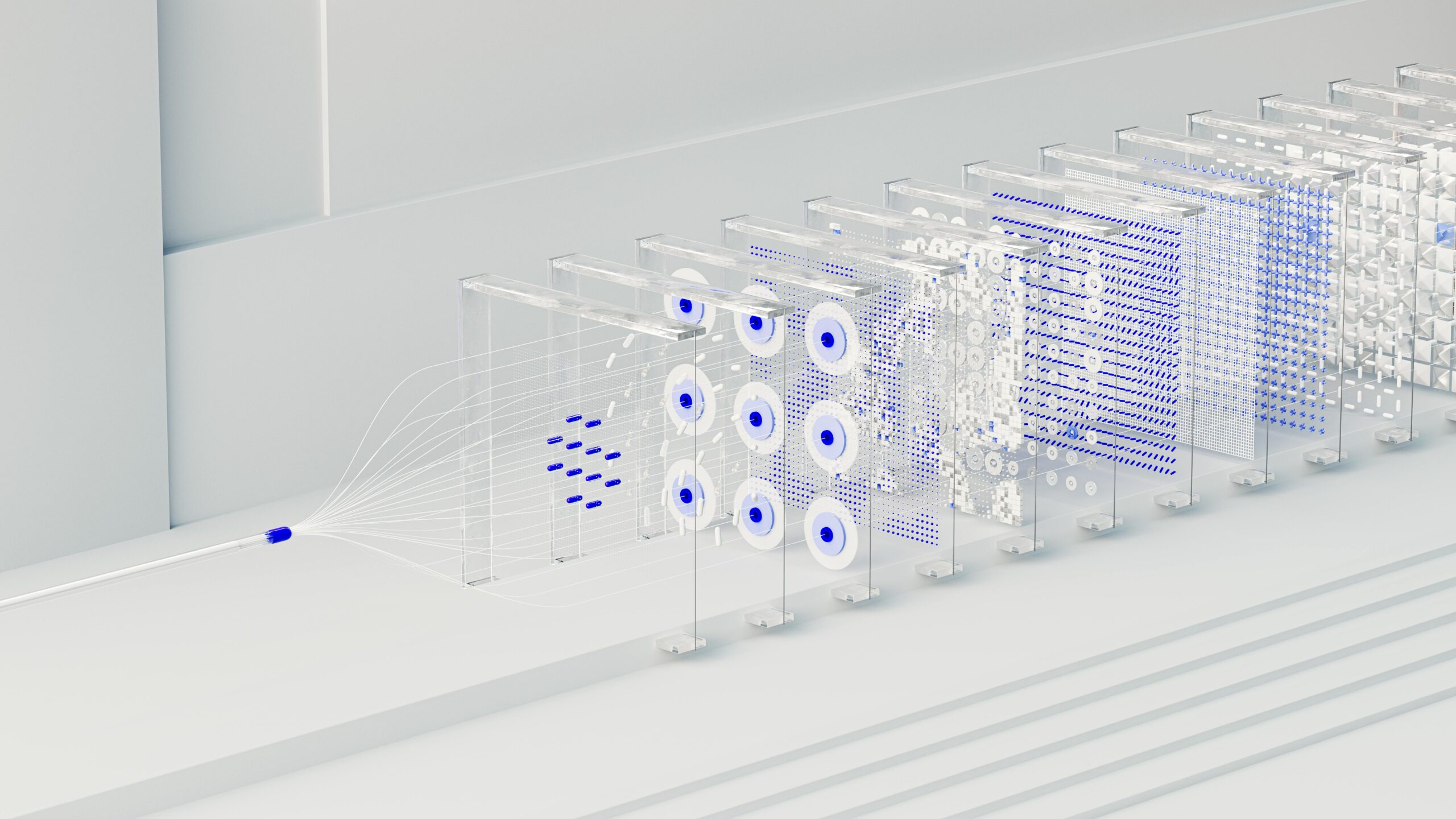

Combining semi-supervised learning with sparse-label indexing lets modern machine learning systems organize and categorize vast amounts of unlabeled data using only a small set of labeled examples.

🚀 The Dawn of Intelligent Data Processing

In today’s data-driven landscape, organizations face an overwhelming challenge: mountains of unlabeled data that hold tremendous potential value, yet require enormous resources to manually annotate. Traditional supervised learning approaches demand extensive labeled datasets, a requirement that often becomes a bottleneck in developing robust machine learning models. This is where semi-supervised learning emerges as a game-changing solution, particularly when combined with sparse-label indexing techniques.

The concept of sparse-label indexing addresses a fundamental problem in information retrieval and machine learning: how to efficiently organize and access data when only a small fraction of examples have been labeled. By leveraging semi-supervised learning frameworks, we can unlock the hidden patterns within unlabeled data, creating powerful indexing systems that learn from both labeled and unlabeled examples simultaneously.

Understanding the Foundation: What Makes Semi-Supervised Learning Special

Semi-supervised learning occupies a unique space between supervised and unsupervised learning paradigms. While supervised learning relies entirely on labeled data and unsupervised learning works exclusively with unlabeled data, semi-supervised approaches intelligently combine both types. This hybrid methodology is particularly valuable when labeling data is expensive, time-consuming, or requires specialized expertise.

The underlying assumption of semi-supervised learning is that the structure of unlabeled data contains valuable information about the underlying distribution. When that assumption holds, algorithms that exploit this structure can make better predictions than they would using labeled data alone. This principle becomes especially powerful when applied to sparse-label scenarios, where labels are scarce but unlabeled data is abundant.

The Mathematics Behind the Magic ✨

At its core, semi-supervised learning leverages several key assumptions. The smoothness assumption suggests that points close to each other in the feature space are likely to share the same label. The cluster assumption proposes that data naturally forms discrete clusters, with points in the same cluster sharing labels. The manifold assumption posits that high-dimensional data lies on a lower-dimensional manifold, and this structure can guide learning.

These mathematical principles enable semi-supervised algorithms to propagate label information from labeled to unlabeled examples, effectively expanding the training signal across the entire dataset. When applied to indexing, this creates a rich semantic structure that goes beyond simple keyword matching.
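
One common way to make the smoothness assumption concrete is a graph-regularized objective. The formulation below is a sketch and not the only option. The symbols are assumptions introduced here for illustration: $f_i$ is the predicted score vector for item $i$, $y_i$ is the one-hot label of a labeled item, $\mathcal{L}$ is the set of labeled items, $w_{ij} \ge 0$ is the similarity between items $i$ and $j$, and $\lambda > 0$ trades off the two terms.

$$
\min_{f} \; \sum_{i \in \mathcal{L}} \lVert f_i - y_i \rVert^2 \;+\; \lambda \sum_{i,j} w_{ij} \, \lVert f_i - f_j \rVert^2
$$

The first term keeps predictions close to the known labels. The second term penalizes similar items (large $w_{ij}$) that receive different predictions, which is what pushes label information from labeled to unlabeled examples.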

Sparse-Label Indexing: Efficiency Meets Intelligence

Traditional indexing systems rely heavily on comprehensive labeling or manual curation. Search engines, recommendation systems, and content management platforms all require some form of structured organization. However, maintaining fully labeled indexes at scale becomes prohibitively expensive and often impossible as data volumes grow exponentially.

Sparse-label indexing acknowledges this reality by designing systems that function effectively with minimal labeled examples. Instead of requiring every document, image, or data point to be manually classified, these systems use strategic labeling of representative examples combined with intelligent propagation techniques to organize the entire corpus.

Key Components of Sparse-Label Indexing Systems

- Selective Labeling Strategies: Identifying which data points, when labeled, provide maximum information gain for the entire dataset

- Similarity Metrics: Sophisticated measures that determine relationships between labeled and unlabeled items

- Propagation Algorithms: Methods for spreading label information across the data manifold

- Confidence Scoring: Mechanisms to assess the reliability of inferred labels

- Dynamic Updating: Capabilities to refine the index as new data arrives or additional labels become available
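
To show how a similarity metric and a confidence score might fit together, here is a minimal sketch in plain Python. It assumes items are already represented as numeric vectors, uses cosine similarity, and treats the winning label's share of a similarity-weighted vote as its confidence. The function names `cosine` and `infer_label` are hypothetical and chosen only for this illustration.

```python
import math
from collections import defaultdict

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def infer_label(query, labeled, k=3):
    """Return (label, confidence) from the k most similar labeled items.

    `labeled` is a list of (vector, label) pairs. Confidence is the winning
    label's share of the similarity-weighted vote, so it lies in [0, 1].
    """
    scored = sorted(
        ((cosine(query, vec), lab) for vec, lab in labeled),
        key=lambda pair: pair[0],
        reverse=True,
    )[:k]
    votes = defaultdict(float)
    for sim, lab in scored:
        votes[lab] += max(sim, 0.0)  # negative similarity contributes nothing
    total = sum(votes.values())
    if total == 0:
        return None, 0.0  # no usable neighbors, so no label is inferred
    best = max(votes, key=votes.get)
    return best, votes[best] / total

# Toy example: two "cat" seeds and one "dog" seed.
seeds = [([1.0, 0.0], "cat"), ([0.9, 0.1], "cat"), ([0.0, 1.0], "dog")]
label, confidence = infer_label([1.0, 0.05], seeds)
```

An index built this way could store the confidence next to each inferred label, so low-confidence entries can be routed to human review.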

Practical Applications Transforming Industries 🎯

The combination of semi-supervised learning and sparse-label indexing has found remarkable applications across diverse domains. In content moderation, platforms process billions of user-generated items daily, making comprehensive manual review impossible. By labeling a strategic subset and using semi-supervised techniques, these systems can identify problematic content at scale while continuously improving through feedback loops.

E-commerce platforms utilize these approaches to organize vast product catalogs. With millions of items, manually categorizing every product becomes impractical. Semi-supervised sparse-label indexing allows retailers to maintain structured catalogs by labeling representative products and inferring categories for the remainder based on descriptions, images, and metadata.

Medical Imaging: A Life-Saving Application

Healthcare represents one of the most impactful domains for this technology. Medical imaging datasets require expert radiologists to provide labels, making fully supervised approaches extremely costly. Semi-supervised learning with sparse labels enables diagnostic systems to learn from limited expert annotations while leveraging thousands of unlabeled scans, potentially identifying patterns that assist in early disease detection.

These systems don’t replace medical professionals but augment their capabilities, flagging suspicious cases for review and reducing the time spent on routine screenings. These efficiency gains can contribute to better patient outcomes through faster diagnosis and treatment initiation.

Technical Approaches: From Theory to Implementation

Several algorithmic families have proven particularly effective for semi-supervised sparse-label indexing. Graph-based methods construct similarity graphs where nodes represent data points and edges reflect relationships. Label information then propagates through these graphs, with labeled nodes acting as sources that influence their neighbors. These approaches naturally capture the manifold structure of data. They scale to large datasets when the graph is kept sparse, for example by connecting each point only to its nearest neighbors, because a fully connected graph grows quadratically with the number of points.
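
The graph-based idea described above can be sketched in a few lines of plain Python. This is an illustrative toy, not a production implementation. It assumes a k-nearest-neighbor graph with Gaussian edge weights and a simple iterative update that averages neighbor scores while keeping the labeled nodes fixed. The helper names `knn_graph` and `propagate` are hypothetical.

```python
import math

def knn_graph(points, k=2):
    """Symmetric k-nearest-neighbor graph with Gaussian edge weights."""
    n = len(points)
    weights = [dict() for _ in range(n)]
    for i in range(n):
        dists = sorted((math.dist(points[i], points[j]), j) for j in range(n) if j != i)
        for d, j in dists[:k]:
            w = math.exp(-d * d)
            weights[i][j] = w
            weights[j][i] = w  # keep the graph undirected
    return weights

def propagate(weights, seeds, classes, iters=50):
    """Repeatedly average neighbor scores; seed (labeled) nodes stay clamped."""
    n = len(weights)
    scores = [{c: 0.0 for c in classes} for _ in range(n)]
    for i, c in seeds.items():
        scores[i][c] = 1.0
    for _ in range(iters):
        new = []
        for i in range(n):
            total = sum(weights[i].values())
            if i in seeds or total == 0:
                new.append(scores[i])  # labeled or isolated node: unchanged
                continue
            new.append({
                c: sum(w * scores[j][c] for j, w in weights[i].items()) / total
                for c in classes
            })
        scores = new
    return scores

# Two well-separated groups with one labeled point each.
points = [(0, 0), (0.1, 0), (0.2, 0), (5, 5), (5.1, 5), (5.2, 5)]
seeds = {0: "a", 3: "b"}
scores = propagate(knn_graph(points), seeds, ["a", "b"])
labels = [max(s, key=s.get) for s in scores]
```

Restricting each node to `k` neighbors keeps the graph sparse, which is the main lever for scaling this family of methods.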

Self-training methods represent another powerful approach. These algorithms use an initial model trained on labeled data to predict labels for unlabeled examples. High-confidence predictions are then added to the training set, and the model retrains, iteratively expanding its knowledge. When combined with active learning strategies that select the most informative examples for labeling, self-training becomes remarkably efficient.
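
As a rough illustration of the self-training loop, the sketch below uses a deliberately simple base model: a nearest-centroid classifier whose confidence comes from a softmax over negative distances. Both the classifier and the `threshold` of 0.8 are assumptions made for this example. Any model that outputs a confidence could take its place.

```python
import math

def centroids(examples):
    """Mean vector per label from a list of (vector, label) pairs."""
    sums, counts = {}, {}
    for vec, lab in examples:
        acc = sums.setdefault(lab, [0.0] * len(vec))
        for d, x in enumerate(vec):
            acc[d] += x
        counts[lab] = counts.get(lab, 0) + 1
    return {lab: [x / counts[lab] for x in acc] for lab, acc in sums.items()}

def predict(cents, vec):
    """Return (label, confidence) via a softmax over negative distances."""
    dists = {lab: math.dist(vec, c) for lab, c in cents.items()}
    nearest = min(dists.values())
    # Subtracting the smallest distance keeps the exponentials well scaled.
    exps = {lab: math.exp(nearest - d) for lab, d in dists.items()}
    best = max(exps, key=exps.get)
    return best, exps[best] / sum(exps.values())

def self_train(labeled, unlabeled, threshold=0.8, max_rounds=10):
    """Grow the training set with high-confidence pseudo-labels."""
    train, pool = list(labeled), list(unlabeled)
    for _ in range(max_rounds):
        cents = centroids(train)
        confident, rest = [], []
        for vec in pool:
            lab, conf = predict(cents, vec)
            (confident if conf >= threshold else rest).append((vec, lab))
        if not confident:
            break  # nothing passes the threshold, so stop early
        train.extend(confident)
        pool = [vec for vec, _ in rest]
    return centroids(train), pool

labeled = [((0.0, 0.0), "a"), ((4.0, 4.0), "b")]
unlabeled = [(0.5, 0.2), (3.6, 4.1), (2.0, 2.0)]
model, still_unlabeled = self_train(labeled, unlabeled)
```

Items that never clear the threshold remain in the returned pool. In an active learning setup, those leftovers are natural candidates to send to a human annotator.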

Deep Learning and Neural Architecture

Modern deep learning architectures have revolutionized semi-supervised learning capabilities. Techniques like pseudo-labeling, consistency regularization, and mixup augmentation enable neural networks to extract sophisticated representations from unlabeled data. Contrastive learning approaches, which learn by distinguishing similar and dissimilar examples, have shown particular promise for sparse-label scenarios.
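
To make pseudo-labeling and consistency regularization more concrete, here is one well-known way the two are combined, in the style of the FixMatch method. This article does not prescribe it, so read it as an example rather than the definitive formulation. The notation is an assumption introduced here: $\mathcal{U}$ is a batch of unlabeled examples, $\alpha$ and $A$ are weak and strong augmentations, $q(u) = p_\theta(\cdot \mid \alpha(u))$ is the model's prediction on the weakly augmented input, $\hat{y}(u) = \arg\max_c q_c(u)$ is the pseudo-label, $H$ is cross-entropy, $\tau$ is a confidence threshold, and $\lambda_u$ weights the unlabeled term.

$$
\mathcal{L} \;=\; \mathcal{L}_{\text{sup}} \;+\; \lambda_u \cdot \frac{1}{|\mathcal{U}|} \sum_{u \in \mathcal{U}} \mathbb{1}\!\left[\max_c q_c(u) \ge \tau\right] \, H\!\left(\hat{y}(u),\; p_\theta(\cdot \mid A(u))\right)
$$

The indicator keeps only confident pseudo-labels. The cross-entropy term asks the model to give the same answer on a heavily perturbed version of the same input, which is the consistency part.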

Transformer architectures, initially designed for natural language processing, have also proven effective for semi-supervised tasks across modalities. Their attention mechanisms capture long-range dependencies and relationships, which makes them well suited to learning representations that carry sparse label information across complex data structures.

Overcoming Challenges and Pitfalls ⚠️

Despite their promise, semi-supervised sparse-label systems face several challenges. Confirmation bias represents a significant risk: if initial labels or model predictions are biased, these biases can amplify as they propagate through unlabeled data. Careful validation strategies and diversity-aware sampling help mitigate this issue.

Distribution mismatch between labeled and unlabeled data can severely degrade performance. If the labeled examples don’t adequately represent the full data distribution, the model may fail to generalize properly. Stratified sampling reduces this risk by drawing labeled examples from across the variations present in the full dataset, while domain adaptation techniques adjust the model to account for the differences that remain.

Scalability Considerations

As datasets grow to billions of examples, computational efficiency becomes paramount. Approximate nearest neighbor algorithms, dimensionality reduction techniques, and hierarchical indexing structures enable semi-supervised systems to scale. Mini-batch processing and distributed computing frameworks allow these algorithms to process massive datasets without requiring prohibitive computational resources.
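
One family of approximate nearest neighbor techniques is locality-sensitive hashing. The sketch below uses random hyperplanes, so vectors pointing in similar directions tend to land in the same bucket. It is a toy under stated assumptions: a single hash table, an arbitrary `n_bits` of 8, and hypothetical helper names. Real systems typically use several tables or a dedicated library.

```python
import random

def make_hasher(dim, n_bits=8, seed=0):
    """Return a function mapping a vector to an n_bits signature tuple."""
    rng = random.Random(seed)
    planes = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(n_bits)]

    def signature(vec):
        # One bit per hyperplane: which side of the plane the vector falls on.
        return tuple(
            int(sum(p * x for p, x in zip(plane, vec)) >= 0) for plane in planes
        )

    return signature

def build_index(vectors, signature):
    """Bucket vector ids by signature so a lookup scans one bucket only."""
    buckets = {}
    for idx, vec in enumerate(vectors):
        buckets.setdefault(signature(vec), []).append(idx)
    return buckets

def candidates(query, buckets, signature):
    """Ids sharing the query's bucket: an approximate neighbor set."""
    return buckets.get(signature(query), [])

signature = make_hasher(dim=3)
vectors = [[1.0, 2.0, 3.0], [-1.0, -2.0, -3.0]]
index = build_index(vectors, signature)
hits = candidates([2.0, 4.0, 6.0], index, signature)
```

The trade-off is recall for speed: a true neighbor can fall into a different bucket and be missed, which is why production setups usually query several independent hash tables.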

| Challenge | Impact | Solution Approach |
|---|---|---|
| Confirmation Bias | Error amplification | Ensemble methods, uncertainty quantification |
| Distribution Mismatch | Poor generalization | Stratified sampling, domain adaptation |
| Computational Cost | Limited scalability | Approximate algorithms, distributed processing |
| Label Noise | Degraded accuracy | Robust loss functions, outlier detection |

Best Practices for Implementation Success 💡

Successfully deploying semi-supervised sparse-label indexing systems requires careful attention to several factors. Begin with high-quality initial labels on strategically selected examples. The quality of these seed labels disproportionately impacts system performance, as they serve as the foundation from which all other inferences derive.

Implement robust validation frameworks that go beyond simple accuracy metrics. Monitor confidence distributions, analyze predictions on edge cases, and conduct regular audits to identify systematic errors. Cross-validation with held-out labeled data provides crucial feedback on model generalization.
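
Monitoring confidence distributions can start with something as small as a reliability table: group held-out predictions by confidence and check whether accuracy rises with it. The sketch below assumes each prediction arrives as a `(confidence, predicted_label, true_label)` tuple. The function name and the choice of five bins are illustrative.

```python
def reliability_table(predictions, n_bins=5):
    """Group held-out predictions by confidence and report per-bin accuracy.

    `predictions` is a list of (confidence, predicted_label, true_label).
    Returns (bin_low, bin_high, count, accuracy) for each non-empty bin.
    """
    bins = [[] for _ in range(n_bins)]
    for conf, pred, true in predictions:
        idx = min(int(conf * n_bins), n_bins - 1)  # conf == 1.0 goes in the top bin
        bins[idx].append(pred == true)
    table = []
    for i, hits in enumerate(bins):
        if hits:
            table.append((i / n_bins, (i + 1) / n_bins, len(hits), sum(hits) / len(hits)))
    return table

held_out = [
    (0.95, "a", "a"),
    (0.90, "b", "b"),
    (0.85, "a", "b"),
    (0.55, "a", "b"),
    (0.50, "b", "b"),
]
for low, high, count, accuracy in reliability_table(held_out):
    print(f"confidence {low:.1f}-{high:.1f}: n={count}, accuracy={accuracy:.2f}")
```

If a high-confidence bin shows low accuracy, that is a sign the confidence scores used for label propagation should not be trusted as they stand.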

Iterative Refinement Strategies

Deploy these systems iteratively rather than attempting perfection from the start. Begin with a modest scope, validate performance, and gradually expand coverage. Active learning loops that identify high-value examples for human labeling create a virtuous cycle of continuous improvement. Human-in-the-loop workflows ensure that domain expertise guides system development while maintaining efficiency.
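
An active learning loop needs a rule for deciding which examples are high-value. One simple and widely used heuristic is margin sampling, sketched below: send annotators the items where the model's top two classes are closest. The input format, a mapping from item id to class probabilities, and the function names are assumptions for this example.

```python
def margin(probs):
    """Gap between the two most likely classes; a small gap means uncertain."""
    top = sorted(probs.values(), reverse=True)
    return top[0] - (top[1] if len(top) > 1 else 0.0)

def select_for_labeling(pool_probs, budget):
    """Pick the `budget` item ids whose predictions have the smallest margin.

    `pool_probs` maps item id -> {label: probability} from the current model.
    """
    ranked = sorted(pool_probs, key=lambda item: margin(pool_probs[item]))
    return ranked[:budget]

pool = {
    "doc1": {"a": 0.90, "b": 0.10},
    "doc2": {"a": 0.55, "b": 0.45},
    "doc3": {"a": 0.60, "b": 0.40},
}
to_label = select_for_labeling(pool, budget=2)
```

After annotators label the selected items, the model is retrained and the selection repeats, which is the cycle of continuous improvement described above.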

Documentation and explainability features build trust and facilitate debugging. When the system makes surprising predictions, stakeholders need to understand the reasoning. Attention visualizations, nearest neighbor examples, and confidence scores help humans verify that the system is learning meaningful patterns rather than spurious correlations.

The Future Landscape: What Lies Ahead 🔮

The field of semi-supervised learning for sparse-label indexing continues to evolve rapidly. Few-shot learning techniques that enable models to learn from just a handful of examples represent an exciting frontier. Meta-learning approaches that learn how to learn efficiently promise to reduce the data requirements even further.

Multimodal learning, which combines information from different data types like text, images, and audio, opens new possibilities for sparse-label scenarios. Labels in one modality can inform understanding in another, effectively increasing the amount of supervision available to the system.

Ethical Considerations and Responsible AI

As these systems become more powerful and widely deployed, ethical considerations grow increasingly important. Fairness across demographic groups, transparency in decision-making, and accountability for errors demand careful attention. Semi-supervised systems must be designed with fairness constraints that prevent discriminatory patterns from emerging as labels propagate.

Privacy-preserving techniques like federated learning enable semi-supervised training on distributed, sensitive data without centralizing information. Differential privacy mechanisms protect individual data points while still allowing aggregate pattern learning. These approaches will become essential as regulations around data usage continue to tighten.

Maximizing Value: Strategic Implementation Roadmap

Organizations looking to harness semi-supervised learning for sparse-label indexing should begin by identifying high-value use cases where labeling is expensive but unlabeled data is abundant. Content classification, product categorization, and document retrieval represent prime candidates. Assess current labeling costs and project potential savings from reduced manual effort.

Build or acquire the necessary infrastructure, including computing resources for training and inference, data storage for large unlabeled corpora, and annotation tools for efficient labeling of strategic examples. Consider cloud-based solutions that offer scalability without large upfront investments.

Building Cross-Functional Teams

Success requires collaboration between data scientists who understand the algorithms, domain experts who provide quality labels and validation, and engineers who build robust production systems. Foster communication channels that enable rapid iteration and feedback. Establish clear metrics that align technical performance with business objectives.

Invest in training and upskilling to build internal expertise. While external consultants can accelerate initial deployment, long-term success depends on internal teams who understand both the technology and its application within your specific context. Create knowledge-sharing mechanisms to disseminate learnings across the organization.

Transforming Possibilities into Reality 🌟

Semi-supervised learning for efficient sparse-label indexing represents more than a technical advancement—it’s a fundamental shift in how we approach the challenge of organizing and extracting value from data. By acknowledging that comprehensive labeling is neither feasible nor necessary, we open pathways to solutions that were previously out of reach.

The unlimited potential referenced in our title isn’t hyperbole. When systems can learn effectively from limited supervision, they break free from the constraints that have historically limited machine learning deployments. Organizations can tackle previously intractable problems, process ever-growing data volumes, and deliver intelligent experiences at scale.

The journey from traditional fully supervised systems to semi-supervised sparse-label approaches requires investment in new techniques, infrastructure, and ways of thinking. However, the returns—in efficiency, scalability, and capabilities—far exceed the costs. As algorithms continue improving and best practices crystallize, barriers to adoption will continue falling.

Whether you’re building search engines, recommendation systems, content moderation platforms, or scientific analysis tools, semi-supervised sparse-label indexing offers a path forward. The question isn’t whether to adopt these approaches, but how quickly you can integrate them into your systems. Those who move decisively will gain significant competitive advantages through superior data organization, faster time-to-value, and reduced operational costs.

The convergence of abundant unlabeled data, sophisticated semi-supervised algorithms, and strategic sparse labeling creates unprecedented opportunities. By understanding the principles, mastering the techniques, and implementing thoughtfully, you can unlock the unlimited potential that lies hidden within your data, transforming it from an overwhelming challenge into your greatest strategic asset.

Toni Santos is a bioacoustic researcher and conservation technologist specializing in the study of animal communication systems, acoustic monitoring infrastructures, and the sonic landscapes embedded in natural ecosystems. Through an interdisciplinary and sensor-focused lens, Toni investigates how wildlife encodes behavior, territory, and survival into the acoustic world, across species, habitats, and conservation challenges.

His work is grounded in a fascination with animals not only as lifeforms, but as carriers of acoustic meaning. From endangered vocalizations to soundscape ecology and bioacoustic signal patterns, Toni uncovers the technological and analytical tools through which researchers preserve their understanding of the acoustic unknown. With a background in applied bioacoustics and conservation monitoring, Toni blends signal analysis with field-based research to reveal how sounds are used to track presence, monitor populations, and decode ecological knowledge.

As the creative mind behind Nuvtrox, Toni curates indexed communication datasets, sensor-based monitoring studies, and acoustic interpretations that revive the deep ecological ties between fauna, soundscapes, and conservation science. His work is a tribute to:

- The archived vocal diversity of Animal Communication Indexing

- The tracked movements of Applied Bioacoustics Tracking

- The ecological richness of Conservation Soundscapes

- The layered detection networks of Sensor-based Monitoring

Whether you're a bioacoustic analyst, conservation researcher, or curious explorer of acoustic ecology, Toni invites you to explore the hidden signals of wildlife communication: one call, one sensor, one soundscape at a time.