In data-driven operations, indexing accuracy is a requirement rather than an aspiration, and benchmarking annotator performance is how teams check that they are meeting it.

🎯 Why Indexing Accuracy Matters in Modern Data Operations

Data annotation and indexing form the backbone of machine learning projects, content management systems, and information retrieval platforms. When multiple annotators work on the same dataset, consistency and accuracy become critical factors that directly impact the quality of your final product. Without proper benchmarking mechanisms, organizations risk investing resources into datasets that fail to deliver reliable results.

The challenge intensifies when dealing with large-scale projects involving dozens or even hundreds of annotators. Each individual brings their own interpretation, biases, and understanding to the task. This variability can introduce noise into your data, compromising model performance and decision-making processes downstream.

Organizations that implement robust benchmarking for indexing accuracy are better placed to deliver reliable data quality, run annotation efficiently, and get a return on their annotation spend. On large projects, the gap between a well-calibrated annotation team and an unmonitored one can add up to substantial value over time.

Understanding the Fundamentals of Annotation Benchmarking

Benchmarking annotator performance begins with establishing clear baselines and success metrics. Before any comparative analysis can occur, teams must define what “accurate indexing” means within their specific context. This definition varies significantly across industries and use cases.

For a medical imaging project, accuracy might mean correctly identifying anatomical structures with 99% precision. For a content classification system, it could involve applying the right taxonomic labels with minimal disagreement among annotators. The context determines the standards, and those standards must be explicitly documented and communicated.

Once baseline metrics are established, organizations need systematic approaches to measure performance. This involves creating gold standard datasets—expertly annotated samples that serve as reference points for evaluating annotator output. These reference datasets become the yardstick against which all other work is measured.
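Here is a minimal Python sketch of scoring each annotator against a reference set. It assumes annotations arrive as `(annotator_id, item_id, label)` tuples and that the gold standard is a plain dict mapping item IDs to expert labels. The function name `accuracy_vs_gold` and the sample data are hypothetical.

```python
from collections import defaultdict


def accuracy_vs_gold(annotations, gold):
    """Per-annotator accuracy on items that have a gold-standard label.

    annotations: list of (annotator_id, item_id, label) tuples.
    gold: dict mapping item_id -> reference label from expert annotators.
    Items that are not in the gold set are skipped, not counted as errors.
    """
    correct = defaultdict(int)
    scored = defaultdict(int)
    for annotator, item, label in annotations:
        if item not in gold:
            continue  # only benchmark items count toward the score
        scored[annotator] += 1
        if label == gold[item]:
            correct[annotator] += 1
    return {annotator: correct[annotator] / scored[annotator] for annotator in scored}


# Hypothetical example data.
gold = {"doc1": "finance", "doc2": "sports", "doc3": "finance"}
annotations = [
    ("ann_a", "doc1", "finance"), ("ann_a", "doc2", "sports"), ("ann_a", "doc3", "sports"),
    ("ann_b", "doc1", "finance"), ("ann_b", "doc2", "sports"), ("ann_b", "doc9", "health"),
]
scores = accuracy_vs_gold(annotations, gold)
```

Items outside the gold set are skipped rather than counted as errors. Each annotator's score therefore reflects only the benchmark items they actually labeled: 2 of 3 for `ann_a` and 2 of 2 for `ann_b` here.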

Key Performance Indicators for Indexing Accuracy

Effective benchmarking relies on selecting the right KPIs. The most common metrics include inter-annotator agreement scores, precision and recall rates, F1 scores, and consistency measures over time. Each metric provides different insights into annotator performance and data quality.

Inter-annotator agreement reveals how consistently different annotators classify the same items. It is typically measured with Cohen’s Kappa (for two annotators) or Fleiss’ Kappa (for more than two). High agreement suggests clear guidelines and well-trained annotators. Low agreement points to ambiguous instructions or inadequate training.
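Fleiss’ Kappa can be computed directly from a table of rating counts. The minimal Python sketch below rests on two assumptions. First, every item was rated by the same number of annotators. Second, the ratings have already been tallied into an items-by-categories count table. The function name and example table are illustrative only.

```python
def fleiss_kappa(counts):
    """Fleiss' kappa from an items x categories table of rating counts.

    counts[i][j] is how many annotators put item i in category j. Every item
    must have been rated by the same number of annotators (at least two).
    """
    n_items = len(counts)
    n_raters = sum(counts[0])
    if n_raters < 2 or any(sum(row) != n_raters for row in counts):
        raise ValueError("each item needs the same number (>= 2) of ratings")

    # Observed agreement: per item, the share of annotator pairs that agree.
    p_bar = sum(
        (sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1))
        for row in counts
    ) / n_items

    # Chance agreement: sum of squared overall category proportions.
    total = n_items * n_raters
    p_e = sum((sum(col) / total) ** 2 for col in zip(*counts))

    if p_e == 1:
        raise ValueError("kappa is undefined when only one category is used")
    return (p_bar - p_e) / (1 - p_e)


# Hypothetical data: 3 annotators, 4 items, 2 categories.
table = [[3, 0], [0, 3], [2, 1], [3, 0]]
kappa = fleiss_kappa(table)
```

For this table the function returns 0.625. Observed agreement is high, but the skew toward the first category also raises the agreement expected by chance.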

Precision is the proportion of items marked positive that truly are positive. Recall is the proportion of truly positive items that were marked positive. Together they capture both correctness and completeness in indexing tasks. The F1 score, the harmonic mean of the two, summarizes them in a single number.
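The following sketch computes precision, recall, and F1 for a single label against a gold standard. It assumes the annotator's labels and the gold labels are both dicts keyed by item ID, with one label value (here the hypothetical `"relevant"`) treated as the positive class. It is an illustration, not a replacement for a tested metrics library.

```python
def precision_recall_f1(predicted, gold, positive="relevant"):
    """Precision, recall and F1 for one label, scored against a gold standard.

    predicted, gold: dicts mapping item_id -> label. Only items present in
    both are compared.
    """
    shared = predicted.keys() & gold.keys()
    tp = sum(1 for i in shared if predicted[i] == positive and gold[i] == positive)
    fp = sum(1 for i in shared if predicted[i] == positive and gold[i] != positive)
    fn = sum(1 for i in shared if predicted[i] != positive and gold[i] == positive)

    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Hypothetical gold labels and one annotator's labels.
gold = {1: "relevant", 2: "relevant", 3: "irrelevant", 4: "relevant", 5: "irrelevant"}
predicted = {1: "relevant", 2: "irrelevant", 3: "relevant", 4: "relevant", 5: "irrelevant"}
p, r, f = precision_recall_f1(predicted, gold)
```

In the example, the annotator finds two of the three truly relevant items, and two of their three positive calls are correct. All three scores therefore come out at 2/3.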

Building Your Annotation Quality Framework 📊

A comprehensive quality framework starts with clearly documented annotation guidelines. These guidelines should eliminate ambiguity, provide extensive examples, and address edge cases that annotators will inevitably encounter. The more detailed and scenario-based these guidelines are, the more consistent your results will be.

Training programs must go beyond simple instruction manuals. Effective training incorporates hands-on practice with immediate feedback, regular calibration sessions, and ongoing education as guidelines evolve. New annotators should complete qualification tests before working on production data, ensuring they meet minimum competency standards.

Quality assurance processes need to be woven into daily workflows rather than treated as afterthoughts. This means implementing sampling strategies where a percentage of each annotator’s work is reviewed regularly. Automated systems can flag anomalies and outliers, directing human reviewers to potential problem areas.
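A per-annotator review sample can be drawn with the standard library alone. In this sketch, the 10% rate, the floor of five items, and the function name `sample_for_review` are hypothetical placeholders. The floor ensures that low-volume annotators still get reviewed.

```python
import random
from collections import defaultdict


def sample_for_review(annotations, rate=0.1, minimum=5, seed=None):
    """Pick a random subset of each annotator's items for QA review.

    annotations: list of (annotator_id, item_id) pairs.
    rate: fraction of each annotator's work to review (placeholder value).
    minimum: floor so low-volume annotators are still reviewed.
    """
    rng = random.Random(seed)
    by_annotator = defaultdict(list)
    for annotator, item in annotations:
        by_annotator[annotator].append(item)

    sample = {}
    for annotator, items in by_annotator.items():
        # Never ask for more items than the annotator actually produced.
        k = min(len(items), max(minimum, round(rate * len(items))))
        sample[annotator] = rng.sample(items, k)
    return sample


# Hypothetical workload: one busy annotator, one with only three items.
annotations = [("ann_a", f"a{i}") for i in range(100)] + [("ann_b", f"b{i}") for i in range(3)]
sample = sample_for_review(annotations, rate=0.1, minimum=5, seed=42)
```

Passing a fixed `seed` makes the draw reproducible. This helps when reviewers later need to audit how a sample was chosen.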

Implementing Continuous Monitoring Systems

Real-time monitoring dashboards provide visibility into annotation quality as work progresses. These systems track individual and team performance metrics, highlighting trends and identifying annotators who may need additional support or training. Early detection of quality issues prevents problematic data from propagating through your systems.
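A monitoring system might flag annotators who need support by running a robust outlier test on their accuracy scores. This sketch substitutes the modified z-score: the deviation from the team median, scaled by the median absolute deviation (MAD). It uses the commonly cited cutoff of 3.5. The function name and threshold are assumptions, not features of any particular dashboard.

```python
import statistics


def flag_low_performers(accuracy_by_annotator, threshold=3.5):
    """Flag annotators whose accuracy is unusually low relative to the team.

    Uses the modified z-score 0.6745 * (x - median) / MAD. A single outlier
    cannot inflate the MAD the way it inflates a standard deviation.
    """
    values = list(accuracy_by_annotator.values())
    median = statistics.median(values)
    mad = statistics.median(abs(v - median) for v in values)
    if mad == 0:
        return []  # no spread around the median to measure against
    return [
        annotator
        for annotator, acc in accuracy_by_annotator.items()
        if 0.6745 * (acc - median) / mad < -threshold
    ]


# Hypothetical team accuracy scores.
team = {"a": 0.94, "b": 0.92, "c": 0.95, "d": 0.93, "e": 0.70}
flagged = flag_low_performers(team)
```

The median-based score replaces the mean and standard deviation because a single very weak annotator would otherwise inflate the spread and partly mask their own result. When most annotators share exactly the same score, the MAD is zero and this sketch flags no one. A real system would need a fallback for that case.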

Feedback loops are essential for continuous improvement. When quality issues are identified, annotators need specific, actionable feedback that helps them understand what went wrong and how to improve. Generic comments like “be more accurate” prove less effective than detailed explanations with concrete examples.

Periodic recalibration sessions bring annotators together to discuss challenging cases, clarify guidelines, and keep everyone’s understanding aligned. These sessions also provide opportunities for experienced annotators to mentor newer team members, fostering knowledge transfer and team cohesion.

Statistical Methods for Measuring Annotator Performance 📈

Statistical rigor separates effective benchmarking from guesswork. Beyond basic accuracy percentages, sophisticated statistical methods reveal deeper insights into annotator behavior and dataset quality. Understanding these methods empowers teams to make data-driven decisions about quality assurance processes.

Cohen’s Kappa coefficient measures agreement between two annotators while accounting for chance agreement. Values range from -1 to 1. A value of 1 means perfect agreement, 0 means agreement no better than chance, and values above 0.8 are generally read as strong agreement. However, Kappa can be influenced by label prevalence and by annotator bias, so it should be interpreted alongside other metrics.
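The Kappa formula is κ = (p_o − p_e) / (1 − p_e). Here p_o is observed agreement, and p_e is the agreement expected by chance given each annotator's own label frequencies. Below is a minimal Python sketch, assuming the two annotators' labels are given as equal-length lists in the same item order. The function name and example data are hypothetical.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items in the same order."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two equal-length, non-empty label lists")
    n = len(labels_a)

    # p_o: observed proportion of items on which the two annotators agree.
    p_o = sum(a == b for a, b in zip(labels_a, labels_b)) / n

    # p_e: chance agreement from each annotator's own label frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    p_e = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)

    if p_e == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_o - p_e) / (1 - p_e)


annotator_1 = ["yes", "yes", "no", "no", "yes", "no"]
annotator_2 = ["yes", "no", "no", "no", "yes", "no"]
kappa = cohens_kappa(annotator_1, annotator_2)
```

The two annotators agree on 5 of 6 items (p_o ≈ 0.83). With chance agreement at 0.5, however, Kappa is 2/3, noticeably lower than raw agreement.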

Krippendorff’s Alpha provides a more robust alternative that handles multiple annotators, different data types, and missing data. This metric is particularly valuable for large-scale projects with varied annotation teams and complex classification schemes.
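For nominal labels, Krippendorff’s Alpha can be computed from a coincidence matrix that pairs every two ratings given to the same item. The sketch below handles only the nominal case and treats `None` as a missing rating. The function name and example data are hypothetical. Interval or ordinal data would need a different distance function.

```python
from collections import defaultdict
from itertools import permutations


def krippendorff_alpha_nominal(units):
    """Krippendorff's alpha for nominal labels, tolerating missing ratings.

    units: list of lists; each inner list holds the labels different
    annotators gave one item, with None for a missing rating.
    """
    # Coincidence matrix: every ordered pair of ratings within a unit,
    # weighted by 1 / (m - 1) where m is that unit's number of ratings.
    coincidence = defaultdict(float)
    for unit in units:
        values = [v for v in unit if v is not None]
        m = len(values)
        if m < 2:
            continue  # a single rating forms no pairs
        for a, b in permutations(values, 2):
            coincidence[(a, b)] += 1 / (m - 1)

    totals = defaultdict(float)  # n_c: marginal total for each label
    for (a, _), weight in coincidence.items():
        totals[a] += weight
    n = sum(totals.values())

    observed = sum(w for (a, b), w in coincidence.items() if a != b)
    expected = sum(totals[a] * totals[b] for a in totals for b in totals if a != b)
    if expected == 0:
        raise ValueError("alpha is undefined when only one label value occurs")
    # alpha = 1 - D_o / D_e with D_o = observed / n, D_e = expected / (n * (n - 1)).
    return 1 - (n - 1) * observed / expected


# Hypothetical ratings from three annotators; None marks a skipped item.
units = [["a", "a", None], ["b", "b", "b"], ["a", "b", "a"]]
alpha = krippendorff_alpha_nominal(units)
```

In the example, the first item has a missing rating and the third has one dissenting rating. The result is 0.5625. Items with fewer than two ratings are dropped because they contribute no pairs.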

Calculating Inter-Rater Reliability

Inter-rater reliability assessment requires systematic sampling of multiply-annotated items. A common approach involves having each item annotated by at least two independent annotators, then calculating agreement metrics across the entire sample. The size and representativeness of this sample critically impact the reliability of your conclusions.

Confusion matrices offer valuable insights when working with classification tasks. These matrices reveal not just overall accuracy but specific patterns in annotator errors—which categories get confused with each other, whether certain annotators systematically misclassify particular items, and whether errors cluster around specific features or conditions.
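A confusion matrix for one annotator can be built by counting (gold label, annotator label) pairs. In this sketch, the helper names, label set, and example data are hypothetical. Rows are gold labels, and columns are the annotator's labels.

```python
from collections import Counter


def confusion_counts(annotator_labels, gold):
    """Sparse confusion matrix: Counter of (gold_label, annotator_label) pairs."""
    shared = gold.keys() & annotator_labels.keys()
    return Counter((gold[i], annotator_labels[i]) for i in shared)


def as_matrix(counts, labels):
    """Dense matrix with one row per gold label and one column per annotator label."""
    return [[counts[(g, a)] for a in labels] for g in labels]


def top_confusions(counts, top=3):
    """The most frequent off-diagonal cells, i.e. the commonest label swaps."""
    errors = [(pair, n) for pair, n in counts.items() if pair[0] != pair[1]]
    return sorted(errors, key=lambda e: e[1], reverse=True)[:top]


# Hypothetical labels from one annotator, scored against gold.
gold = {1: "bird", 2: "bird", 3: "frog", 4: "frog", 5: "insect", 6: "insect"}
annotator = {1: "bird", 2: "frog", 3: "frog", 4: "bird", 5: "frog", 6: "frog"}
counts = confusion_counts(annotator, gold)
matrix = as_matrix(counts, ["bird", "frog", "insect"])
```

The largest off-diagonal cell shows that this annotator labels every gold `insect` item as `frog`. Overall accuracy alone would not reveal that systematic pattern.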

Temporal analysis tracks how annotator performance changes over time. Learning curves help identify whether training programs are effective, how quickly new annotators reach proficiency, and whether performance degradation occurs due to fatigue or guideline drift. This longitudinal view informs decisions about workload management and refresher training schedules.
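Temporal analysis can start with a rolling accuracy curve over each annotator's gold-checked items. This sketch assumes the outcomes are already ordered oldest first. The window size, the tolerance, and both function names are hypothetical.

```python
from collections import deque


def rolling_accuracy(outcomes, window=50):
    """Rolling accuracy over an annotator's gold-checked items, oldest first.

    outcomes: iterable of booleans (True = matched the gold label).
    Returns one value per item once the window has filled.
    """
    recent = deque(maxlen=window)  # older outcomes fall off automatically
    curve = []
    for correct in outcomes:
        recent.append(bool(correct))
        if len(recent) == window:
            curve.append(sum(recent) / window)
    return curve


def drifted(curve, baseline, tolerance=0.05):
    """True if the latest rolling accuracy sits more than `tolerance` below baseline."""
    return bool(curve) and curve[-1] < baseline - tolerance


# Hypothetical history: a solid start followed by a run of misses.
history = [True] * 8 + [False] * 4
curve = rolling_accuracy(history, window=4)
```

Comparing the latest point against a baseline, such as the annotator's accuracy after onboarding, gives a simple drift signal. The example curve ends at 0.0 and is flagged.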

Technology Solutions for Streamlined Benchmarking

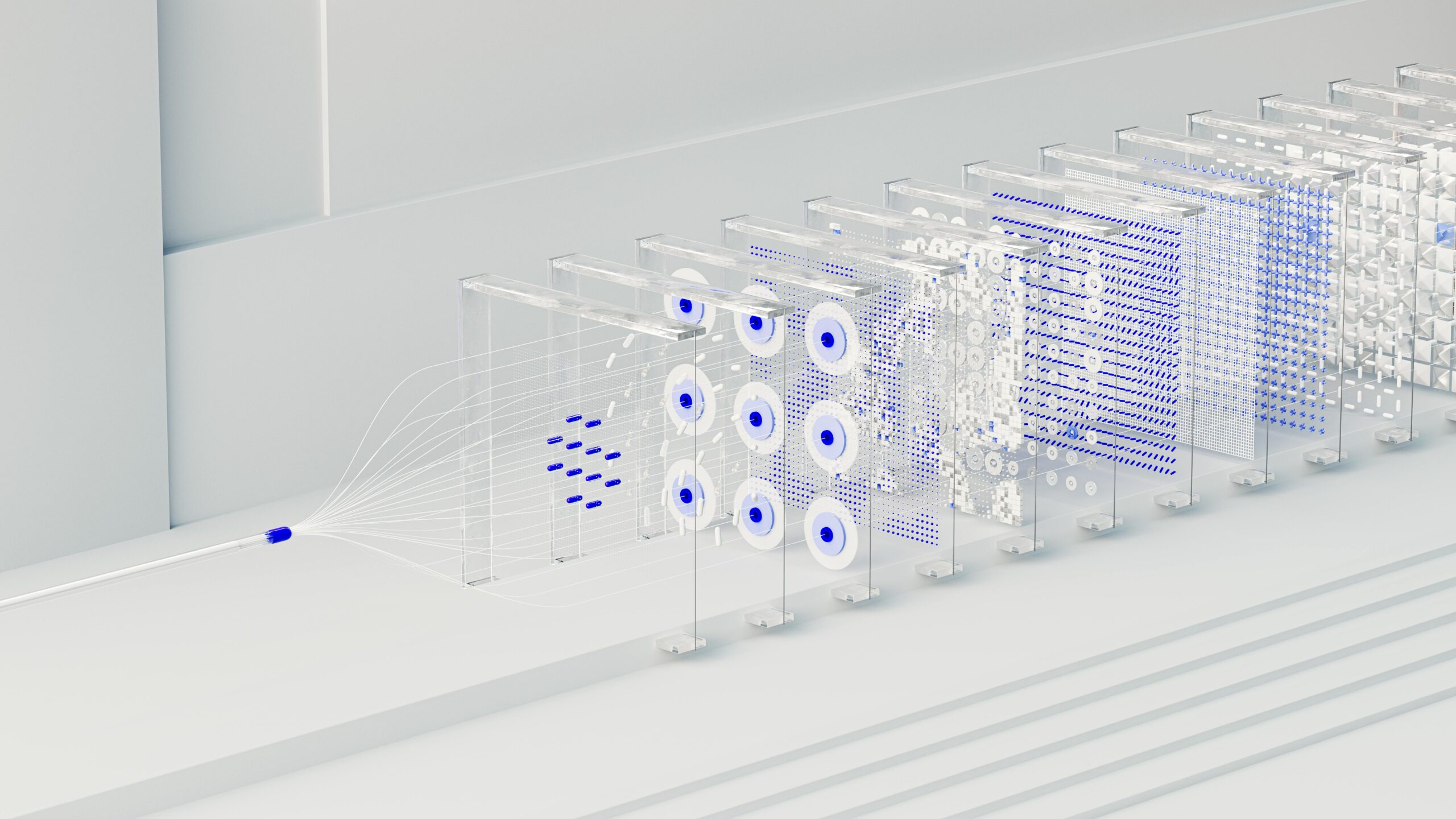

Many modern annotation platforms include built-in quality assurance features that automate benchmarking tasks. These tools can track annotator actions, calculate agreement metrics in real time, and generate performance reports without manual intervention. Automation reduces overhead while increasing the frequency and coverage of quality checks.

Machine learning can augment human benchmarking efforts by identifying patterns that humans might miss. Anomaly detection algorithms flag unusual annotation patterns, suggesting either genuine edge cases or potential quality issues. Active learning systems prioritize which items should be multiply-annotated for quality assessment, optimizing resource allocation.
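The sketch below illustrates one way an active learning loop might choose items for multiple annotation. It uses uncertainty sampling, a common strategy though not the only one: items are ranked by the entropy of a model's predicted class probabilities, and the most uncertain go to extra review. The model outputs, item IDs, and budget are hypothetical.

```python
import math


def entropy(probs):
    """Shannon entropy, in bits, of a probability distribution."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def pick_for_double_annotation(model_probs, budget):
    """Return the `budget` item IDs whose model predictions are most uncertain.

    model_probs: dict item_id -> list of class probabilities from a
    hypothetical pre-labeling model.
    """
    ranked = sorted(model_probs, key=lambda i: entropy(model_probs[i]), reverse=True)
    return ranked[:budget]


probs = {"x": [0.98, 0.02], "y": [0.5, 0.5], "z": [0.7, 0.3]}
chosen = pick_for_double_annotation(probs, budget=2)
```

Item `y`, where the model is split 50/50, ranks first. Item `x`, predicted with 98% confidence, is left to a single annotator.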

Integration with project management systems ensures quality metrics inform workflow decisions. When an annotator’s performance drops below a set threshold, automated systems can redirect their work to review queues, trigger training interventions, or adjust task assignments to match demonstrated competencies.
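The routing logic can be as simple as a threshold table. The sketch below is hypothetical throughout. The action names and the 0.85 and 0.92 cutoffs are placeholders that a team would set from its own benchmarks.

```python
def route_annotator(accuracy, review_below=0.85, retrain_below=0.92):
    """Map a benchmark accuracy to a hypothetical workflow action.

    Thresholds are illustrative placeholders, not recommended values.
    """
    if accuracy < review_below:
        return "send_to_review_queue"
    if accuracy < retrain_below:
        return "schedule_training"
    return "continue_production"


actions = [route_annotator(a) for a in (0.80, 0.90, 0.97)]
```

In practice, the accuracy fed into a rule like this should come from a large enough sample, or from a lower confidence bound. Otherwise, one unlucky batch could trigger an intervention.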

Selecting the Right Tools for Your Team

Tool selection depends on project scale, annotation complexity, and team structure. Small teams with straightforward classification tasks might thrive with lightweight solutions, while enterprise operations require comprehensive platforms with advanced analytics, role-based access controls, and extensive customization options.

Cloud-based platforms offer scalability and accessibility advantages, enabling distributed teams to collaborate seamlessly. However, sensitive data projects may require on-premise solutions that maintain tighter security controls. The trade-offs between flexibility, security, and functionality must align with organizational priorities and constraints.

Open-source annotation tools provide customization opportunities without licensing costs but require technical expertise to implement and maintain. Commercial platforms offer polished user experiences and vendor support but involve recurring costs. The total cost of ownership extends beyond licensing fees to include training, maintenance, and integration expenses.

Common Pitfalls in Annotation Benchmarking and How to Avoid Them ⚠️

Many organizations fall into the trap of measuring what’s easy rather than what’s important. Simply tracking annotation speed or volume tells you nothing about accuracy. Comprehensive benchmarking requires effort and resources, but cutting corners compromises data quality and undermines project success.

Insufficient sample sizes lead to unreliable conclusions about annotator performance. Reliable estimates require adequate data points: a handful of examples cannot predict future performance or reveal systematic issues. Quality assessment samples should be large enough to capture variability and detect patterns with confidence.
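A confidence interval makes the sample-size problem visible. This sketch uses the Wilson score interval for a proportion, with z = 1.96 for roughly 95% coverage. The function name is hypothetical.

```python
import math


def wilson_interval(correct, total, z=1.96):
    """Approximate 95% Wilson score interval for an accuracy of correct/total."""
    if total == 0:
        raise ValueError("no reviewed items")
    p = correct / total
    denom = 1 + z * z / total
    centre = (p + z * z / (2 * total)) / denom
    half = z * math.sqrt(p * (1 - p) / total + z * z / (4 * total * total)) / denom
    return centre - half, centre + half


small = wilson_interval(9, 10)
large = wilson_interval(900, 1000)
```

Both calls describe 90% accuracy. However, 9 correct out of 10 gives an interval of roughly 0.60 to 0.98, while 900 out of 1000 narrows it to roughly 0.88 to 0.92.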

Failing to account for task difficulty creates unfair comparisons between annotators. Someone assigned simple, unambiguous items will naturally achieve higher accuracy than someone tackling complex edge cases. Difficulty-adjusted metrics or stratified sampling approaches ensure fair evaluation across different task types.
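Stratified scoring is one way to adjust for difficulty. Accuracy is computed within each difficulty stratum, and the strata are then combined with fixed weights so every annotator is scored on the same mix. The strata, weights, and function name below are hypothetical.

```python
from collections import defaultdict


def stratified_accuracy(results, weights):
    """Accuracy averaged across difficulty strata with fixed weights.

    results: list of (stratum, correct) pairs for one annotator.
    weights: dict stratum -> weight (e.g. each stratum's share of the full
             dataset). Only strata the annotator actually saw are used,
             and their weights are renormalised to sum to one.
    """
    hits, seen = defaultdict(int), defaultdict(int)
    for stratum, correct in results:
        seen[stratum] += 1
        hits[stratum] += bool(correct)
    total_weight = sum(weights[s] for s in seen)
    return sum(weights[s] * hits[s] / seen[s] for s in seen) / total_weight


weights = {"easy": 0.5, "hard": 0.5}
# Annotator A: mostly easy items. Annotator B: mostly hard items.
a_results = [("easy", True)] * 9 + [("easy", False)] + [("hard", True), ("hard", False)]
b_results = [("easy", True)] * 2 + [("hard", True)] * 7 + [("hard", False)] * 3
score_a = stratified_accuracy(a_results, weights)
score_b = stratified_accuracy(b_results, weights)
```

Annotator A leads on raw accuracy (10 of 12 versus 9 of 12) only because most of their items were easy. With equal weights on the two strata, B scores 0.85 and A scores 0.70.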

Addressing Bias in Quality Assessment

Confirmation bias affects reviewers who unconsciously favor their own interpretations when assessing annotator work. Reviewers can also be swayed by knowing who produced an annotation. Blinded review processes, where reviewers don’t know which annotator produced which work, address this second problem. Rotating reviewers and using multiple independent reviews for critical assessments further reduce the impact of both.

Guideline ambiguity masquerades as annotator error when instructions leave room for interpretation. Before attributing mistakes to annotator incompetence, teams should verify that guidelines unambiguously address the situation. Many apparent quality issues actually reflect documentation gaps rather than human failings.

Overemphasis on agreement metrics can discourage thoughtful annotation. When annotators know their work will be compared to others, they may gravitate toward “safe” choices rather than carefully considering each item’s unique characteristics. Balancing agreement metrics with accuracy against gold standards helps maintain appropriate incentives.

Optimizing Annotator Training Through Benchmarking Insights 🎓

Benchmarking data reveals specific knowledge gaps and skill deficiencies that training programs should address. Rather than generic training covering all topics superficially, targeted interventions focus on areas where specific annotators or the entire team demonstrate weakness. This personalized approach accelerates skill development and improves resource efficiency.

Performance trends show when refresher training becomes necessary. Even experienced annotators are prone to guideline drift, gradually deviating from standards as memory fades or personal interpretations solidify. Scheduled recalibration catches this drift before it significantly impacts data quality.

Peer learning opportunities emerge from benchmarking analysis. High-performing annotators can share strategies and insights with struggling colleagues. Case study discussions around challenging examples help teams develop shared mental models and consistent interpretation frameworks.

Creating a Culture of Quality Excellence

Sustainable quality requires more than processes and metrics; it demands an organizational culture that values precision and continuous improvement. When annotators understand how their work contributes to larger objectives and see quality as a shared responsibility rather than a top-down mandate, intrinsic motivation drives better outcomes than external monitoring alone.

Transparency about quality expectations and performance feedback builds trust and engagement. Annotators appreciate knowing where they stand, understanding evaluation criteria, and receiving support to improve. Punitive approaches that emphasize blame over growth foster anxiety and disengagement, ultimately degrading quality.

Recognition systems that celebrate accuracy achievements reinforce desired behaviors. Whether through public acknowledgment, performance bonuses, or career advancement opportunities, rewarding quality excellence signals organizational priorities and motivates sustained effort toward precision.

Maximizing ROI Through Precision Performance 💰

The business case for rigorous annotation benchmarking extends far beyond abstract quality concerns. High-quality training data directly impacts model performance, reducing the iterations and data volumes required to achieve target accuracy levels. This acceleration shortens development cycles and brings products to market faster.

Reducing rework costs represents another significant financial benefit. When annotations require extensive correction or complete redoing, project timelines extend and budgets inflate. Preventing quality issues through proactive benchmarking costs far less than fixing problems after the fact.

Reputation and competitive advantage stem from consistently delivering reliable results. Organizations known for data quality attract better clients, command premium pricing, and build long-term partnerships. The cumulative effect of precision performance compounds over time, creating sustainable business advantages.

Future Trends Shaping Annotation Quality Assurance 🚀

Artificial intelligence increasingly augments human annotation through pre-labeling and quality checking functions. These AI assistants handle routine cases while flagging complex situations for human attention. The human-AI collaboration model optimizes both efficiency and accuracy, with each contributor focusing on their strengths.

Blockchain technology promises immutable audit trails for annotation provenance and quality verification. Decentralized validation mechanisms could enable trustless quality assurance where multiple independent parties verify annotation accuracy without centralized oversight. These developments may reshape how distributed annotation teams operate and how data quality is guaranteed.

Augmented reality interfaces may transform how annotators interact with complex data types like 3D models or spatial information. Better visualization tools could reduce cognitive load and errors, while haptic feedback and immersive environments may enable more intuitive annotation of sophisticated datasets.

Implementing Your Benchmarking Strategy Today

Starting an annotation benchmarking program requires clear objectives, stakeholder buy-in, and realistic timelines. Begin with pilot programs on limited datasets, test different metrics and processes, and refine approaches based on initial learnings before scaling organization-wide. This iterative approach reduces risk while building internal expertise and confidence.

Documentation throughout implementation captures lessons learned and establishes institutional knowledge. Future teams benefit from understanding what worked, what didn’t, and why certain decisions were made. This knowledge base becomes increasingly valuable as programs mature and personnel change.

Success metrics for the benchmarking program itself ensure accountability and continuous improvement. Are quality issues being detected earlier? Has rework decreased? Do annotators report clearer understanding of expectations? Regular assessment of the quality assurance program mirrors the continuous monitoring applied to annotation work itself.

Organizations that invest in rigorous annotation benchmarking position themselves for success in an increasingly data-dependent world. The precision and performance gains achieved through systematic quality assurance compound over time, creating sustainable competitive advantages. While implementing comprehensive benchmarking requires upfront investment, the long-term returns in data quality, operational efficiency, and business outcomes far exceed the costs. The question isn’t whether to implement annotation benchmarking, but how quickly you can establish systems that maximize your team’s potential and ensure every indexed item contributes to excellence rather than undermining it.

Toni Santos is a bioacoustic researcher and conservation technologist specializing in the study of animal communication systems, acoustic monitoring infrastructures, and the sonic landscapes embedded in natural ecosystems. Through an interdisciplinary and sensor-focused lens, Toni investigates how wildlife encodes behavior, territory, and survival into the acoustic world, across species, habitats, and conservation challenges. His work is grounded in a fascination with animals not only as lifeforms, but as carriers of acoustic meaning. From endangered vocalizations to soundscape ecology and bioacoustic signal patterns, Toni uncovers the technological and analytical tools through which researchers preserve their understanding of the acoustic unknown. With a background in applied bioacoustics and conservation monitoring, Toni blends signal analysis with field-based research to reveal how sounds are used to track presence, monitor populations, and decode ecological knowledge. As the creative mind behind Nuvtrox, Toni curates indexed communication datasets, sensor-based monitoring studies, and acoustic interpretations that revive the deep ecological ties between fauna, soundscapes, and conservation science. His work is a tribute to: the archived vocal diversity of Animal Communication Indexing; the tracked movements of Applied Bioacoustics Tracking; the ecological richness of Conservation Soundscapes; and the layered detection networks of Sensor-based Monitoring. Whether you're a bioacoustic analyst, conservation researcher, or curious explorer of acoustic ecology, Toni invites you to explore the hidden signals of wildlife communication: one call, one sensor, one soundscape at a time.