The natural world is full of sound, and accessible open-source software now lets us decode, catalog, and interpret animal vocalizations at a scale that manual methods never allowed.

🎵 The Symphony Beneath Our Notice: Why Animal Call Indexing Matters

Every ecosystem on Earth pulses with acoustic information. From the haunting calls of whales in ocean depths to the intricate songs of tropical birds at dawn, animal vocalizations represent a treasure trove of biological data. These sounds tell stories of mating rituals, territorial disputes, predator warnings, and environmental health. For decades, researchers struggled with manual cataloging methods that were time-consuming, subjective, and limited in scope.

The revolution in bioacoustics has arrived through open-source software tools that democratize wildlife monitoring. Scientists, conservationists, and citizen researchers can now harness powerful algorithms to automatically detect, classify, and index animal calls from massive audio datasets. This transformation enables unprecedented insights into biodiversity, population dynamics, and ecosystem changes over time.

Open-source solutions have removed financial barriers that once restricted acoustic research to well-funded institutions. Community-driven development ensures these tools evolve rapidly, incorporating cutting-edge machine learning techniques while remaining accessible to users regardless of technical background or budget constraints.

🔧 Essential Open-Source Platforms Transforming Bioacoustics

The landscape of free and open-source bioacoustic tools has expanded dramatically in recent years. Several platforms have become de facto standards, each offering distinct capabilities for processing and analyzing animal vocalizations.

Raven Pro and Raven Lite: The Cornell Lab Foundation

Developed by the Cornell Lab of Ornithology, Raven is one of the most comprehensive solutions for acoustic analysis. Raven is not itself open-source: Raven Pro requires a paid license, while Raven Lite offers free access to core visualization and measurement tools. The software excels at creating spectrograms, visual representations of sound that reveal frequency patterns that are hard to pick out by ear.

Researchers use Raven to manually annotate calls, measure acoustic parameters like frequency ranges and duration, and extract quantitative data for statistical analysis. The platform supports batch processing, enabling efficient handling of hundreds of audio files simultaneously. Its robust annotation features make it invaluable for creating training datasets for machine learning models.
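To make the idea of a spectrogram concrete, here is a minimal sketch in plain Python. It is not how Raven computes spectrograms; the function names, the 64-sample frame size, and the synthetic 2 kHz test tone are all assumptions chosen for illustration. It slices a signal into overlapping frames, applies a Hann window, and takes a naive discrete Fourier transform of each frame.

```python
import cmath
import math

def stft_magnitudes(samples, frame_size=64, hop=32):
    """Return one list of magnitudes (bins 0..frame_size//2) per frame."""
    # A Hann window tapers each frame to reduce spectral leakage.
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / (frame_size - 1))
              for n in range(frame_size)]
    frames = []
    for start in range(0, len(samples) - frame_size + 1, hop):
        frame = [samples[start + n] * window[n] for n in range(frame_size)]
        mags = []
        for k in range(frame_size // 2 + 1):
            # Naive DFT: slow, but fine for a small illustrative example.
            acc = sum(frame[n] * cmath.exp(-2j * math.pi * k * n / frame_size)
                      for n in range(frame_size))
            mags.append(abs(acc))
        frames.append(mags)
    return frames

def peak_frequency(mags, sample_rate, frame_size):
    """Frequency in Hz of the strongest bin in one frame."""
    k = max(range(len(mags)), key=mags.__getitem__)
    return k * sample_rate / frame_size

# Synthetic stand-in for a recording: a pure 2 kHz tone sampled at 8 kHz.
sample_rate = 8000
samples = [math.sin(2 * math.pi * 2000 * n / sample_rate) for n in range(512)]

spectrogram = stft_magnitudes(samples)
peaks = [peak_frequency(m, sample_rate, 64) for m in spectrogram]
```

Each row of `spectrogram` is one time slice and each column one frequency bin. Measurements such as peak frequency or call duration are read off this grid; real tools use a fast Fourier transform rather than the naive loop shown here.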

Audacity: The Swiss Army Knife of Audio Analysis

Though not designed specifically for bioacoustics, Audacity has become an indispensable tool in many researchers’ workflows. This completely free, cross-platform audio editor offers powerful filtering, noise reduction, and manipulation capabilities that prepare raw field recordings for analysis.

Audacity shines in preprocessing tasks—removing background noise, isolating frequency ranges, and standardizing recording levels across diverse datasets. Its extensive plugin architecture allows customization for specific research needs, and its intuitive interface makes it accessible to beginners while remaining powerful enough for professional applications.
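Standardizing recording levels can also be scripted. The sketch below shows peak normalization, one common approach, and is not Audacity's own implementation. It assumes samples are floats in the range -1.0 to 1.0; the function name and the -6 dBFS target are illustrative choices.

```python
def peak_normalize(samples, target_dbfs=-1.0):
    """Scale samples so the loudest one sits at target_dbfs (dB relative to full scale)."""
    peak = max((abs(s) for s in samples), default=0.0)
    if peak == 0.0:
        # Silent input: nothing to scale, and dividing by zero must be avoided.
        return list(samples)
    target_peak = 10 ** (target_dbfs / 20)  # convert dB to linear amplitude
    gain = target_peak / peak
    return [s * gain for s in samples]

# A quiet recording (made-up values) brought up to a -6 dBFS peak.
quiet = [0.0, 0.1, -0.2, 0.05]
normalized = peak_normalize(quiet, target_dbfs=-6.0)
```

Peak normalization keeps the relative dynamics of a recording intact. Loudness-based normalization is an alternative when recordings contain occasional loud transients.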

Sonic Visualiser: Advanced Spectral Analysis

Sonic Visualiser brings sophisticated visualization and analysis capabilities to the bioacoustics community. Developed at Queen Mary University of London, this open-source application specializes in displaying audio data through multiple simultaneous views, enabling researchers to spot patterns and anomalies quickly.

The platform supports Vamp plugins—specialized audio analysis tools that can extract features, detect onsets, and perform pitch tracking. For animal call indexing, Sonic Visualiser’s annotation layers allow detailed marking of calls with metadata, creating structured datasets that feed into automated classification systems.
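A structured annotation dataset can be as simple as one row per marked call. The following sketch uses a hypothetical schema: the field names are assumptions for illustration, not Sonic Visualiser's export format. It represents each annotation as a time-frequency box with a label and serializes a batch to CSV.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields

@dataclass
class CallAnnotation:
    # Hypothetical schema: a time-frequency box plus a species or call-type label.
    recording: str
    start_s: float
    end_s: float
    low_hz: float
    high_hz: float
    label: str

    @property
    def duration_s(self):
        return self.end_s - self.start_s

def annotations_to_csv(annotations):
    """Serialize annotations to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer,
        fieldnames=[f.name for f in fields(CallAnnotation)],
        lineterminator="\n",
    )
    writer.writeheader()
    for annotation in annotations:
        writer.writerow(asdict(annotation))
    return buffer.getvalue()

# Made-up example rows.
rows = [
    CallAnnotation("site01_0600.wav", 1.25, 3.5, 2000.0, 6500.0, "song"),
    CallAnnotation("site01_0600.wav", 7.0, 7.5, 3000.0, 4000.0, "alarm call"),
]
csv_text = annotations_to_csv(rows)
```

Keeping start time, end time, and frequency bounds as separate numeric columns makes it straightforward to cut matching audio clips later when building training data.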

🤖 Machine Learning Meets Wildlife Monitoring

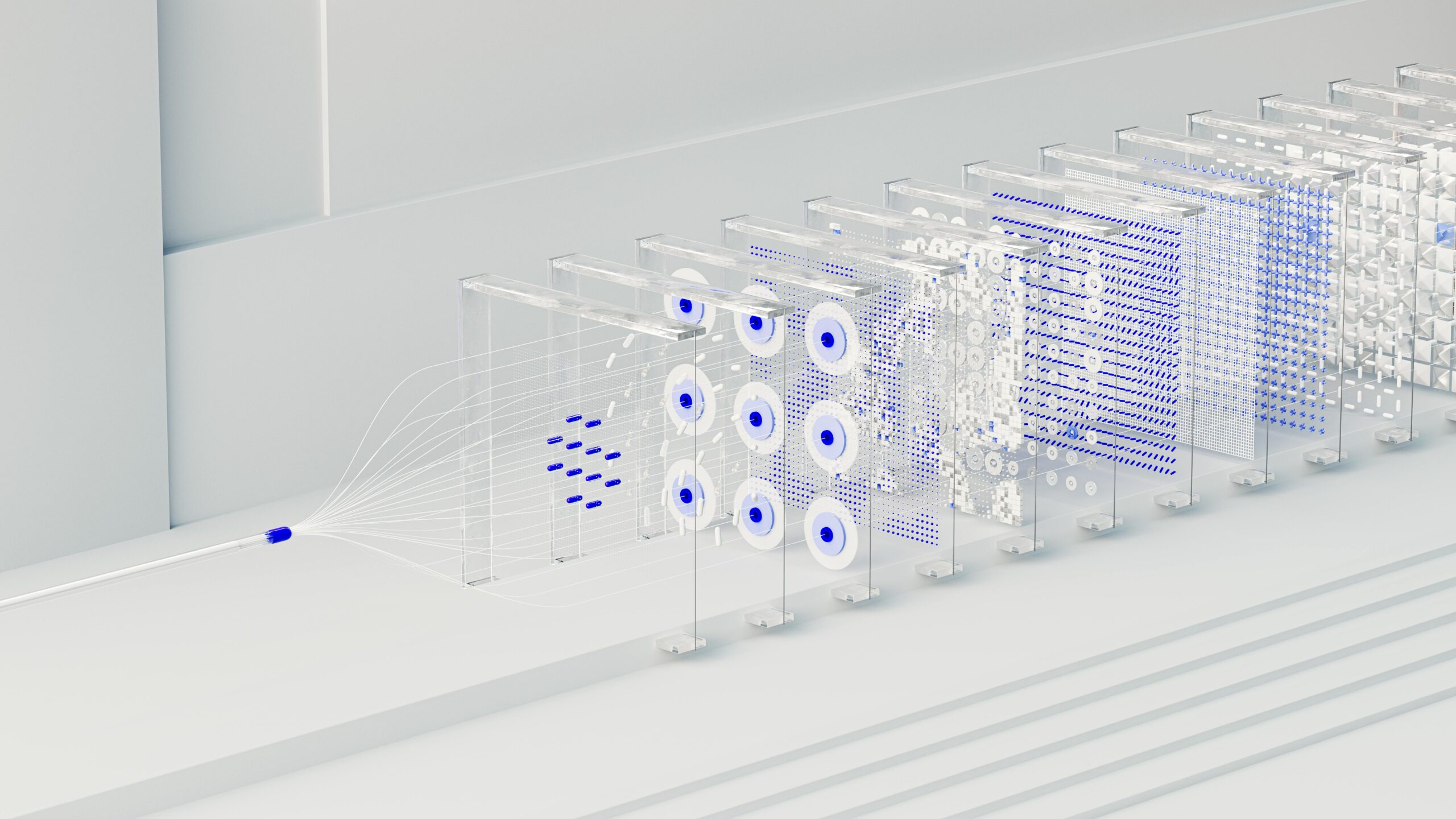

The true revolution in animal call indexing emerges from combining traditional acoustic analysis with modern machine learning algorithms. Open-source frameworks have made sophisticated pattern recognition accessible to the broader research community.

TensorFlow and Keras for Bioacoustic Classification

Google’s TensorFlow, along with the user-friendly Keras API, has become a cornerstone of automated animal call recognition. These deep learning frameworks enable researchers to build neural networks that learn to identify species-specific vocalizations from labeled training data.

Convolutional neural networks (CNNs) have proven particularly effective for spectrogram analysis, treating acoustic visualizations like images and detecting distinctive patterns that characterize different species’ calls. Transfer learning techniques allow researchers with limited datasets to leverage pre-trained models, adapting them to new species or environments with minimal additional training.
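The sliding-filter operation at the core of a convolutional layer can be shown without any deep learning framework. This sketch is a toy, not a trained model. The 5x5 "spectrogram" and the diagonal kernel are made up, and in a real CNN the kernel values are learned from data rather than set by hand. It shows how a small filter responds most strongly where the pattern it encodes, here a rising frequency sweep, appears in the grid.

```python
def cross_correlate_2d(image, kernel):
    """Slide kernel over image (no padding) and sum the elementwise products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

# Toy spectrogram: rows are frequency bins, columns are time steps.
# The diagonal of ones stands in for a short upward frequency sweep.
toy_spectrogram = [
    [0, 0, 0, 0, 0],
    [0, 1, 0, 0, 0],
    [0, 0, 1, 0, 0],
    [0, 0, 0, 1, 0],
    [0, 0, 0, 0, 0],
]

# Hand-set filter that matches a three-step upward sweep.
sweep_kernel = [
    [1, 0, 0],
    [0, 1, 0],
    [0, 0, 1],
]

response = cross_correlate_2d(toy_spectrogram, sweep_kernel)
# response == [[2, 0, 0], [0, 3, 0], [0, 0, 2]]
```

The largest response (3) sits where the kernel lines up exactly with the sweep. A CNN stacks many such filters and learns their values, so that later layers respond to whole call shapes rather than single strokes.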

BirdNET: Specialized Avian Recognition

BirdNET represents a breakthrough in accessible automated bird sound identification. Developed collaboratively by the Cornell Lab of Ornithology and Chemnitz University of Technology, this deep learning model recognizes several thousand bird species from audio recordings, with the exact count depending on the model version.

The platform offers multiple interfaces—web applications, smartphone apps, and Python libraries—making it versatile for different use cases. Researchers can process field recordings through BirdNET to generate preliminary species lists, dramatically reducing the time required for manual identification. The system’s confidence scores help users assess reliability and flag uncertain detections for human verification.
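Using confidence scores to separate reliable detections from those needing review can be sketched as a simple three-way split. The code below does not call BirdNET. The detection records, the field names, and the 0.8 and 0.3 thresholds are all assumptions for illustration, and suitable thresholds should be validated per species and per site.

```python
def triage(detections, accept_at=0.8, review_at=0.3):
    """Split detections into accepted, needs-review, and discarded lists."""
    accepted, review, discarded = [], [], []
    for det in detections:
        if det["confidence"] >= accept_at:
            accepted.append(det)
        elif det["confidence"] >= review_at:
            review.append(det)      # uncertain: route to a human verifier
        else:
            discarded.append(det)
    return accepted, review, discarded

# Made-up classifier output for one recording.
detections = [
    {"species": "Eurasian Wren", "start_s": 3.0, "confidence": 0.93},
    {"species": "European Robin", "start_s": 9.0, "confidence": 0.55},
    {"species": "Common Blackbird", "start_s": 12.0, "confidence": 0.12},
]

accepted, review, discarded = triage(detections)
```

Routing only the middle band to human reviewers concentrates manual effort where the classifier is least certain.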

Koogu and OpenSoundscape: Python-Powered Analysis

For researchers comfortable with programming, Python libraries like Koogu and OpenSoundscape provide flexible frameworks for building custom bioacoustic analysis pipelines. These tools handle the complexity of audio processing, feature extraction, and model training while allowing fine-tuned control over every step.

Koogu simplifies the creation of training datasets from annotated audio, implements data augmentation strategies to improve model robustness, and facilitates the training of custom classifiers for any species of interest. OpenSoundscape expands these capabilities with preprocessing utilities, visualization tools, and integration with computer vision techniques for spectrogram analysis.

📊 Building Effective Animal Call Databases

The value of animal call indexing extends beyond individual research projects when recordings and annotations become part of accessible databases. Open-source tools and platforms enable collaborative data sharing that accelerates discovery across the global research community.

Xeno-canto: Crowdsourcing Avian Acoustics

Xeno-canto is one of the world’s largest collections of bird sounds, built through community contributions. This open-access platform hosts hundreds of thousands of recordings from virtually every corner of the globe, each accompanied by metadata about location, date, vocalization type, and recording quality.

Researchers leverage Xeno-canto recordings to train machine learning models, conduct comparative bioacoustic studies, and document temporal changes in bird populations. The platform’s API enables programmatic access, allowing automated downloading of targeted datasets for specific species or regions.
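Programmatic access typically starts with building a query URL. The sketch below only constructs the URL and does not send a request. It assumes the v2-style endpoint and the `cnt:` (country) and `q:` (quality) search tags. The endpoint, tags, and any API key requirement should be checked against the current Xeno-canto API documentation, since newer API versions may differ. The helper name `build_query_url` is hypothetical.

```python
from urllib.parse import urlencode

# Assumption: v2-style endpoint. Verify against the current API documentation.
API_ENDPOINT = "https://xeno-canto.org/api/2/recordings"

def build_query_url(species, country=None, quality=None, page=1):
    """Build a search URL for one species, optionally filtered by country and quality."""
    terms = [species]
    if country:
        terms.append(f"cnt:{country}")   # assumed country tag
    if quality:
        terms.append(f"q:{quality}")     # assumed quality-rating tag
    params = {"query": " ".join(terms), "page": page}
    return f"{API_ENDPOINT}?{urlencode(params)}"

url = build_query_url("Troglodytes troglodytes", country="germany", quality="A")
```

Results are paginated, so a download script would normally loop over `page` and pause between requests to avoid overloading a community-run service.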

Macaulay Library: Comprehensive Natural History Archive

The Cornell Lab’s Macaulay Library extends beyond birds to encompass sounds from mammals, amphibians, and insects. This institutional repository maintains rigorous metadata standards and archival practices, ensuring long-term preservation and accessibility of acoustic biodiversity data.

The library’s integration with eBird and other citizen science platforms creates rich connections between acoustic data and observational records, enabling multidimensional analyses of species distributions and behaviors.

🌍 Real-World Applications Transforming Conservation

Open-source animal call indexing tools have moved from academic curiosity to essential conservation instruments. Field applications demonstrate tangible impacts on biodiversity monitoring and protection efforts worldwide.

Monitoring Endangered Species Populations

Acoustic monitoring offers non-invasive population assessment for elusive or endangered species. Researchers deploy autonomous recording units in remote habitats, collecting continuous audio data over weeks or months. Open-source analysis pipelines process these massive datasets, detecting target species calls and estimating abundance or distribution patterns.

Projects tracking endangered gibbons in Southeast Asian forests, right whales off North American coasts, and rare frogs in tropical rainforests all leverage open-source bioacoustic tools. The automated nature of these systems enables monitoring at scales impossible through traditional visual surveys.

Detecting Illegal Activities in Protected Areas

Conservation technology organizations deploy acoustic sensor networks that listen for indicators of poaching, illegal logging, or unauthorized vehicle access in protected wilderness areas. Machine learning models trained on chainsaw sounds, gunshots, or vehicle engines provide real-time alerts to rangers, enabling rapid response to threats.

These systems build upon open-source frameworks, adapting tools designed for animal call detection to recognize human activities. The dual-use nature of bioacoustic technology maximizes conservation impact by protecting both wildlife directly and their habitats from destructive exploitation.

Climate Change Impact Assessment

Long-term acoustic datasets reveal how animal communities respond to environmental changes. Phenological shifts—changes in the timing of seasonal behaviors like breeding or migration—become apparent through analysis of vocalization patterns across years.
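One simple way to look for a phenological shift is to track the first day of the year on which a species is detected. The sketch below assumes detections have already been reduced to calendar dates; the dates are made up for illustration and the function name is hypothetical.

```python
from datetime import date

def first_detection_day(dates):
    """Map each year to the day of year (1-366) of its earliest detection."""
    earliest = {}
    for d in dates:
        day_of_year = d.timetuple().tm_yday
        if d.year not in earliest or day_of_year < earliest[d.year]:
            earliest[d.year] = day_of_year
    return dict(sorted(earliest.items()))

# Made-up detection dates for one migratory species at one site.
detections = [
    date(2019, 5, 2), date(2019, 4, 20),
    date(2021, 4, 12),
    date(2023, 4, 5), date(2023, 4, 9),
]

arrival = first_detection_day(detections)
# arrival == {2019: 110, 2021: 102, 2023: 95}
```

A steadily decreasing day of year would suggest earlier arrival, but only if recording effort is comparable across years. A recorder deployed later in one season would shift the first detection for reasons unrelated to the animals.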

Open-source indexing tools enable researchers to process decades of archived recordings, detecting subtle trends that indicate ecosystem stress or adaptation. These insights inform conservation strategies and help predict future impacts as climate conditions continue evolving.

🎓 Getting Started: Practical Steps for Aspiring Bioacoustic Researchers

The accessibility of open-source tools means anyone with curiosity and dedication can contribute to animal call indexing projects. Whether you’re a student, amateur naturalist, or career-changing professional, practical pathways exist to develop bioacoustic skills.

Building Foundational Knowledge

Begin by familiarizing yourself with basic acoustic concepts—frequency, amplitude, spectrograms, and how sound propagates through different environments. Numerous free online courses cover audio signal processing fundamentals, often requiring only high school mathematics as prerequisites.

Simultaneously, develop species identification skills for your region of interest. Learning to recognize animal calls by ear provides essential context that informs how you approach automated analysis. Mobile apps and online libraries offer excellent training resources with verified recordings and quiz features.

Hands-On Practice with Open Datasets

Download sample recordings from Xeno-canto or the Macaulay Library and experiment with visualization tools like Audacity or Sonic Visualiser. Practice creating spectrograms, measuring call parameters, and annotating vocalizations. This hands-on experience builds intuition about acoustic patterns and the challenges of automated detection.

Many open-source projects provide tutorial datasets and guided exercises. Working through these structured learning experiences accelerates skill development and exposes you to best practices established by experienced researchers.

Contributing to Citizen Science Projects

Platforms like Zooniverse host bioacoustic citizen science projects where volunteers help annotate recordings, providing valuable training data for machine learning models. These contributions directly support active research while teaching you to recognize subtle variations in animal vocalizations.

Consider establishing your own acoustic monitoring site using affordable recording equipment. Consistent sampling of a local area over time generates longitudinal data valuable for understanding seasonal patterns and long-term population trends.

⚡ Overcoming Common Challenges in Acoustic Analysis

While open-source tools have dramatically lowered barriers to bioacoustic research, practitioners still encounter obstacles that require creative solutions and perseverance.

Managing Massive Datasets

Continuous acoustic monitoring generates enormous data volumes. A single recorder capturing uncompressed 48 kHz, 16-bit mono audio produces roughly 8 GB per day, so a deployment of several recorders running for weeks quickly reaches terabytes. Effective data management strategies become essential, including automated preprocessing pipelines that filter relevant segments and compress raw recordings.
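Storage needs are easy to estimate before a deployment. This sketch computes the size of uncompressed PCM audio, ignoring the small file header. The sample rate, bit depth, and the ten-recorder, thirty-day deployment are assumed values for illustration.

```python
def pcm_bytes(duration_s, sample_rate=48_000, bit_depth=16, channels=1):
    """Size in bytes of uncompressed PCM audio of the given duration."""
    return duration_s * sample_rate * (bit_depth // 8) * channels

SECONDS_PER_DAY = 24 * 60 * 60

per_recorder_per_day = pcm_bytes(SECONDS_PER_DAY)   # 8,294,400,000 bytes, about 8.3 GB
deployment = per_recorder_per_day * 10 * 30         # 10 recorders for 30 days
deployment_tb = deployment / 1e12                   # about 2.49 TB
```

Lossless compression such as FLAC, lower sample rates for low-frequency species, and duty-cycled recording schedules are common ways to bring these numbers down.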

Cloud computing platforms offer scalable processing power, though costs can accumulate quickly. Many researchers develop hybrid approaches, performing initial screening locally and using cloud resources only for computationally intensive tasks like deep learning model training.

Dealing with Environmental Noise

Field recordings inevitably capture wind, rain, insects, and anthropogenic sounds that complicate analysis. Sophisticated filtering techniques help, but they risk removing actual animal calls or introducing artifacts. Balancing noise reduction with signal preservation requires experimentation and validation.

Training machine learning models with augmented data—artificially adding noise to clean recordings—improves robustness to real-world conditions. This technique helps classifiers generalize better across diverse acoustic environments.
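Noise augmentation is usually controlled through a target signal-to-noise ratio (SNR). The sketch below is a minimal version under stated assumptions: both signals are equal-length lists of floats, the noise is not silent, and the synthetic tone and Gaussian noise stand in for a clean call and a field noise recording.

```python
import math
import random

def rms(signal):
    """Root-mean-square amplitude of a signal."""
    return math.sqrt(sum(v * v for v in signal) / len(signal))

def mix_at_snr(clean, noise, snr_db):
    """Add noise to clean, scaled so the result has the requested SNR in dB."""
    # Gain that makes rms(clean) / rms(gain * noise) equal 10 ** (snr_db / 20).
    gain = rms(clean) / (rms(noise) * 10 ** (snr_db / 20))
    return [c + gain * n for c, n in zip(clean, noise)]

rng = random.Random(0)  # fixed seed so the example is repeatable
clean = [math.sin(2 * math.pi * 1000 * n / 16_000) for n in range(1600)]
noise = [rng.gauss(0.0, 1.0) for _ in range(1600)]

noisy = mix_at_snr(clean, noise, snr_db=10.0)
```

Generating several copies of each clean clip at different SNR values, for example from 0 to 20 dB, exposes a classifier to a range of recording conditions during training.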

Addressing Species with Limited Training Data

While common species benefit from extensive recording collections, rare or understudied animals lack sufficient labeled data for traditional supervised learning approaches. Transfer learning, where models trained on related species are adapted, offers one solution. Semi-supervised and unsupervised techniques that cluster similar sounds without requiring extensive labels represent another promising direction.

🚀 The Future Landscape of Open-Source Bioacoustics

The trajectory of open-source animal call indexing points toward increasingly sophisticated, accessible, and impactful tools. Several emerging trends promise to reshape the field in coming years.

Real-time processing capabilities continue improving, enabling edge computing devices to run complex classification models in the field without internet connectivity. Solar-powered autonomous monitoring stations with onboard AI can operate for extended periods in remote locations, transmitting only detection summaries rather than raw audio.

Federated learning approaches allow collaborative model training across institutions without sharing sensitive location data about endangered species. This privacy-preserving technique enables researchers worldwide to contribute to improved classifiers while protecting vulnerable populations from potential exploitation.
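The aggregation step behind many federated learning setups is federated averaging: each institution trains locally and shares only model parameters, which a coordinator combines weighted by local dataset size. The sketch below shows that one step with made-up numbers. Flat lists of floats stand in for model weights, and the function name is hypothetical.

```python
def federated_average(updates):
    """Combine (weights, n_examples) pairs into one example-weighted average."""
    total = sum(n for _, n in updates)
    size = len(updates[0][0])
    return [
        sum(weights[i] * n for weights, n in updates) / total
        for i in range(size)
    ]

# Two institutions share parameters and example counts, never recordings or locations.
updates = [
    ([1.0, 2.0], 100),   # site A: trained on 100 labeled clips
    ([3.0, 6.0], 300),   # site B: trained on 300 labeled clips
]

global_weights = federated_average(updates)
# global_weights == [2.5, 5.0]
```

Only the parameter vectors and example counts leave each site. Model updates can still leak some information about the training data, so deployments that handle sensitive species locations often add further safeguards.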

Integration with other sensor modalities—cameras, weather stations, GPS tracking—creates comprehensive environmental monitoring systems where acoustic data gains context from complementary information streams. These multimodal approaches reveal complex ecological relationships invisible through single-sensor deployments.

🌟 Empowering Global Conservation Through Shared Knowledge

The open-source philosophy underlying modern bioacoustic tools reflects a fundamental belief that environmental challenges require collaborative, transparent, and inclusive solutions. By removing proprietary barriers and sharing knowledge freely, the global community accelerates progress toward understanding and protecting biodiversity.

Every researcher who releases an open-source tool, every citizen scientist who contributes annotations, and every institution that shares acoustic datasets multiplies the collective capacity to decode nature’s sonic tapestry. This democratization of technology ensures that conservation efforts can scale to match the magnitude of challenges facing wildlife worldwide.

Animal call indexing stands as more than a technical achievement—it represents humanity’s commitment to listening carefully to the natural world, understanding its complexities, and acting as responsible stewards of Earth’s irreplaceable biological heritage. Open-source tools provide the means; dedicated individuals provide the will.

Toni Santos is a bioacoustic researcher and conservation technologist specializing in the study of animal communication systems, acoustic monitoring infrastructures, and the sonic landscapes embedded in natural ecosystems. Through an interdisciplinary and sensor-focused lens, Toni investigates how wildlife encodes behavior, territory, and survival into the acoustic world across species, habitats, and conservation challenges.

His work is grounded in a fascination with animals not only as lifeforms, but as carriers of acoustic meaning. From endangered vocalizations to soundscape ecology and bioacoustic signal patterns, Toni uncovers the technological and analytical tools through which researchers preserve their understanding of the acoustic unknown.

With a background in applied bioacoustics and conservation monitoring, Toni blends signal analysis with field-based research to reveal how sounds are used to track presence, monitor populations, and decode ecological knowledge. As the creative mind behind Nuvtrox, Toni curates indexed communication datasets, sensor-based monitoring studies, and acoustic interpretations that revive the deep ecological ties between fauna, soundscapes, and conservation science.

His work is a tribute to:

The archived vocal diversity of Animal Communication Indexing

The tracked movements of Applied Bioacoustics Tracking

The ecological richness of Conservation Soundscapes

The layered detection networks of Sensor-based Monitoring

Whether you're a bioacoustic analyst, conservation researcher, or curious explorer of acoustic ecology, Toni invites you to explore the hidden signals of wildlife communication: one call, one sensor, one soundscape at a time.