Call indexing has revolutionized customer service and business intelligence, but noisy recordings remain a persistent challenge that undermines accuracy and efficiency.

🎯 Understanding the Noise Problem in Call Recording Systems

Every organization that relies on call indexing faces the same fundamental obstacle: background noise corrupts the audio quality that speech recognition engines depend on. Whether it’s the hum of an office environment, street traffic bleeding through mobile calls, or technical interference from outdated equipment, these unwanted sounds create significant barriers to effective call analysis.

The impact extends far beyond simple inconvenience. Poor audio quality directly affects transcription accuracy, sentiment analysis reliability, and ultimately, the business insights extracted from customer conversations. When call indexing systems struggle with noisy recordings, compliance monitoring becomes unreliable, quality assurance suffers, and valuable customer intelligence slips through the cracks.

Modern contact centers process thousands of calls daily, so even a small percentage of unusable recordings adds up quickly. For example, at 10,000 calls a day a 3% failure rate leaves 300 conversations a day that cannot be reliably searched or analyzed. Understanding the nature of these noise challenges is the first step toward implementing effective solutions that preserve the integrity of your call indexing infrastructure.

🔊 Types of Noise That Plague Call Recording Quality

Not all noise is created equal, and identifying the specific type affecting your recordings is crucial for selecting appropriate mitigation strategies. Environmental noise represents the most common category, encompassing everything from office chatter and keyboard clacking to air conditioning systems and traffic sounds.

Electromagnetic Interference and Technical Noise

Technical noise originates from the recording equipment itself or the transmission infrastructure. This includes electrical hum, radio frequency interference, codec artifacts, and signal compression distortion. These issues often manifest as consistent patterns throughout recordings, making them particularly troublesome for automated indexing systems that rely on clean audio signals.
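Electrical hum is the easiest of these consistent patterns to target, because it sits at the mains frequency (50 or 60 Hz, depending on the region) and its harmonics. The following is a minimal sketch rather than a production filter: a second-order notch built from the RBJ Audio EQ Cookbook formulas, assuming float samples, 8 kHz sampling, and 60 Hz mains. `notch_filter` and its defaults are hypothetical, and real hum usually needs extra notches at harmonics such as 120 Hz and 180 Hz.

```python
import math

def notch_filter(samples, fs=8000.0, f0=60.0, q=30.0):
    """Remove a narrow band around f0 (e.g. mains hum) with a biquad notch.

    Coefficients follow the RBJ Audio EQ Cookbook notch design; q controls
    how narrow the notch is (bandwidth is roughly f0 / q).
    """
    w0 = 2.0 * math.pi * f0 / fs
    alpha = math.sin(w0) / (2.0 * q)
    cos_w0 = math.cos(w0)
    a0 = 1.0 + alpha
    # Feed-forward (b) and feedback (a) coefficients, normalized by a0
    b0, b1, b2 = 1.0 / a0, -2.0 * cos_w0 / a0, 1.0 / a0
    a1, a2 = -2.0 * cos_w0 / a0, (1.0 - alpha) / a0

    out = []
    x1 = x2 = y1 = y2 = 0.0  # previous inputs and outputs (filter state)
    for x in samples:
        y = b0 * x + b1 * x1 + b2 * x2 - a1 * y1 - a2 * y2
        out.append(y)
        x2, x1 = x1, x
        y2, y1 = y1, y
    return out
```

A narrow notch leaves speech frequencies essentially untouched; the trade-off is a short settling period at the start of the recording while the filter state builds up.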

Network-related noise affects VoIP calls specifically, introducing packet loss, jitter, and latency that degrade audio quality. As businesses increasingly adopt cloud-based communication platforms, understanding and addressing these digital artifacts becomes essential for maintaining call indexing effectiveness.
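Jitter and packet loss can be quantified directly from RTP packet metadata. The sketch below follows the interarrival jitter estimator defined in RFC 3550 (a running average with gain 1/16), assuming send and receive times in milliseconds rather than RTP timestamp units. The function names are hypothetical, and the loss calculation ignores 16-bit sequence-number wraparound.

```python
def interarrival_jitter(send_times, recv_times):
    """Running interarrival jitter estimate in the style of RFC 3550, section 6.4.1.

    Both lists must use the same unit (milliseconds here); returns the final estimate.
    """
    jitter = 0.0
    for i in range(1, len(send_times)):
        # Change in transit time between consecutive packets
        d = (recv_times[i] - recv_times[i - 1]) - (send_times[i] - send_times[i - 1])
        jitter += (abs(d) - jitter) / 16.0
    return jitter

def loss_fraction(seq_numbers):
    """Fraction of packets missing, from received RTP sequence numbers (no wraparound handling)."""
    expected = max(seq_numbers) - min(seq_numbers) + 1
    return 1.0 - len(set(seq_numbers)) / expected
```

Packets sent every 20 ms that all arrive with the same delay give zero jitter; a single packet arriving 5 ms late raises the estimate by 5/16 ms.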

Human-Generated Acoustic Interference

Beyond environmental and technical sources, human behavior contributes significantly to recording quality issues. Agents working in open-plan offices, customers calling from crowded locations, or simultaneous conversations in shared spaces create complex acoustic environments that challenge even sophisticated noise reduction algorithms.

Breathing sounds, paper rustling, eating or drinking during calls, and sudden loud events like door slams or alerts all add layers of interference that obscure the primary conversation. These transient noises require different handling approaches compared to constant background sounds.
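One simple way to separate transient events from steady background noise is to compare each short frame's energy with the recording's typical frame energy. The median works well as that reference because a few loud frames barely move it. A minimal sketch, assuming float samples at 8 kHz and 20 ms frames; `find_transients` and the 10x energy ratio are hypothetical choices, not standard values.

```python
import statistics

def find_transients(samples, frame_len=160, ratio=10.0):
    """Return indices of frames whose energy jumps far above the typical frame energy.

    frame_len=160 is 20 ms at 8 kHz; ratio is an illustrative threshold.
    """
    energies = []
    for start in range(0, len(samples) - frame_len + 1, frame_len):
        frame = samples[start:start + frame_len]
        energies.append(sum(s * s for s in frame) / frame_len)
    if not energies:
        return []
    baseline = statistics.median(energies) + 1e-12  # guard against all-silent input
    return [i for i, e in enumerate(energies) if e > ratio * baseline]
```

Flagged frames can then be routed to transient-specific handling (for example, short attenuation around a door slam) instead of being treated like constant background noise.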

🛠️ Advanced Technologies for Noise Suppression

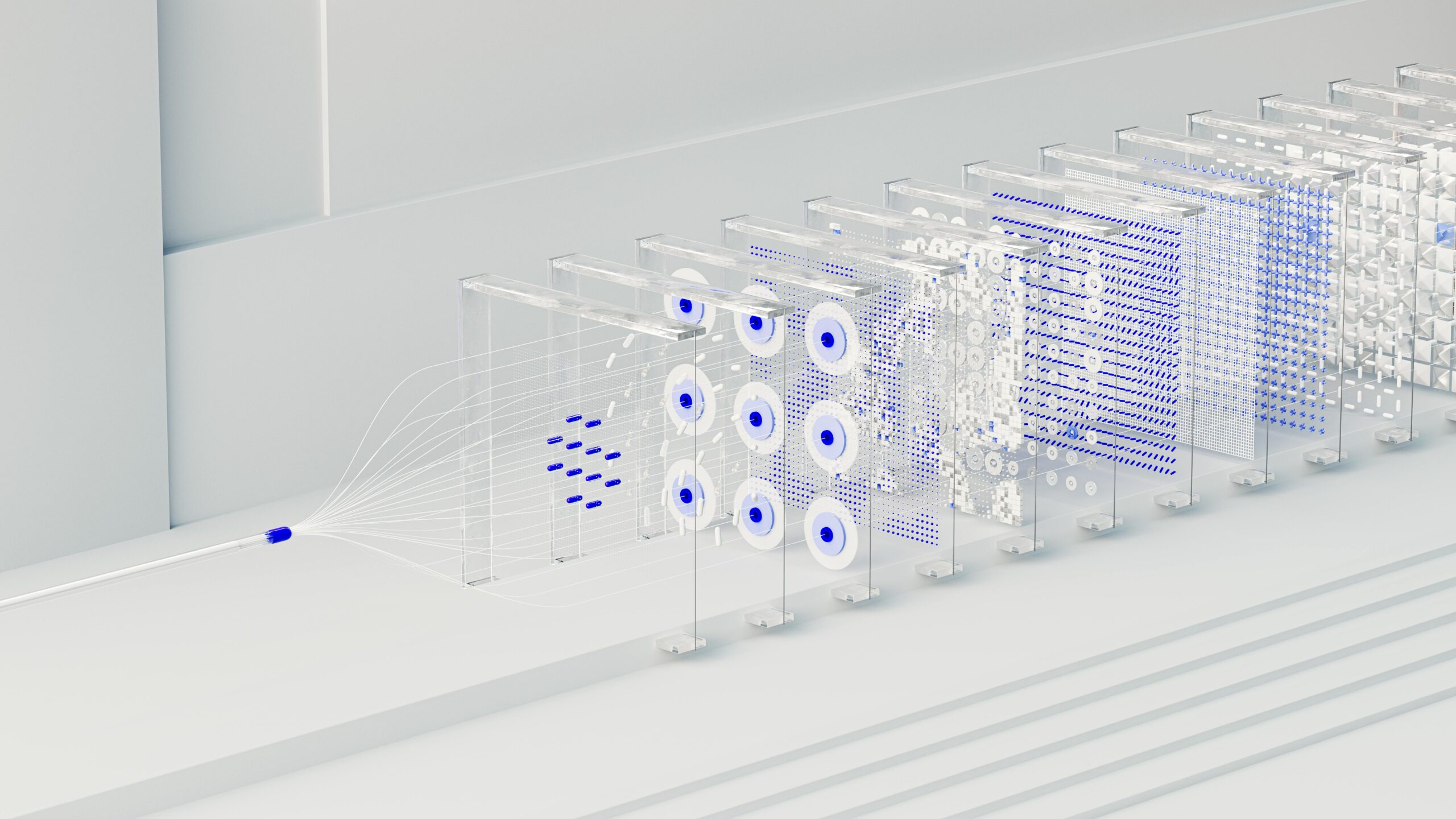

Artificial intelligence has turned noise reduction from a fixed filtering task into a learned signal processing problem. Modern solutions use machine learning models trained on large collections of speech and noise recordings to separate speech from non-speech components far more precisely than fixed filters can.

Deep neural networks excel at identifying speech patterns even in severely compromised recordings. These systems learn the spectral characteristics of human voice across different frequencies and can reconstruct degraded signals by predicting missing or corrupted information based on contextual audio data.

Real-Time vs. Post-Processing Approaches

Organizations must choose between real-time noise cancellation during call capture and post-processing enhancement applied to stored recordings. Real-time solutions suppress noise before the audio is written, so every stored file is already enhanced and needs no second processing pass; the trade-off is that anything the suppressor removes or distorts cannot be recovered later.

Post-processing offers flexibility for legacy recordings and allows for continuous improvement as algorithms advance. Many enterprises implement hybrid approaches, applying basic real-time filtering followed by more computationally intensive enhancement during the indexing phase.

| Approach | Advantages | Disadvantages |
|---|---|---|
| Real-Time Processing | Prevents noise storage, immediate quality improvement | Limited by processing power, cannot improve past recordings |
| Post-Processing | Works on existing files, uses advanced algorithms | Requires additional storage, increases indexing time |
| Hybrid Solution | Combines benefits of both approaches | More complex implementation, higher costs |

📊 Measuring and Monitoring Audio Quality Metrics

Effective noise management requires quantifiable metrics that objectively assess recording quality. Signal-to-noise ratio (SNR) provides the foundational measurement: the ratio of speech power to noise power, usually expressed in decibels. Professional call indexing systems should target SNR values above 20 dB for reliable transcription accuracy.
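In decibels, SNR = 10 · log10(P_speech / P_noise). In practice the noise power is estimated from pauses in the conversation and the speech power from active segments. A minimal sketch, assuming stationary noise that is uncorrelated with speech, so the noise power can simply be subtracted from the speech-plus-noise power; `snr_db` is a hypothetical helper, not a standard API.

```python
import math

def mean_power(samples):
    return sum(s * s for s in samples) / len(samples)

def snr_db(speech_segment, noise_segment):
    """Estimate SNR in dB from a speech-active segment and a noise-only segment.

    Assumes the noise-only segment (e.g. a pause) represents the noise under the speech too.
    """
    p_total = mean_power(speech_segment)      # speech + noise
    p_noise = mean_power(noise_segment)
    p_speech = max(p_total - p_noise, 1e-12)  # uncorrelated powers add, so subtract
    return 10.0 * math.log10(p_speech / p_noise)
```

A call measuring, say, 14 dB by this estimate would fall short of the 20 dB target above and would be a candidate for enhancement before indexing.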

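Perceptual Evaluation of Speech Quality (PESQ, ITU-T P.862) and Perceptual Objective Listening Quality Assessment (POLQA, ITU-T P.863) offer more sophisticated metrics that correlate closely with human perception of audio quality. Both are full-reference measurements that compare a degraded signal against a clean reference, so they suit controlled tests of equipment and codecs better than routine scoring of live calls, where no clean reference exists. These standardized measurements enable consistent evaluation across different recording systems and time periods.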

Establishing Quality Baselines and Alerts

Proactive monitoring prevents quality degradation from impacting business operations. Implementing automated quality assessment for every recorded call allows organizations to identify problematic equipment, environmental issues, or configuration problems before they affect large volumes of recordings.

Setting threshold-based alerts ensures technical teams receive immediate notification when audio quality falls below acceptable standards. These systems should track quality trends over time, identifying gradual degradation that might otherwise go unnoticed until significant damage occurs to indexing accuracy.
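A sketch of both alert types for a single recording line, assuming each call already has an SNR estimate: a hard floor catches individual bad calls, and comparing a rolling mean against an initial baseline catches slow drift. The 20 dB floor echoes the target above; the window size, the 3 dB drift margin, and the `QualityMonitor` class are hypothetical.

```python
from collections import deque
from statistics import mean

class QualityMonitor:
    """Hypothetical per-line monitor that flags single bad calls and gradual degradation."""

    def __init__(self, floor_db=20.0, window=50, drift_db=3.0):
        self.floor_db = floor_db
        self.drift_db = drift_db
        self.baseline = None                # mean SNR of the first full window
        self.recent = deque(maxlen=window)  # rolling window of recent SNR values

    def record(self, snr_db):
        """Add one call's SNR and return a list of alert messages (possibly empty)."""
        alerts = []
        if snr_db < self.floor_db:
            alerts.append(f"call below floor: {snr_db:.1f} dB")
        self.recent.append(snr_db)
        if len(self.recent) == self.recent.maxlen:
            current = mean(self.recent)
            if self.baseline is None:
                self.baseline = current     # first full window sets the reference
            elif self.baseline - current > self.drift_db:
                alerts.append(f"gradual drift: {self.baseline:.1f} -> {current:.1f} dB")
        return alerts
```

In this sketch a line whose calls all stay above the floor can still raise a drift alert, which is exactly the slow degradation that per-call thresholds miss.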

🎤 Optimizing Recording Infrastructure for Clean Audio

The best noise reduction strategy begins with prevention through proper infrastructure design. Hardware selection significantly impacts baseline recording quality, with professional-grade equipment featuring superior analog-to-digital converters, better shielding against electromagnetic interference, and higher sampling rates that preserve speech fidelity.

Headset quality directly affects both sides of customer conversations. Noise-canceling microphones with directional pickup patterns focus on the agent’s voice while minimizing environmental sounds. Investing in quality headsets delivers immediate improvements that post-processing can only partially replicate.

Network Configuration for VoIP Call Quality

Voice over IP introduces unique quality challenges that require specific network optimizations. Quality of Service (QoS) configurations prioritize voice traffic over other network data, reducing packet loss and latency that degrade audio quality. Proper bandwidth allocation ensures sufficient capacity even during peak usage periods.
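Bandwidth allocation starts from per-call arithmetic: codec payload plus the IP, UDP, and RTP headers carried by every packet. A minimal sketch, assuming IPv4 with no header compression and ignoring Layer 2 overhead (which varies by link type); `voip_bandwidth_kbps` is a hypothetical helper.

```python
IP_UDP_RTP_HEADER_BYTES = 20 + 8 + 12  # IPv4 + UDP + RTP headers per packet

def voip_bandwidth_kbps(codec_kbps, ptime_ms=20):
    """Per-direction IP bandwidth of one call: codec payload plus per-packet headers."""
    packets_per_sec = 1000 / ptime_ms
    payload_bytes = codec_kbps * 1000 / 8 / packets_per_sec
    packet_bits = (payload_bytes + IP_UDP_RTP_HEADER_BYTES) * 8
    return packet_bits * packets_per_sec / 1000
```

For a 64 kbps codec such as G.711 or G.722 at 20 ms packetization this gives 80 kbps per direction, and 24 kbps for 8 kbps G.729, so header overhead weighs most heavily on low-bitrate codecs. Multiplying by peak concurrent calls gives the capacity the QoS policy must protect.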

Codec selection balances audio quality against bandwidth consumption. While highly compressed codecs reduce network load, they introduce artifacts that complicate noise reduction efforts. Organizations serious about call indexing accuracy should favor wideband codecs like G.722 or Opus, which carry speech content up to about 7 kHz or beyond rather than the roughly 3.4 kHz ceiling of narrowband telephony codecs such as G.711.

🤖 Implementing AI-Powered Speech Enhancement

Artificial intelligence has made it possible to salvage recordings that were once considered too compromised to use. Modern speech enhancement algorithms use neural networks trained on paired datasets of clean and noisy audio to learn noise patterns and removal strategies that traditional filtering cannot achieve.
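Paired datasets are commonly assembled by mixing separately recorded clean speech and noise at controlled SNRs, so each noisy training example has an exact clean target. A minimal sketch of that mixing step, assuming float sample lists and a noise clip at least as long as the speech; `mix_at_snr` is a hypothetical helper.

```python
import math

def mix_at_snr(clean, noise, snr_db):
    """Return (noisy, clean) with the noise scaled so the mixture has the requested SNR."""
    if len(noise) < len(clean):
        raise ValueError("noise clip must be at least as long as the clean clip")
    noise = noise[:len(clean)]  # use only the overlapping part
    p_clean = sum(s * s for s in clean) / len(clean)
    p_noise = sum(n * n for n in noise) / len(noise)
    # Choose gain g so that p_clean / (g^2 * p_noise) == 10 ** (snr_db / 10)
    gain = math.sqrt(p_clean / (p_noise * 10 ** (snr_db / 10)))
    noisy = [c + gain * n for c, n in zip(clean, noise)]
    return noisy, list(clean)
```

Sweeping `snr_db` over a range (for example, from 0 dB to 20 dB) exposes a model to both mild and severe conditions from the same source material.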

Recurrent neural networks (RNNs) and convolutional neural networks (CNNs) process audio spectrograms to identify and isolate speech components from noise. These systems adapt to different noise types without manual tuning, making them particularly valuable for contact centers dealing with diverse recording conditions.
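Many of these networks work on a short-time Fourier transform (STFT): the model looks at the noisy spectrogram and predicts, for every time-frequency bin, how much of the energy to keep. A common training target is the ideal ratio mask, |S|² / (|S|² + |N|²), computed from the paired clean speech S and noise N. The sketch below covers only the signal-processing scaffolding, assuming NumPy, 256-sample frames, and 50% overlap: it computes the oracle mask from known speech and noise and applies it in place of a trained network's prediction, which is out of scope here. The helper names are hypothetical.

```python
import numpy as np

N_FFT, HOP = 256, 128
WINDOW = np.hanning(N_FFT + 1)[:-1]  # periodic Hann: 50%-overlapped copies sum to 1

def stft(x):
    starts = range(0, len(x) - N_FFT + 1, HOP)
    return np.array([np.fft.rfft(x[i:i + N_FFT] * WINDOW) for i in starts])

def istft(spec, length):
    out = np.zeros(length)
    for k, frame in enumerate(spec):
        # Analysis windows already sum to 1, so plain overlap-add reconstructs the signal
        out[k * HOP:k * HOP + N_FFT] += np.fft.irfft(frame, n=N_FFT)
    return out

def ideal_ratio_mask(clean, noise):
    """Training target: fraction of each bin's power that belongs to speech."""
    s, n = np.abs(stft(clean)) ** 2, np.abs(stft(noise)) ** 2
    return s / (s + n + 1e-12)

def apply_mask(noisy, mask):
    """Scale each noisy STFT bin by the mask (a trained model would predict it) and resynthesize."""
    return istft(stft(noisy) * mask, len(noisy))
```

During training the network learns to map the noisy spectrogram to a mask like this one; at inference only the noisy recording is available, and the predicted mask takes the oracle's place.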

Training Custom Models for Your Environment

While pre-trained models offer excellent general-purpose performance, organizations with specific acoustic environments benefit from custom model training. Collecting representative samples of your actual recording conditions allows AI systems to specialize in the exact noise patterns your business encounters.

This approach proves especially valuable for industries with unique acoustic signatures, such as manufacturing facilities with specific machinery noise or healthcare environments with medical equipment interference. In such environments custom training typically improves indexing accuracy over generic models, and the gain is straightforward to verify by comparing transcription accuracy on held-out recordings from the same sites.

🔍 Preprocessing Strategies Before Indexing

Strategic preprocessing transforms marginal recordings into indexable assets. Audio normalization equalizes volume levels across recordings, ensuring consistent signal strength that speech recognition engines expect. This proves particularly important when calls originate from diverse sources with varying recording levels.
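A minimal RMS-normalization sketch, assuming float samples in the range -1 to 1 and measuring level as RMS relative to full scale (1.0). The -20 dBFS target and 0.99 peak ceiling are illustrative assumptions, and `normalize_rms` is a hypothetical helper; the gain is capped so that loud transients do not clip.

```python
import math

def normalize_rms(samples, target_dbfs=-20.0, peak_ceiling=0.99):
    """Scale float samples so their RMS level reaches target_dbfs without clipping."""
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    if rms == 0.0:
        return list(samples)  # silence: nothing to normalize
    gain = 10 ** (target_dbfs / 20) / rms
    peak = max(abs(s) for s in samples)
    gain = min(gain, peak_ceiling / peak)  # never push the loudest sample past the ceiling
    return [s * gain for s in samples]
```

Capping the gain means recordings with large peaks may end up below the target level; a limiter or compressor stage is the usual remedy when that matters.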

Spectral gating selectively removes frequency ranges dominated by noise while preserving speech frequencies. This technique works exceptionally well for constant background noise like HVAC systems or electrical hum, removing these elements without affecting conversation intelligibility.
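Spectral gating is typically driven by a noise profile measured from a speech-free stretch of audio: per frequency bin, a threshold is set from the mean and spread of the noise magnitude, and bins that do not rise above it are attenuated. A sketch under those assumptions, using NumPy with 256-sample frames at 50% overlap; `spectral_gate`, `n_std`, and `floor` are hypothetical names with illustrative settings.

```python
import numpy as np

N_FFT, HOP = 256, 128
WINDOW = np.hanning(N_FFT + 1)[:-1]  # periodic Hann, sums to 1 at 50% overlap

def stft(x):
    starts = range(0, len(x) - N_FFT + 1, HOP)
    return np.array([np.fft.rfft(x[i:i + N_FFT] * WINDOW) for i in starts])

def istft(spec, length):
    out = np.zeros(length)
    for k, frame in enumerate(spec):
        out[k * HOP:k * HOP + N_FFT] += np.fft.irfft(frame, n=N_FFT)
    return out

def spectral_gate(noisy, noise_clip, n_std=1.5, floor=0.1):
    """Attenuate time-frequency bins that do not rise above the noise profile.

    noise_clip: a stretch of the recording with no speech (e.g. a hold or a pause).
    """
    noise_mag = np.abs(stft(noise_clip))
    threshold = noise_mag.mean(axis=0) + n_std * noise_mag.std(axis=0)  # one value per bin
    spec = stft(noisy)
    keep = np.abs(spec) > threshold    # compared frame by frame via broadcasting
    gain = np.where(keep, 1.0, floor)  # soft gate: attenuate rather than zero out
    return istft(spec * gain, len(noisy))
```

Using a soft floor instead of hard zeroing is a common way to reduce the "musical noise" that aggressive gates leave behind.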

Adaptive Filtering Techniques

Unlike static filters that apply the same processing to all recordings, adaptive filters analyze each file’s unique characteristics and adjust their behavior accordingly. Wiener filtering uses estimates of the speech and noise power spectra to compute, for each frequency, the gain that minimizes the mean-square error between the filtered output and the clean speech, trading a small amount of speech distortion for substantial noise reduction.
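In the frequency domain the Wiener solution reduces to a per-bin gain G = ξ / (1 + ξ), where ξ is the ratio of speech power to noise power in that bin. The sketch below estimates ξ by simple power subtraction from a noise power estimate and applies a small gain floor. Both are simplifying assumptions (many implementations use the decision-directed estimator of Ephraim and Malah instead), and the function names are hypothetical.

```python
import numpy as np

def wiener_gains(noisy_power, noise_power, gain_floor=0.05):
    """Per-bin Wiener gains G = xi / (1 + xi) for one or more STFT frames.

    noisy_power: |Y(k)|^2 of the noisy spectrum.
    noise_power: estimated noise power per bin, e.g. averaged over speech-free frames.
    xi (speech-to-noise ratio) is approximated as max(|Y|^2 / noise - 1, 0).
    """
    xi = np.maximum(noisy_power / (noise_power + 1e-12) - 1.0, 0.0)
    gains = xi / (1.0 + xi)
    return np.maximum(gains, gain_floor)  # floor limits musical-noise artifacts

def wiener_enhance_frame(noisy_frame_fft, noise_power):
    """Apply the gains to one complex spectrum; the noisy phase is kept unchanged."""
    return noisy_frame_fft * wiener_gains(np.abs(noisy_frame_fft) ** 2, noise_power)
```

Because the noise power estimate is recomputed per file (or per stretch of a file), the same code adapts to each recording's conditions, which is what distinguishes it from a static filter.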

Kalman filtering provides another sophisticated approach, particularly effective for time-varying noise conditions where background sounds change throughout a conversation. These adaptive techniques require more computational resources but deliver superior results for challenging recordings that would otherwise fail indexing quality thresholds.
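Full Kalman-filter speech enhancement models speech as an autoregressive process and is too involved for a short example. The sketch below shows only the core predict/update recursion, applied to a simpler sub-problem assumed here for illustration: tracking a background noise level (in dB) that drifts during a call, which an adaptive noise reducer could then use as its noise estimate. `kalman_track`, the random-walk model, and the `q`/`r` values are assumptions.

```python
def kalman_track(measurements, q=0.01, r=4.0, x0=None, p0=1.0):
    """Scalar Kalman filter with a random-walk state model.

    measurements: per-frame noise-level readings in dB (jittery individual readings).
    q: process variance, i.e. how fast the true level may drift per frame.
    r: measurement variance, i.e. how noisy each reading is.
    Returns the filtered estimate after each measurement.
    """
    x = measurements[0] if x0 is None else x0
    p = p0
    estimates = []
    for z in measurements:
        p += q               # predict: the level may have drifted since the last frame
        k = p / (p + r)      # Kalman gain: how much to trust the new reading
        x += k * (z - x)     # update the estimate toward the reading
        p *= (1.0 - k)       # uncertainty shrinks after incorporating the reading
        estimates.append(x)
    return estimates
```

The ratio of `q` to `r` sets the trade-off: a larger `q` follows changing conditions faster, while a larger `r` smooths out frame-to-frame fluctuations.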

💡 Practical Workflow Integration Strategies

Technology alone cannot solve the noisy recording challenge without thoughtful integration into existing workflows. Establishing tiered processing workflows that route recordings through different enhancement pipelines based on initial quality assessments optimizes resource utilization while ensuring adequate processing for problematic files.

High-quality recordings proceed directly to indexing, while moderate-quality files receive standard noise reduction, and severely compromised recordings undergo intensive enhancement or manual review. This approach balances processing costs against business requirements, preventing unnecessary computation on already-acceptable audio.
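A tiered router can be as simple as thresholds on an initial quality score. In this sketch, assuming SNR is that score, the 20 dB upper cut reuses the target given earlier for reliable transcription, while the 10 dB lower cut, the tier names, and the `Recording` type are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Recording:
    call_id: str
    snr_db: float  # initial quality estimate computed at ingest

def route(rec, high_db=20.0, low_db=10.0):
    """Pick a processing tier from the initial SNR estimate."""
    if rec.snr_db >= high_db:
        return "index_directly"
    if rec.snr_db >= low_db:
        return "standard_noise_reduction"
    return "intensive_enhancement_or_review"
```

Keeping the thresholds as parameters makes it easy to tune them later against measured indexing accuracy per tier.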

Quality Feedback Loops for Continuous Improvement

Implementing feedback mechanisms allows indexing accuracy to improve over time. When transcription confidence scores fall below thresholds, flagging these recordings for manual review and correction creates training data that refines both noise reduction and speech recognition models.

Tracking which specific noise types cause the most indexing failures guides targeted infrastructure improvements. If outdoor mobile calls consistently underperform, for example, organizations might implement agent protocols encouraging customers to move to quieter locations or invest in better mobile-optimized codecs.
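Both feedback steps can be driven from the same data: a transcription confidence per call plus a noise-type label from whatever classifier or manual tagging the organization uses. A minimal sketch, assuming those fields already exist; `failure_report`, the 0.80 cutoff, and the labels are hypothetical.

```python
from collections import Counter

def failure_report(results, min_confidence=0.80):
    """Flag low-confidence transcripts for review and rank noise types by failure rate.

    results: iterable of (call_id, noise_type, transcript_confidence) tuples.
    """
    review_queue = []
    totals, failures = Counter(), Counter()
    for call_id, noise_type, confidence in results:
        totals[noise_type] += 1
        if confidence < min_confidence:
            failures[noise_type] += 1
            review_queue.append(call_id)  # corrected transcripts become training data
    rates = {t: failures[t] / totals[t] for t in totals}
    ranked = sorted(rates.items(), key=lambda kv: kv[1], reverse=True)
    return review_queue, ranked
```

The top of the ranked list points to where infrastructure or process changes are likely to pay off first.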

🌟 Future Trends in Clean Audio Capture

Emerging technologies promise even more effective solutions for the noise challenge. Spatial audio processing using beamforming techniques focuses recording on specific directions, essentially creating virtual directional microphones through multi-element arrays. This technology, already common in smart speakers, is gradually entering professional communication systems.
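Delay-and-sum is the simplest beamformer: each microphone's signal is shifted by the time the target wavefront takes to reach it, then the channels are averaged, so sound from the steered direction adds coherently while uncorrelated noise partially cancels. A sketch assuming a uniform linear array, NumPy, and whole-sample delays; the function name and array geometry are hypothetical.

```python
import numpy as np

SPEED_OF_SOUND = 343.0  # m/s, roughly room temperature

def delay_and_sum(channels, fs, spacing_m, angle_deg):
    """Steer a uniform linear mic array toward angle_deg (0 = broadside).

    channels: array of shape (n_mics, n_samples). Delays are rounded to whole
    samples, a simplification; practical beamformers use fractional delays.
    """
    n_mics, n_samples = channels.shape
    tau = np.arange(n_mics) * spacing_m * np.sin(np.radians(angle_deg)) / SPEED_OF_SOUND
    shifts = np.round(tau * fs).astype(int)
    shifts -= shifts.min()  # make every shift non-negative
    out = np.zeros(n_samples)
    for ch, s in zip(channels, shifts):
        # Advance each channel by its arrival delay so the target wavefront lines up
        out[:n_samples - s] += ch[s:]
    return out / n_mics
```

With four microphones and noise that is independent at each one, averaging cuts the noise power to roughly a quarter (about 6 dB); correlated room noise is reduced less.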

Generative AI models represent another frontier, capable of not just removing noise but actually reconstructing missing or corrupted speech segments based on linguistic context and voice characteristics. These systems analyze conversation flow and speaker patterns to predict and regenerate damaged audio portions, although the regenerated segments are synthesized rather than recorded, which matters wherever recordings serve as compliance or dispute evidence.

Edge Computing for Distributed Processing

Processing noise reduction at the network edge rather than centralized servers reduces latency and bandwidth requirements while improving privacy by keeping audio data local. Edge AI accelerators enable sophisticated neural network inference on recording devices themselves, delivering real-time enhancement without cloud dependency.

This distributed architecture scales more efficiently than centralized approaches, particularly for organizations with multiple locations or remote workforces. As edge computing hardware becomes more capable and affordable, expect wider adoption of this processing model.

🚀 Building a Comprehensive Noise Management Program

Sustainable success requires treating noise management as an ongoing program rather than a one-time project. Regular equipment audits identify aging components that degrade audio quality before they significantly impact operations. Scheduled acoustic assessments of physical environments catch problems like new noise sources from facility changes or nearby construction.

Employee training ensures agents understand their role in recording quality. Simple practices like proper headset positioning, minimizing paper handling during calls, and being aware of environmental noise contribute meaningfully to baseline audio quality that technical solutions build upon.

Documentation of noise sources, mitigation strategies, and effectiveness measurements creates institutional knowledge that prevents recurring problems. When staff changes or systems evolve, this documentation ensures continuity of quality standards and accelerates troubleshooting when issues arise.

✨ Transforming Challenges Into Competitive Advantages

Organizations that master noisy recording management gain significant competitive advantages. Superior call indexing accuracy enables more effective quality monitoring, better compliance verification, and deeper customer insights that drive business decisions. These capabilities translate directly into improved customer satisfaction, reduced regulatory risk, and enhanced operational efficiency.

The investment in noise reduction technology and processes pays dividends across multiple business functions. Sales teams gain access to better market intelligence from customer conversations, training programs improve through clearer call examples, and dispute resolution becomes more reliable with high-quality recording evidence.

As voice interactions continue growing in business importance, the ability to extract reliable information from every conversation regardless of recording conditions separates industry leaders from competitors still struggling with basic transcription accuracy. The techniques and technologies discussed here provide the foundation for building that capability within your organization.

By approaching noise management systematically with proper infrastructure, advanced processing technologies, and continuous improvement processes, businesses transform what was once a frustrating limitation into a robust capability that enhances decision-making and customer understanding across the entire organization.

Toni Santos is a bioacoustic researcher and conservation technologist specializing in the study of animal communication systems, acoustic monitoring infrastructures, and the sonic landscapes embedded in natural ecosystems. Through an interdisciplinary and sensor-focused lens, Toni investigates how wildlife encodes behavior, territory, and survival into the acoustic world, across species, habitats, and conservation challenges. His work is grounded in a fascination with animals not only as lifeforms, but as carriers of acoustic meaning. From endangered vocalizations to soundscape ecology and bioacoustic signal patterns, Toni uncovers the technological and analytical tools through which researchers preserve their understanding of the acoustic unknown.

With a background in applied bioacoustics and conservation monitoring, Toni blends signal analysis with field-based research to reveal how sounds are used to track presence, monitor populations, and decode ecological knowledge. As the creative mind behind Nuvtrox, Toni curates indexed communication datasets, sensor-based monitoring studies, and acoustic interpretations that revive the deep ecological ties between fauna, soundscapes, and conservation science.

His work is a tribute to the archived vocal diversity of Animal Communication Indexing, the tracked movements of Applied Bioacoustics Tracking, the ecological richness of Conservation Soundscapes, and the layered detection networks of Sensor-based Monitoring. Whether you're a bioacoustic analyst, conservation researcher, or curious explorer of acoustic ecology, Toni invites you to explore the hidden signals of wildlife communication: one call, one sensor, one soundscape at a time.