Deploying sensors without a robust data pipeline is like building a highway without exit ramps: the data flows, but it has nowhere useful to go.

Modern sensor networks generate massive volumes of real-time data across industries from manufacturing to smart cities. The challenge isn’t just collecting this information—it’s ensuring every byte travels seamlessly from source to actionable insight. Advanced data pipelines have become the critical infrastructure that separates successful sensor deployments from expensive failures that overwhelm systems and frustrate stakeholders.

Organizations investing millions in sensor hardware often overlook the architectural backbone that makes these investments worthwhile. Without proper data flow management, even the most sophisticated sensors become isolated islands of information, unable to contribute to broader intelligence systems. This article explores how strategic pipeline design transforms sensor deployments from data-generating expenses into value-creating assets.

🔧 Understanding the Anatomy of Sensor Data Pipelines

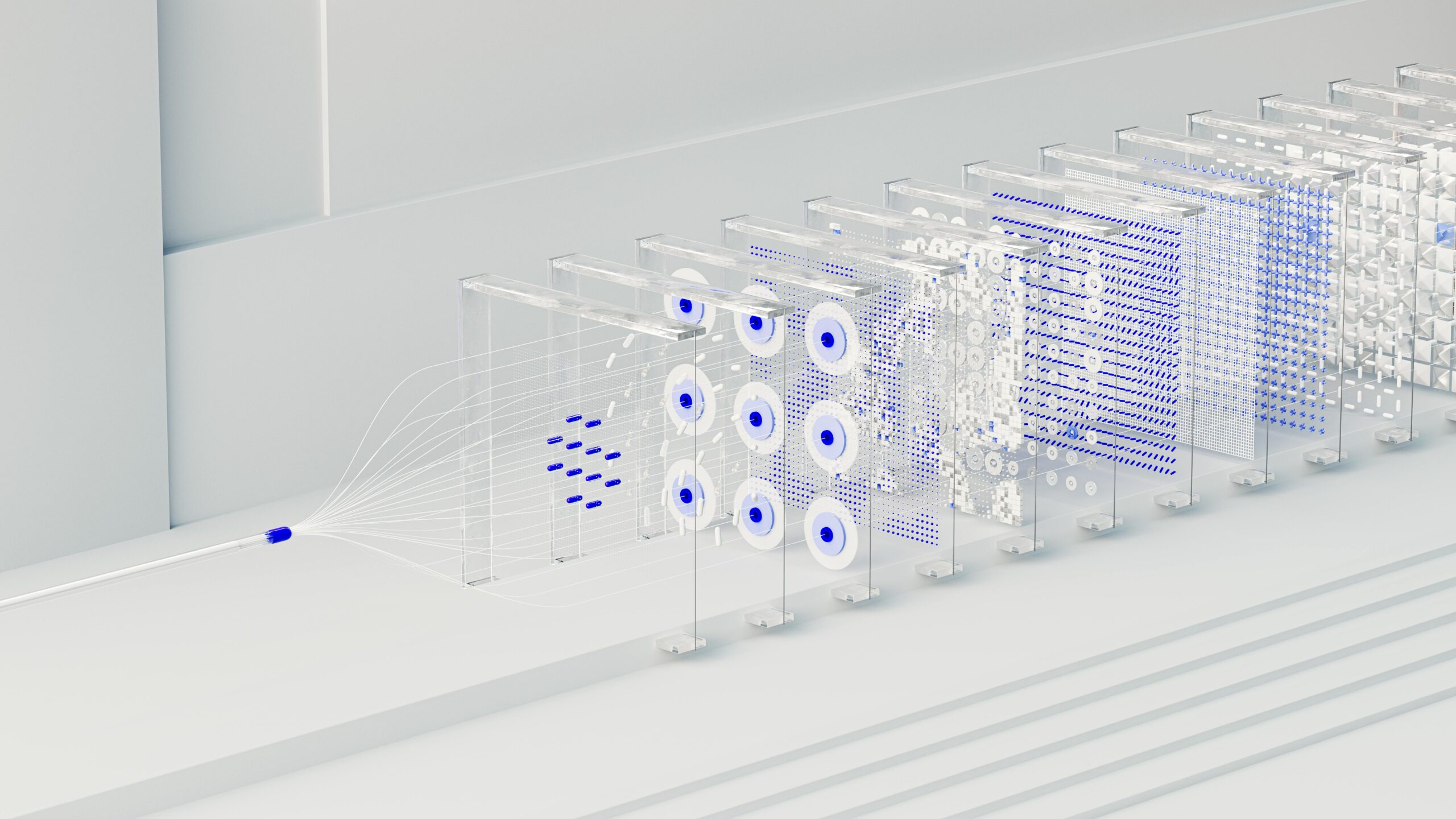

A sensor data pipeline encompasses the entire journey of information from physical measurement to business decision. This journey involves multiple stages, each requiring careful engineering to maintain data integrity, minimize latency, and scale appropriately as sensor networks expand.

The ingestion layer serves as the first critical checkpoint where raw sensor signals enter the digital ecosystem. This stage must handle diverse protocols—MQTT, CoAP, HTTP, and proprietary formats—while managing connection instability common in remote deployments. Edge computing capabilities at this stage can dramatically reduce bandwidth requirements by preprocessing data before transmission.

Following ingestion, the transformation layer cleanses, normalizes, and enriches sensor data. Unit conversions such as Celsius to Fahrenheit, timestamp synchronization across time zones, and validation against expected ranges all occur here. This stage prevents corrupted or anomalous data from contaminating downstream analytics.
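To make the transformation step concrete, here is a minimal sketch covering the three operations mentioned above: range validation, timestamp normalization to UTC, and unit conversion. The `Reading` type, the `normalize` function, and the `MIN_C`/`MAX_C` bounds are illustrative assumptions, not part of any particular platform.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional

# Hypothetical plausible range for an ambient temperature sensor, in Celsius.
MIN_C, MAX_C = -40.0, 85.0


@dataclass
class Reading:
    sensor_id: str
    timestamp: datetime  # expected to be timezone-aware
    celsius: float


def normalize(reading: Reading) -> Optional[dict]:
    """Return a cleaned record, or None if the reading fails validation."""
    if reading.timestamp.tzinfo is None:
        return None  # a naive timestamp cannot be synchronized reliably
    if not (MIN_C <= reading.celsius <= MAX_C):
        return None  # out of range (this also rejects NaN)
    return {
        "sensor_id": reading.sensor_id,
        "timestamp_utc": reading.timestamp.astimezone(timezone.utc).isoformat(),
        "celsius": reading.celsius,
        "fahrenheit": reading.celsius * 9 / 5 + 32,
    }


# A reading stamped in a UTC+2 zone is normalized to UTC.
local = datetime(2024, 1, 1, 12, 0, tzinfo=timezone(timedelta(hours=2)))
record = normalize(Reading("t1", local, 25.0))
```

Rejected readings return `None` here for brevity; a real pipeline would more likely route them to a quarantine stream so they can be inspected later.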

The storage architecture determines how quickly historical data can be retrieved and analyzed. Time-series databases optimized for sensor data offer compression ratios and query speeds impossible with traditional relational databases. Choosing between hot, warm, and cold storage tiers based on access patterns directly impacts both performance and operational costs.
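A tiering policy can be as simple as a function of data age. The sketch below assumes access frequency falls off with age; the 7-day and 90-day cutoffs are placeholder values that would need to come from your own access patterns.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical cutoffs; tune them to observed query patterns.
HOT_WINDOW = timedelta(days=7)
WARM_WINDOW = timedelta(days=90)


def storage_tier(recorded_at: datetime, now: datetime) -> str:
    """Pick a storage tier from the age of a data point."""
    age = now - recorded_at
    if age <= HOT_WINDOW:
        return "hot"
    if age <= WARM_WINDOW:
        return "warm"
    return "cold"


now = datetime(2024, 6, 1, tzinfo=timezone.utc)
tiers = [storage_tier(now - timedelta(days=d), now) for d in (1, 30, 365)]
```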

📊 Scaling Challenges in High-Volume Sensor Environments

When sensor deployments grow from dozens to thousands of devices, data volumes grow at least proportionally, and faster still when sampling rates rise as well. A single industrial facility might generate terabytes of sensor data daily, creating bottlenecks that traditional architectures cannot accommodate without significant re-engineering.

Horizontal scaling strategies become essential as vertical scaling reaches physical limits. Distributed streaming platforms like Apache Kafka and Apache Pulsar partition data across multiple nodes so that consumers can process it in parallel, which can keep latency low even as data volumes multiply. Replication in these systems provides the fault tolerance necessary for mission-critical sensor applications where data loss is unacceptable.

Network bandwidth limitations frequently constrain sensor deployment success, particularly in geographically dispersed installations. Edge processing reduces transmission requirements by performing local aggregation and filtering. A temperature sensor array might transmit only statistical summaries and anomalies rather than continuous raw readings, potentially cutting bandwidth needs by 90% or more.
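As a rough illustration of edge aggregation, the sketch below collapses a window of raw readings into one summary message and keeps only the values that fall outside an expected band. The function name `summarize_window` and the `low`/`high` band are assumptions for the example; the actual saving depends on window size and how often anomalies occur.

```python
from statistics import mean


def summarize_window(readings, low, high):
    """Collapse one window of raw readings into a summary plus out-of-band values."""
    if not readings:
        raise ValueError("window is empty")
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": mean(readings),
        # Only readings outside the expected band are forwarded individually.
        "anomalies": [x for x in readings if not (low <= x <= high)],
    }


# Example: 60 one-second readings become a single message.
window = [20.0] * 59 + [80.0]
summary = summarize_window(window, low=-10.0, high=50.0)
```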

Handling Data Velocity and Variety

Different sensor types generate data at wildly different rates. Vibration sensors in predictive maintenance applications may sample thousands of times per second, while environmental sensors might report hourly. Pipelines must accommodate this velocity variance without creating resource contention between high- and low-frequency streams.

Data variety presents equal challenges as different sensors produce structured readings, unstructured logs, binary blobs, and video feeds. A unified pipeline architecture must provide appropriate handling for each data type while maintaining consistent monitoring and management interfaces. Schema registries and data contracts help maintain order as sensor diversity increases.
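A data contract can start out as little more than a mapping from field names to expected types, checked at the pipeline boundary. The sketch below is a hand-rolled illustration, not the API of any schema registry product; `VIBRATION_CONTRACT` and `violations` are hypothetical names.

```python
from typing import Any

# Hypothetical contract for a vibration sensor: field name -> required type.
VIBRATION_CONTRACT = {"sensor_id": str, "timestamp": float, "rms_g": float}


def violations(record: dict[str, Any], contract: dict[str, type]) -> list[str]:
    """List every way a record breaks its contract (empty list means valid)."""
    problems = []
    for field, expected in contract.items():
        if field not in record:
            problems.append(f"missing field: {field}")
        elif not isinstance(record[field], expected):
            actual = type(record[field]).__name__
            problems.append(f"{field}: expected {expected.__name__}, got {actual}")
    return problems


good = {"sensor_id": "v7", "timestamp": 1700000000.0, "rms_g": 0.42}
bad = {"sensor_id": "v7", "rms_g": "0.42"}
```

Returning every violation at once, rather than failing on the first, tends to make misbehaving devices easier to diagnose.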

⚡ Real-Time Processing for Immediate Action

Many sensor applications demand millisecond-level response times. Fire detection systems, autonomous vehicle sensors, and industrial safety monitors cannot wait for batch processing cycles. Stream processing engines enable continuous computation on data in motion, triggering alerts and automated responses before data even reaches permanent storage.

Complex event processing identifies patterns across multiple sensor streams simultaneously. A smart building system might correlate occupancy sensors, HVAC temperatures, and air quality monitors to optimize environmental conditions automatically. These multi-stream correlations require sophisticated windowing and stateful processing capabilities beyond simple threshold monitoring.
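The windowing idea can be sketched without a stream processing engine. The example below groups events from several sensor types into fixed 60-second (tumbling) windows per room, then applies a rule that needs two streams at once. It works on a finished list rather than a live stream, and the metric names, `WINDOW_SECONDS`, and the CO2 limit are all assumptions.

```python
from collections import defaultdict

WINDOW_SECONDS = 60  # hypothetical window length


def tumbling_windows(events):
    """Average each metric per (room, window_start) bucket.

    Each event is a (timestamp_seconds, room, metric, value) tuple.
    """
    buckets = defaultdict(lambda: defaultdict(list))
    for ts, room, metric, value in events:
        window_start = int(ts // WINDOW_SECONDS) * WINDOW_SECONDS
        buckets[(room, window_start)][metric].append(value)
    return {
        key: {metric: sum(vals) / len(vals) for metric, vals in metrics.items()}
        for key, metrics in buckets.items()
    }


def needs_ventilation(window, co2_limit=1000.0):
    """Rule that correlates two streams: the room is occupied AND CO2 is high."""
    return window.get("occupancy", 0) > 0 and window.get("co2_ppm", 0) > co2_limit


events = [
    (0, "a", "occupancy", 3),
    (10, "a", "co2_ppm", 1200.0),
    (30, "a", "co2_ppm", 1400.0),
    (70, "a", "co2_ppm", 500.0),
]
windows = tumbling_windows(events)
```

A production engine would also have to deal with late and out-of-order events, which this sketch ignores.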

Machine learning models deployed directly within data pipelines enable predictive capabilities at the edge. Anomaly detection algorithms learn normal sensor behavior patterns and flag deviations immediately, often before human operators recognize problems. This embedded intelligence transforms reactive monitoring into proactive maintenance strategies.
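One of the simplest forms of "learning normal behavior" is a rolling z-score, light enough to run on an edge device. The sketch below is a statistical baseline rather than a trained model; the class name and the `window`, `threshold`, and `min_samples` defaults are illustrative.

```python
from collections import deque
from statistics import mean, stdev


class RollingAnomalyDetector:
    """Flag values far from the recent mean, measured in standard deviations."""

    def __init__(self, window=50, threshold=3.0, min_samples=10):
        self.history = deque(maxlen=window)
        self.threshold = threshold
        self.min_samples = min_samples

    def observe(self, value: float) -> bool:
        is_anomaly = False
        if len(self.history) >= self.min_samples:
            mu = mean(self.history)
            sigma = stdev(self.history)
            if sigma > 0 and abs(value - mu) > self.threshold * sigma:
                is_anomaly = True
        if not is_anomaly:
            # Keep anomalies out of the baseline so they do not skew it.
            self.history.append(value)
        return is_anomaly


detector = RollingAnomalyDetector()
warmup = [detector.observe(20.0 + 0.2 * (i % 2)) for i in range(20)]
spike = detector.observe(35.0)
after = detector.observe(20.1)
```

Excluding flagged values from the history is a design choice: it keeps the baseline stable, but a genuine shift in operating conditions would keep triggering alerts until the detector is reset.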

🔐 Security and Compliance in Sensor Data Flows

Sensor data often contains sensitive information requiring protection throughout its lifecycle. Healthcare wearables, surveillance systems, and industrial control sensors generate data subject to regulatory requirements like HIPAA, GDPR, and sector-specific mandates. Pipeline architecture must implement security controls at every stage.

Encryption in transit protects data as it moves from sensors through networks to central systems. TLS (the successor to the now-deprecated SSL) guards against interception and tampering, while certificate-based authentication ensures only authorized devices transmit data. Edge devices with limited computational resources require lightweight encryption schemes that maintain security without draining battery life.
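With Python's standard `ssl` module, a device-side TLS configuration with optional client certificates might look like the sketch below. The function name and the `ca_file`, `cert_file`, and `key_file` parameters are hypothetical; how the resulting context is handed to an MQTT or HTTP client depends on the library in use.

```python
import ssl


def make_device_tls_context(ca_file=None, cert_file=None, key_file=None):
    """Build a client-side TLS context that verifies the server.

    If cert_file is given, the device also presents its own certificate,
    which enables certificate-based (mutual) authentication.
    """
    # With ca_file=None the system's default trust store is used.
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH, cafile=ca_file)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # refuse older protocol versions
    if cert_file:
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    return ctx


ctx = make_device_tls_context()
```

`create_default_context` already turns on certificate verification and hostname checking, so the sketch only tightens the minimum protocol version.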

Data governance frameworks track lineage from sensor source through every transformation to final storage. Audit trails documenting who accessed what data when become essential for compliance verification. Automated policy enforcement ensures sensitive data receives appropriate handling without relying on manual processes prone to human error.

Privacy-Preserving Pipeline Techniques

Differential privacy mechanisms allow organizations to extract valuable insights while protecting individual privacy. Aggregating sensor data and adding calibrated statistical noise makes it difficult to identify specific individuals while preserving the accuracy of overall patterns. These techniques enable smart city deployments without creating mass surveillance infrastructure.
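The classic instance of this idea is the Laplace mechanism applied to a count. The sketch below assumes a counting query with sensitivity 1 (one person changes the count by at most 1) and draws Laplace noise as the difference of two exponential samples. The function name is illustrative, and `random` is used only for demonstration; it is not a cryptographically secure source.

```python
import random


def private_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Return the count plus Laplace noise with scale 1 / epsilon."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    # Laplace scale b = sensitivity / epsilon = 1 / epsilon, so the
    # exponential rate is 1 / b = epsilon. The difference of two
    # independent Exp(rate) draws is Laplace-distributed with scale b.
    rate = epsilon
    noise = rng.expovariate(rate) - rng.expovariate(rate)
    return true_count + noise


rng = random.Random(42)
samples = [private_count(100, epsilon=1.0, rng=rng) for _ in range(20000)]
average = sum(samples) / len(samples)
```

Smaller `epsilon` values add more noise and give stronger privacy. Individual releases are noisy, but the noise is centered on zero, which is why aggregate patterns survive.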

Data minimization principles reduce risk by collecting only necessary information. Pipeline filters eliminate unnecessary data fields before storage, limiting exposure in potential breaches. Automated retention policies delete data once its usefulness expires, reducing both storage costs and compliance burden.
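Both ideas reduce to small filters. In the sketch below, `ALLOWED_FIELDS` and the 30-day `RETENTION` period are placeholder policy values; real ones would come from your compliance requirements.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical policy values.
ALLOWED_FIELDS = {"sensor_id", "timestamp", "value"}
RETENTION = timedelta(days=30)


def minimize(record: dict) -> dict:
    """Drop every field that is not explicitly allowed before storage."""
    return {k: v for k, v in record.items() if k in ALLOWED_FIELDS}


def expired(record: dict, now: datetime) -> bool:
    """True once a record has outlived the retention period."""
    return now - record["timestamp"] > RETENTION


now = datetime(2024, 6, 1, tzinfo=timezone.utc)
raw = {
    "sensor_id": "w3",
    "timestamp": now - timedelta(days=45),
    "value": 71,
    "wearer_name": "example",  # not needed downstream, so it is dropped
}
stored = minimize(raw)
```

An allowlist is the safer default: a new field added by a firmware update is dropped until someone decides it is needed.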

💡 Optimizing Pipeline Performance and Reliability

Performance optimization begins with understanding bottlenecks through comprehensive monitoring. Metrics tracking throughput, latency, error rates, and resource utilization reveal where pipelines struggle under load. Observability platforms designed for distributed systems provide the visibility necessary for informed optimization decisions.

Backpressure management prevents upstream data sources from overwhelming downstream processing capacity. When consumers cannot keep pace with producers, intelligent buffering and flow control mechanisms prevent data loss while signaling sources to reduce transmission rates. These feedback loops maintain system stability during usage spikes.
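A bounded queue is the smallest building block for this. In the sketch below, a full buffer makes `offer` return `False`, which the producer can treat as a signal to slow down or aggregate locally. The `offer` helper and its timeout are illustrative choices.

```python
import queue


def offer(q: queue.Queue, item, timeout: float = 0.01) -> bool:
    """Try to enqueue; return False if the buffer stays full for `timeout` seconds."""
    try:
        q.put(item, timeout=timeout)
        return True
    except queue.Full:
        return False  # backpressure signal: the consumer is not keeping up


buffer = queue.Queue(maxsize=2)  # a small bound makes the effect easy to see
accepted = [offer(buffer, n) for n in (1, 2, 3)]
buffer.get()  # the consumer drains one item
accepted_after_drain = offer(buffer, 3)
```

What the producer does after a `False` is a policy decision: retry later, lower its sampling rate, or spill to local storage.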

Redundancy and failover strategies ensure continuity when components fail. Replicated stream processors, multi-region storage, and automatic recovery procedures minimize downtime. Geographic distribution protects against localized failures while enabling lower latency for globally dispersed sensor networks.

Cost Management in Cloud-Based Pipelines

Cloud infrastructure offers elastic, near-unlimited scaling but requires careful cost management. Ingesting terabytes of sensor data monthly can generate substantial charges across network transfer, compute, and storage dimensions. Reserved capacity commitments, spot instances, and tiered storage policies can substantially reduce expenses without sacrificing capability.

Data compression techniques tailored to sensor characteristics achieve significant savings. Time-series compression algorithms exploit the temporal correlation inherent in sensor readings, often achieving 10x or better compression ratios. Custom encoding schemes for specific sensor types outperform generic compression approaches.
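Delta encoding is the simplest way to see how temporal correlation helps: consecutive readings are close together, so the differences between them are small numbers that a later encoding stage can store in fewer bits. The sketch below shows only the delta step, on integer readings (for example, hundredths of a degree). Time-series databases typically use more elaborate variants.

```python
from itertools import accumulate


def delta_encode(values: list[int]) -> list[int]:
    """Keep the first value, then store each change from the previous value."""
    if not values:
        return []
    return [values[0]] + [b - a for a, b in zip(values, values[1:])]


def delta_decode(deltas: list[int]) -> list[int]:
    """Rebuild the original series with a running sum."""
    return list(accumulate(deltas))


readings = [2150, 2151, 2151, 2153, 2152]  # e.g. 21.50 C stored as an integer
encoded = delta_encode(readings)           # [2150, 1, 0, 2, -1]
decoded = delta_decode(encoded)
```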

🌐 Integration with Broader Data Ecosystems

Sensor data rarely provides value in isolation. Integration with enterprise systems—ERP, CRM, business intelligence platforms—enables contextual analysis that transforms raw measurements into business insights. APIs and standardized data formats facilitate these connections without creating brittle point-to-point integrations.

Data lakes and warehouses provide centralized repositories where sensor data joins with transactional, operational, and external data sources. This consolidation enables analytics impossible when data remains siloed. However, careful schema design prevents data swamps where information becomes inaccessible due to poor organization.

Streaming data warehouses blur the line between real-time pipelines and analytical databases. These hybrid systems support both continuous queries on live data and historical analysis, eliminating the latency inherent in traditional ETL batch processes. Organizations gain both operational visibility and strategic insights from the same infrastructure.

🚀 Emerging Technologies Shaping Pipeline Evolution

Serverless computing architectures eliminate infrastructure management burden by automatically scaling pipeline components in response to load. Function-as-a-Service platforms execute data transformation logic without provisioning servers, reducing operational overhead while maintaining performance. Cost models charging per execution rather than continuous runtime align expenses with actual usage.

5G networks enable sensor deployments previously impossible due to connectivity constraints. Ultra-low latency and massive device connectivity support applications like autonomous vehicle coordination and augmented reality systems requiring millisecond response times. Pipeline architectures must evolve to exploit these enhanced network capabilities.

Quantum computing may eventually bring advances in sensor data analysis, particularly for optimization problems involving thousands of variables. The technology is still emerging and unproven at this scale, but quantum algorithms could one day help optimize complex systems like traffic networks or power grids in ways that are impractical for classical computers.

AI-Enhanced Pipeline Management

Artificial intelligence increasingly manages pipelines themselves, not just the data flowing through them. Machine learning models predict resource requirements, automatically scaling infrastructure before demand spikes occur. Anomaly detection identifies pipeline degradation before it causes failures, enabling proactive intervention.

AutoML platforms automatically train and deploy models on sensor data streams without data science expertise. These accessible tools democratize advanced analytics, allowing domain experts to extract insights without mastering complex statistical techniques. Pipeline-integrated AutoML continuously retrains models as sensor behavior evolves.

🎯 Best Practices for Implementation Success

Starting with pilot projects allows organizations to validate pipeline architectures before full-scale deployment. A limited sensor subset reveals integration challenges, performance characteristics, and skill gaps while limiting financial risk. Lessons learned inform production rollout strategies and prevent expensive mistakes.

Documentation and standardization prevent technical debt accumulation as pipelines grow more complex. Clear data contracts specify expected formats, update frequencies, and quality standards. Configuration management ensures reproducible deployments across development, testing, and production environments.

Cross-functional collaboration between sensor engineers, data engineers, and business stakeholders ensures pipelines deliver actual value. Regular feedback loops verify that collected data supports intended use cases. Iterative refinement based on real-world experience produces more effective systems than theoretical designs developed in isolation.

Monitoring and Continuous Improvement

Comprehensive monitoring extends beyond technical metrics to business KPIs measuring pipeline effectiveness. Are decisions based on sensor data producing expected outcomes? Do stakeholders actually use provided dashboards and alerts? These questions reveal whether technical success translates to business value.

Continuous improvement processes systematically address identified shortcomings. Regular retrospectives examine pipeline incidents and near-misses, identifying preventive measures. Performance benchmarking against industry standards reveals optimization opportunities often invisible from internal perspectives alone.

🔄 Future-Proofing Your Sensor Infrastructure

Technology evolution continues accelerating, making future-proofing essential for long-lived sensor deployments. Abstraction layers isolating business logic from infrastructure specifics enable technology swaps without application rewrites. Standard protocols and open-source components prevent vendor lock-in that could become costly constraints.

Modular architecture allows incremental upgrades rather than disruptive full replacements. Well-defined interfaces between pipeline components enable swapping implementations as better alternatives emerge. This flexibility extends system lifespan and protects initial investments as requirements evolve.
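In code, a well-defined interface can be a small protocol that every implementation satisfies. The sketch below uses `typing.Protocol`; `ReadingSink` and the two sink classes are hypothetical stand-ins for, say, a time-series database writer and a metrics counter.

```python
from typing import Protocol


class ReadingSink(Protocol):
    """Anything that can accept one record; the pipeline depends only on this."""

    def write(self, record: dict) -> None: ...


class InMemorySink:
    def __init__(self) -> None:
        self.records: list[dict] = []

    def write(self, record: dict) -> None:
        self.records.append(record)


class CountingSink:
    def __init__(self) -> None:
        self.count = 0

    def write(self, record: dict) -> None:
        self.count += 1


def store_all(records: list[dict], sink: ReadingSink) -> None:
    """Pipeline code that stays unchanged when the sink implementation is swapped."""
    for record in records:
        sink.write(record)


batch = [{"sensor_id": "a", "value": 1}, {"sensor_id": "b", "value": 2}]
memory, counter = InMemorySink(), CountingSink()
store_all(batch, memory)
store_all(batch, counter)
```

Neither sink inherits from `ReadingSink`; matching the method signature is enough, which keeps implementations decoupled from the pipeline code.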

Building internal expertise ensures organizations can maintain and evolve pipelines independently. While external consultants accelerate initial implementation, knowledge transfer programs develop self-sufficiency. Documentation, training, and hands-on experience create teams capable of managing complex sensor infrastructures long-term.

The convergence of affordable sensors, ubiquitous connectivity, and powerful data processing capabilities creates unprecedented opportunities for data-driven decision making. Organizations that master advanced pipeline architectures position themselves to extract maximum value from sensor investments while maintaining the flexibility to adapt as technology and business requirements evolve. Success requires viewing pipelines not as technical plumbing but as strategic infrastructure deserving the same attention as the sensors themselves.

Efficient data flow transforms sensor deployments from isolated measurement devices into integrated intelligence networks. By implementing robust pipelines handling ingestion, processing, storage, and analysis at scale, organizations unlock insights impossible through manual data handling. The advantage comes not from sensors alone but from the architecture that turns raw measurements into intelligence, driving better decisions faster than competitors can match.

Toni Santos is a bioacoustic researcher and conservation technologist specializing in the study of animal communication systems, acoustic monitoring infrastructures, and the sonic landscapes embedded in natural ecosystems. Through an interdisciplinary and sensor-focused lens, Toni investigates how wildlife encodes behavior, territory, and survival into the acoustic world, across species, habitats, and conservation challenges.

His work is grounded in a fascination with animals not only as lifeforms, but as carriers of acoustic meaning. From endangered vocalizations to soundscape ecology and bioacoustic signal patterns, Toni uncovers the technological and analytical tools through which researchers preserve their understanding of the acoustic unknown.

With a background in applied bioacoustics and conservation monitoring, Toni blends signal analysis with field-based research to reveal how sounds are used to track presence, monitor populations, and decode ecological knowledge. As the creative mind behind Nuvtrox, Toni curates indexed communication datasets, sensor-based monitoring studies, and acoustic interpretations that revive the deep ecological ties between fauna, soundscapes, and conservation science.

His work is a tribute to:

- The archived vocal diversity of Animal Communication Indexing
- The tracked movements of Applied Bioacoustics Tracking
- The ecological richness of Conservation Soundscapes
- The layered detection networks of Sensor-based Monitoring

Whether you're a bioacoustic analyst, conservation researcher, or curious explorer of acoustic ecology, Toni invites you to explore the hidden signals of wildlife communication: one call, one sensor, one soundscape at a time.