In today’s data-driven landscape, organizations face a critical decision: balancing automatic and manual annotation methods to optimize workflows while maintaining quality and efficiency in their data labeling processes.

🔄 Understanding the Annotation Landscape in Modern Workflows

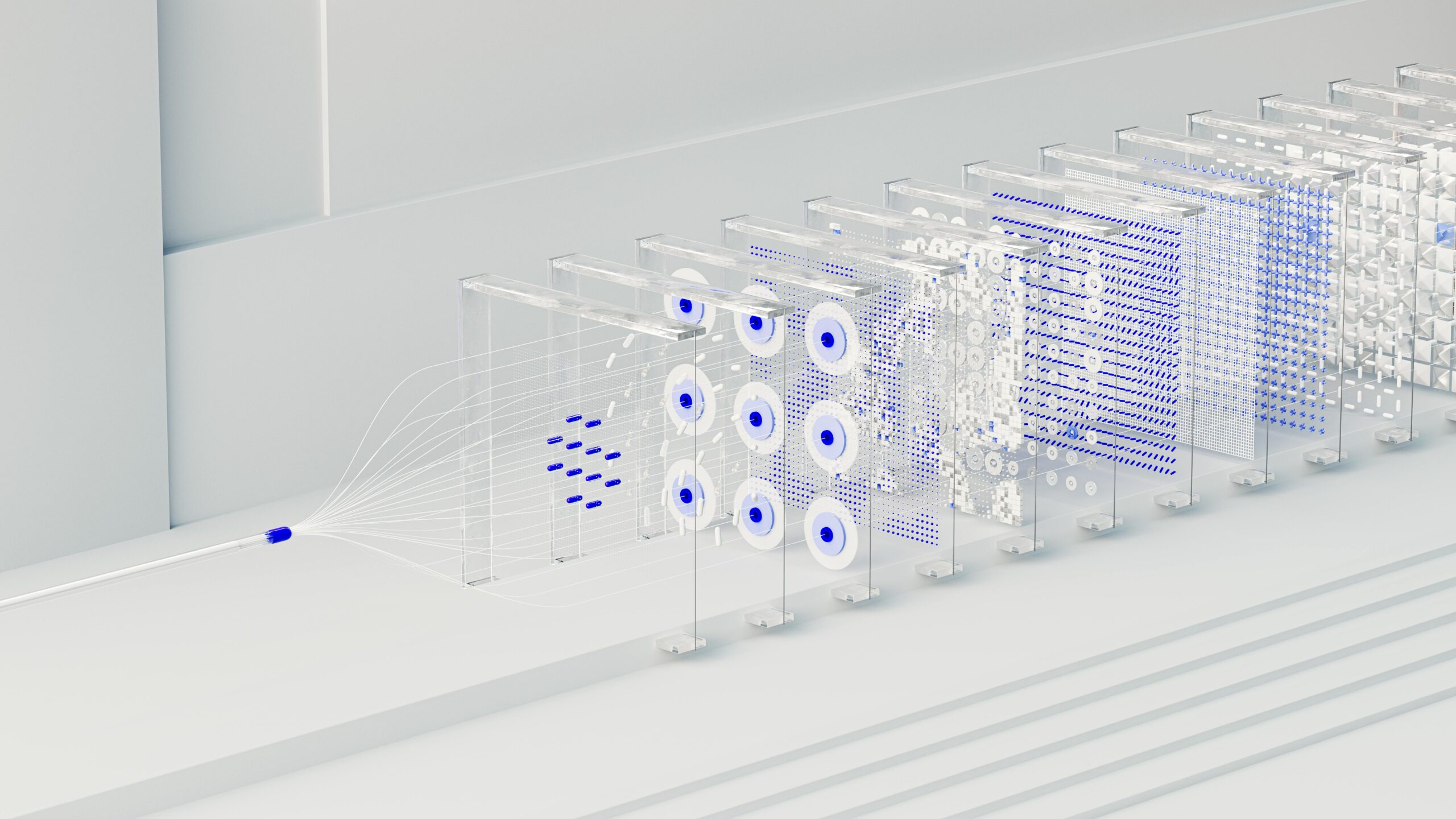

Data annotation has become the backbone of machine learning and artificial intelligence development. As businesses scale their AI initiatives, the challenge of processing vast amounts of data while maintaining accuracy has led to the emergence of hybrid annotation workflows. These systems combine the speed of automated tools with the nuanced judgment of human annotators, creating a balanced approach that addresses the limitations of either method alone.

The evolution of annotation strategies reflects the growing complexity of AI applications. From simple image classification to complex natural language processing tasks, the annotation requirements have diversified significantly. Organizations now recognize that a one-size-fits-all approach rarely delivers optimal results, prompting the adoption of more sophisticated hybrid methodologies.

⚡ The Power and Limitations of Automatic Annotation

Automatic annotation leverages machine learning algorithms and pre-trained models to label data without human intervention. This approach offers remarkable speed advantages, processing thousands of data points in minutes, a task that could take human annotators days or weeks. Automated systems also apply labeling rules uniformly, which removes the annotator-to-annotator variability that naturally occurs with human judgment, although any mistakes they make are applied just as consistently.

However, automatic annotation faces significant challenges. Complex contextual understanding, subtle nuances in language or imagery, and edge cases often confuse automated systems. These tools excel at straightforward, repetitive tasks but struggle with ambiguity and scenarios requiring cultural context or domain-specific expertise. The accuracy of automatic annotation heavily depends on the quality of training data and the sophistication of underlying models.

Key Strengths of Automated Systems

- Processing speed that scales roughly in proportion to the computational resources available

- Cost-effectiveness for large-volume, straightforward annotation tasks

- Consistent application of predefined rules and criteria

- Immediate availability without scheduling or coordination requirements

- Reduced human fatigue errors in repetitive labeling scenarios

Notable Weaknesses to Consider

- Limited contextual understanding beyond training parameters

- Difficulty handling novel situations not represented in training data

- Potential for systematic errors that propagate across large datasets

- Reduced flexibility when annotation requirements evolve

- Quality degradation with complex, subjective judgment tasks

👥 The Irreplaceable Value of Manual Annotation

Human annotators bring cognitive capabilities that remain unmatched by automated systems. The ability to understand context, recognize subtle patterns, and apply common sense reasoning makes manual annotation essential for complex tasks. Expert annotators provide domain-specific knowledge that enriches data quality, particularly in specialized fields like medical imaging, legal document analysis, or cultural content moderation.

Manual annotation ensures higher accuracy for nuanced tasks where context determines meaning. Human annotators can identify and flag ambiguous cases, provide explanatory notes, and adapt to evolving guidelines without requiring retraining. This flexibility proves invaluable in dynamic projects where annotation schemas undergo refinement based on emerging insights.

The quality assurance aspect of manual annotation cannot be overstated. Experienced annotators serve as the final arbiter of data quality, catching errors that automated systems miss and ensuring that labeled data meets the specific requirements of downstream applications. Their feedback often reveals systematic issues in data collection or annotation guidelines that improve overall workflow efficiency.

🔀 Architecting Effective Hybrid Annotation Workflows

The strategic integration of automatic and manual annotation creates workflows that harness the strengths of both approaches. Successful hybrid systems typically employ automatic annotation as a first-pass filter, rapidly processing large datasets and flagging items that require human review. This tiered approach maximizes throughput while maintaining quality standards.

Implementing confidence thresholds represents a critical design decision in hybrid workflows. Automatic annotations with high confidence scores proceed directly to the final dataset, while low-confidence predictions route to human annotators for verification. This selective human intervention focuses expertise where it delivers maximum value, optimizing both cost and accuracy.
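The routing rule described above can be sketched in a few lines. This is a minimal illustration rather than a reference implementation: the `Prediction` class, the `route` function, and the 0.9 threshold are all hypothetical, and it assumes the model exposes a confidence score between 0 and 1.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    item_id: str
    label: str
    confidence: float  # assumed model score in [0, 1]


def route(predictions, threshold=0.9):
    """Split predictions into an auto-accepted list and a human-review queue."""
    accepted, review = [], []
    for p in predictions:
        # High-confidence labels go straight to the dataset; the rest go to people.
        (accepted if p.confidence >= threshold else review).append(p)
    return accepted, review


preds = [
    Prediction("a", "cat", 0.97),
    Prediction("b", "dog", 0.62),
    Prediction("c", "cat", 0.91),
]
accepted, review = route(preds, threshold=0.9)
print([p.item_id for p in accepted])  # ['a', 'c']
print([p.item_id for p in review])    # ['b']
```

In practice the threshold is usually tuned on a held-out, human-verified sample, since raw model scores are not always well calibrated.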

Strategic Workflow Design Principles

Effective hybrid workflows incorporate continuous feedback loops where manual annotations improve automatic systems. Human corrections and additions serve as additional training data, progressively enhancing model performance. This iterative refinement creates a virtuous cycle where automation handles an increasing proportion of straightforward cases while human expertise addresses growing complexity.

Task complexity analysis should guide the allocation between automatic and manual annotation. Simple binary classifications with clear decision criteria suit automated processing, while tasks involving subjective judgment, cultural sensitivity, or specialized knowledge require human expertise. Mapping specific annotation types to appropriate methods prevents resource waste and quality compromises.

| Annotation Task Type | Recommended Primary Method | Secondary Support |
|---|---|---|
| Simple object detection | Automatic (85-95%) | Manual verification |
| Sentiment analysis | Hybrid (50-50%) | Context-dependent routing |
| Medical image diagnosis | Manual (primary) | Automatic pre-screening |
| Named entity recognition | Automatic (70-80%) | Manual edge case handling |
| Content moderation | Hybrid (40-60%) | Cultural context review |

📊 Measuring Success: Metrics That Matter

Optimizing hybrid workflows requires comprehensive performance measurement. Accuracy metrics remain fundamental, but efficiency optimization demands broader evaluation criteria. Throughput rates, cost per annotation, time-to-completion, and inter-annotator agreement all contribute to understanding workflow effectiveness.
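Inter-annotator agreement is often reported as Cohen's kappa, which corrects raw agreement for the agreement two annotators would reach by chance. The sketch below is one way to compute it for two annotators using only the standard library; the function name and the sample labels are illustrative.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both annotators must label the same non-empty item set")
    n = len(labels_a)
    # Observed agreement: fraction of items with identical labels.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: product of each annotator's label frequencies, summed.
    count_a, count_b = Counter(labels_a), Counter(labels_b)
    expected = sum(count_a[k] * count_b[k] for k in count_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1 - expected)


annotator_1 = ["pos", "pos", "neg", "neg"]
annotator_2 = ["pos", "neg", "neg", "neg"]
kappa = cohens_kappa(annotator_1, annotator_2)
print(kappa)  # 0.5
```

A value near 1 indicates strong agreement, while a value near 0 means the annotators agree no more often than chance would predict.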

Quality metrics should distinguish between different error types. False positives and false negatives carry different consequences depending on application context. In medical diagnosis applications, false negatives may prove more dangerous than false positives, influencing the confidence thresholds and human review triggers appropriate for the workflow.
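One way to make this asymmetry concrete is to count each error type separately and weight them differently. The sketch below assumes a binary task; the function names and the 5-to-1 cost ratio are hypothetical and would need to come from the application's own risk analysis.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Return (tp, fp, fn, tn) for a binary labeling task."""
    tp = fp = fn = tn = 0
    for truth, pred in zip(y_true, y_pred):
        if pred == positive and truth == positive:
            tp += 1
        elif pred == positive:
            fp += 1  # flagged, but actually negative
        elif truth == positive:
            fn += 1  # missed a real positive
        else:
            tn += 1
    return tp, fp, fn, tn


def weighted_error_cost(fp, fn, fp_cost=1.0, fn_cost=5.0):
    """Total error cost when a miss is assumed costlier than a false alarm."""
    return fp * fp_cost + fn * fn_cost


y_true = [1, 1, 1, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0]
tp, fp, fn, tn = confusion_counts(y_true, y_pred)
print(tp, fp, fn, tn)               # 2 1 1 2
print(weighted_error_cost(fp, fn))  # 6.0
```

Comparing this weighted cost across candidate confidence thresholds gives a simple basis for choosing when to trigger human review.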

Essential Performance Indicators

- Annotation accuracy rates across automatic and manual components

- Processing throughput measured in items per hour or day

- Cost efficiency calculated as expense per accurately labeled item

- Human review rate indicating automation effectiveness

- Error correction frequency revealing systematic issues

- Turnaround time from data ingestion to annotated output
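Several of these indicators can be derived from a handful of counters that most annotation pipelines already track. The following sketch uses hypothetical field names and made-up numbers purely to show the arithmetic.

```python
def workflow_kpis(total_items, human_reviewed, corrected, correct_final,
                  total_cost, hours):
    """Compute basic hybrid-workflow indicators from raw counters."""
    return {
        # Share of items that needed a human look.
        "human_review_rate": human_reviewed / total_items,
        # Share of reviewed items where the automatic label was changed.
        "correction_rate": corrected / human_reviewed if human_reviewed else 0.0,
        # Final accuracy, assuming correct_final comes from an audited sample.
        "accuracy": correct_final / total_items,
        "throughput_per_hour": total_items / hours,
        # Expense per accurately labeled item.
        "cost_per_accurate_item": total_cost / correct_final,
    }


kpis = workflow_kpis(
    total_items=1000, human_reviewed=200, corrected=50,
    correct_final=950, total_cost=475.0, hours=8,
)
for name, value in kpis.items():
    print(f"{name}: {value:.3f}")
```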

💰 Economic Considerations in Hybrid Systems

The financial case for hybrid annotation workflows extends beyond simple cost comparison. While automatic annotation offers lower per-item costs, quality failures can prove expensive downstream. Poor training data quality results in underperforming models that require costly retraining or produce unreliable predictions in production environments.

Manual annotation involves higher direct costs but often delivers superior return on investment for critical applications. The key lies in strategic resource allocation—applying expensive human expertise where it generates maximum value while leveraging automation for tasks where speed and consistency outweigh subtle judgment requirements.

Scaling considerations significantly impact economic analysis. Automatic annotation systems incur upfront investment in infrastructure and model development but offer favorable economics at scale. Manual annotation cost grows roughly linearly with volume, making it cost-prohibitive for massive datasets but economically sensible for smaller, high-value annotation projects.
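A simple break-even calculation illustrates the scaling argument. The model below is a deliberate simplification: it assumes a one-time fixed cost for automation, constant per-item costs for both methods, and ignores the downstream cost of quality differences. All figures are invented for illustration.

```python
def breakeven_volume(fixed_cost, auto_cost_per_item, manual_cost_per_item):
    """Item count at which automatic and manual total costs are equal."""
    saving_per_item = manual_cost_per_item - auto_cost_per_item
    if saving_per_item <= 0:
        return None  # automation never pays back under these assumptions
    return fixed_cost / saving_per_item


volume = breakeven_volume(
    fixed_cost=20_000,          # hypothetical setup and model development cost
    auto_cost_per_item=0.05,    # hypothetical compute cost per item
    manual_cost_per_item=0.30,  # hypothetical annotator cost per item
)
print(round(volume))  # about 80000 items
```

Below that volume, manual annotation is the cheaper option under these assumptions; above it, the upfront automation investment starts to pay off.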

🛠️ Technology Stack for Optimal Implementation

Building effective hybrid annotation workflows requires careful technology selection. Annotation platforms should support seamless integration of automated tools with human review interfaces. Quality management features, including task routing, confidence scoring, and annotation validation, form the foundation of efficient hybrid systems.

Machine learning frameworks that facilitate active learning strategies enhance hybrid workflow performance. These systems identify the most informative examples for human annotation, maximizing model improvement per human-labeled instance. This intelligent sampling approach reduces manual annotation volume while maintaining or improving model performance.
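Uncertainty sampling is one common active learning strategy: items whose predicted class probabilities are closest to uniform are sent to humans first. The sketch below ranks a pool by prediction entropy; the pool contents and function names are hypothetical, and real frameworks offer other selection criteria as well.

```python
import math


def entropy(probs):
    """Shannon entropy of a class-probability vector (natural log)."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_annotation(pool, k):
    """Pick the k items the model is least certain about.

    pool maps item_id -> list of predicted class probabilities.
    """
    ranked = sorted(pool, key=lambda item_id: entropy(pool[item_id]), reverse=True)
    return ranked[:k]


pool = {
    "x1": [0.98, 0.02],  # confident prediction
    "x2": [0.55, 0.45],  # nearly a coin flip
    "x3": [0.80, 0.20],
}
selected = select_for_annotation(pool, k=2)
print(selected)  # ['x2', 'x3']
```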

Data versioning and annotation tracking capabilities ensure reproducibility and enable quality audits. Comprehensive logging of annotation sources—whether automatic or manual—supports troubleshooting and continuous improvement initiatives. Integration with existing data infrastructure streamlines workflow implementation and reduces operational friction.
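As a rough illustration of source logging, each label can be stored with a small provenance record. The schema below is an assumption made for this example, not a standard: field names such as `source` and `annotator` are hypothetical, and a production system would likely add dataset and guideline version identifiers.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class AnnotationRecord:
    item_id: str
    label: str
    source: str                  # "automatic" or "manual"
    annotator: str               # model version or annotator ID
    confidence: Optional[float]  # None for manual labels
    created_at: str              # ISO 8601 timestamp in UTC


def make_record(item_id, label, source, annotator, confidence=None):
    if source not in {"automatic", "manual"}:
        raise ValueError(f"unknown annotation source: {source}")
    timestamp = datetime.now(timezone.utc).isoformat()
    return AnnotationRecord(item_id, label, source, annotator, confidence, timestamp)


def to_log_line(record):
    """Serialize a record as one JSON line for an append-only audit log."""
    return json.dumps(asdict(record), sort_keys=True)


record = make_record("img-001", "cat", "automatic", "model-v3", confidence=0.93)
line = to_log_line(record)
print(line)
```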

🎯 Industry-Specific Optimization Strategies

Healthcare applications demand extremely high accuracy, positioning manual annotation as primary with automated pre-processing support. Radiological image analysis benefits from automatic detection highlighting potential areas of concern, followed by expert physician review and final diagnosis. This approach combines efficiency with the precision essential for medical decision-making.

E-commerce product cataloging presents different optimization opportunities. Automatic annotation efficiently processes standard product attributes like color, size, and category, while manual review handles subjective descriptions, quality assessments, and nuanced categorization. This division leverages automation for objective facts while preserving human judgment for customer-facing content quality.

Autonomous vehicle development requires massive volumes of annotated sensor data. Hybrid workflows use automatic annotation for straightforward scenarios like highway driving with clear lane markings, reserving human expertise for complex urban environments, unusual weather conditions, and the edge cases that matter most when training safety-critical systems.

🚀 Future Trends Shaping Annotation Workflows

Advances in semi-supervised learning and few-shot learning are reducing the annotation volume required for model training. These techniques enable models to generalize from limited labeled examples, potentially shifting the balance toward greater automation while maintaining quality standards. The evolution continues toward more intelligent systems requiring less human intervention.

Explainable AI capabilities are enhancing automatic annotation reliability. When automated systems provide reasoning for their labeling decisions, human reviewers can more efficiently validate or correct annotations. This transparency bridges the gap between automatic speed and manual oversight, improving both efficiency and trust in hybrid systems.

Crowdsourcing platforms are evolving to better support hybrid workflows, offering sophisticated quality control mechanisms and task routing algorithms. These platforms enable organizations to scale human annotation capacity dynamically while maintaining quality through consensus mechanisms, expert review tiers, and automated quality checks.
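A consensus mechanism with an expert review tier can be as simple as a majority vote with an agreement floor. The sketch below is a hypothetical example: the 0.6 minimum agreement is arbitrary, and returning `None` stands in for escalating the item to an expert.

```python
from collections import Counter


def consensus(votes, min_agreement=0.6):
    """Return (label, agreement), or (None, agreement) when escalation is needed."""
    if not votes:
        raise ValueError("at least one vote is required")
    label, count = Counter(votes).most_common(1)[0]
    agreement = count / len(votes)
    if agreement >= min_agreement:
        return label, agreement
    return None, agreement  # too little agreement: send to the expert tier


clear = consensus(["spam", "spam", "ham"])
split = consensus(["spam", "ham", "other"])
print(clear[0])  # spam
print(split[0])  # None
```

Keeping the agreement floor above 0.5 guarantees that a tied vote is always escalated rather than resolved arbitrarily.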

🎓 Best Practices for Implementation Success

Starting with clear annotation guidelines proves essential for both automatic and manual components. Well-defined labeling criteria reduce ambiguity, improve inter-annotator agreement, and enable more accurate automatic annotation systems. Regular guideline updates based on edge cases and emerging patterns maintain workflow relevance as projects evolve.

Investing in annotator training yields significant returns in hybrid workflows. Even when automation handles the majority of simple cases, human annotators must understand the full annotation schema to effectively review and correct automatic predictions. Ongoing education about common automatic annotation errors improves review efficiency and quality.

Establishing feedback mechanisms between automatic and manual components creates continuous improvement. Tracking which types of automatic annotations require frequent human correction identifies areas for model enhancement. Similarly, analyzing manual annotation patterns reveals opportunities for expanding automatic coverage to new task categories.
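Tracking correction frequency per label is straightforward once review outcomes are logged as pairs of automatic and final labels. The function and sample data below are illustrative only.

```python
from collections import defaultdict


def correction_rates(reviews):
    """Share of reviewed automatic labels that humans changed, per label.

    reviews is an iterable of (auto_label, final_label) pairs.
    """
    totals = defaultdict(int)
    corrected = defaultdict(int)
    for auto_label, final_label in reviews:
        totals[auto_label] += 1
        if auto_label != final_label:
            corrected[auto_label] += 1
    return {label: corrected[label] / totals[label] for label in totals}


reviews = [
    ("cat", "cat"), ("cat", "dog"), ("dog", "dog"),
    ("cat", "cat"), ("dog", "dog"),
]
rates = correction_rates(reviews)
print(rates)  # "cat" was corrected in 1 of 3 reviews, "dog" in 0 of 2
```

Labels with persistently high correction rates are natural candidates for additional training data or a stricter review trigger.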

⚖️ Finding Your Optimal Balance

The ideal ratio of automatic to manual annotation varies dramatically based on project requirements, data characteristics, and quality standards. Organizations should conduct pilot programs testing different hybrid configurations before committing to large-scale implementations. Empirical performance data from representative samples guides more informed decisions than theoretical analysis alone.

Regular reassessment of the automation-manual balance ensures workflows adapt to changing circumstances. As automatic systems improve through continuous learning, tasks previously requiring human review may transition to automated processing. Conversely, project scope evolution may introduce new complexity requiring increased manual involvement.

Successful hybrid workflows maintain flexibility, allowing dynamic adjustment of automation thresholds and review triggers based on real-time performance monitoring. This adaptive approach responds to quality fluctuations, workload variations, and deadline pressures while maintaining overall efficiency and accuracy targets.
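One simple form of dynamic adjustment nudges the auto-accept threshold according to the error rate measured on audited samples. This is a sketch under stated assumptions: the step size, bounds, and target are hypothetical, and `observed_error` is assumed to come from periodic human audits of auto-accepted items.

```python
def adjust_threshold(threshold, observed_error, target_error,
                     step=0.01, lower=0.50, upper=0.99):
    """Move the auto-accept confidence threshold one step toward the target."""
    if observed_error > target_error:
        threshold += step  # too many errors: route more items to humans
    elif observed_error < target_error:
        threshold -= step  # quality headroom: let automation handle more
    # Clamp to the allowed range and round away floating-point noise.
    return round(min(upper, max(lower, threshold)), 4)


raised = adjust_threshold(0.90, observed_error=0.04, target_error=0.02)
lowered = adjust_threshold(0.90, observed_error=0.01, target_error=0.02)
print(raised)   # 0.91
print(lowered)  # 0.89
```

Small steps and hard bounds keep the workflow from oscillating when audit samples are small and error estimates are noisy.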

The journey toward optimal annotation efficiency represents an ongoing process of refinement rather than a destination. Organizations that embrace hybrid workflows while continuously measuring, analyzing, and adjusting their approaches position themselves to maximize both the quality of their annotated data and the efficiency of their annotation operations. The synergy between automatic speed and manual insight creates annotation systems greater than the sum of their parts, delivering the reliable, high-quality data that powers successful AI initiatives.

Toni Santos is a bioacoustic researcher and conservation technologist specializing in the study of animal communication systems, acoustic monitoring infrastructures, and the sonic landscapes embedded in natural ecosystems. Through an interdisciplinary and sensor-focused lens, Toni investigates how wildlife encodes behavior, territory, and survival into the acoustic world, across species, habitats, and conservation challenges.

His work is grounded in a fascination with animals not only as lifeforms, but as carriers of acoustic meaning. From endangered vocalizations to soundscape ecology and bioacoustic signal patterns, Toni uncovers the technological and analytical tools through which researchers preserve their understanding of the acoustic unknown.

With a background in applied bioacoustics and conservation monitoring, Toni blends signal analysis with field-based research to reveal how sounds are used to track presence, monitor populations, and decode ecological knowledge. As the creative mind behind Nuvtrox, Toni curates indexed communication datasets, sensor-based monitoring studies, and acoustic interpretations that revive the deep ecological ties between fauna, soundscapes, and conservation science.

His work is a tribute to:

- The archived vocal diversity of Animal Communication Indexing

- The tracked movements of Applied Bioacoustics Tracking

- The ecological richness of Conservation Soundscapes

- The layered detection networks of Sensor-based Monitoring

Whether you're a bioacoustic analyst, conservation researcher, or curious explorer of acoustic ecology, Toni invites you to explore the hidden signals of wildlife communication: one call, one sensor, one soundscape at a time.