Understanding animal communication through vocalizations is revolutionizing wildlife research, conservation efforts, and our connection with nature’s hidden conversations.

The challenge of deciphering what animals are saying has fascinated scientists for centuries. Today, we stand at the intersection of biology, technology, and artificial intelligence, where building labeled datasets for animal vocalizations has become essential for unlocking the secrets of the wild. This comprehensive guide explores the methodologies, challenges, and breakthroughs in creating these crucial databases that help us understand our planet’s diverse inhabitants.

🎵 The Symphony of Nature: Why Animal Vocalizations Matter

Every forest, ocean, and grassland echoes with complex acoustic communications that serve vital purposes. Animals use vocalizations for territorial defense, mating calls, warning signals, social bonding, and coordinating group activities. These sounds form intricate languages that researchers are only beginning to comprehend fully.

Labeled datasets for animal vocalizations serve as foundational tools for machine learning algorithms that can identify species, predict behaviors, monitor ecosystem health, and, in some studies, infer emotional states. Without these carefully curated collections of recorded and annotated sounds, our ability to develop automated recognition systems would be severely limited.

The importance extends beyond academic curiosity. Conservation organizations use vocalization recognition to track endangered species populations, detect poaching activities, and assess habitat quality. Understanding animal communication also enhances wildlife management, reduces human-animal conflicts, and deepens our appreciation for biodiversity.

The Anatomy of a Quality Vocalization Dataset 🔬

Creating a valuable labeled dataset requires more than simply recording animal sounds. The foundation of any effective database includes several critical components that ensure its utility for research and machine learning applications.

High-quality audio recordings form the cornerstone. These must capture vocalizations with minimal background noise, a sample rate more than twice the highest frequency of interest, and an appropriate bit depth. Professional-grade equipment typically includes directional microphones, windscreens, and, for species such as bats, recording devices capable of capturing ultrasonic frequencies beyond the range of human hearing.
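The "sufficient sample rate" requirement follows from the Nyquist criterion: to record a frequency f without aliasing, the sample rate must exceed 2f. The sketch below picks the smallest common recorder rate that clears that bound. The list of standard rates, the 10% safety margin, and the example frequencies are illustrative assumptions, not recommendations for any particular species.

```python
# Hypothetical list of sample rates commonly offered by field recorders (Hz).
STANDARD_RATES_HZ = [22_050, 44_100, 48_000, 96_000, 192_000, 256_000, 384_000, 500_000]

def nyquist_minimum(max_freq_hz: float) -> float:
    """Theoretical lower bound: the sample rate must exceed twice the top frequency."""
    return 2.0 * max_freq_hz

def pick_sample_rate(max_freq_hz: float, margin: float = 1.1) -> int:
    """Return the first standard rate above the Nyquist bound times a safety margin.

    The 10% margin is an assumption that leaves room for anti-aliasing filter roll-off.
    """
    required = nyquist_minimum(max_freq_hz) * margin
    for rate in STANDARD_RATES_HZ:
        if rate > required:
            return rate
    raise ValueError(f"No standard rate exceeds {required:.0f} Hz")

# A songbird call topping out near 10 kHz versus a bat call reaching about 120 kHz.
print(pick_sample_rate(10_000))   # 22050
print(pick_sample_rate(120_000))  # 384000
```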

Essential Metadata Components

Each recording in a labeled dataset must include comprehensive metadata that provides context and enables proper analysis. This information transforms raw audio files into scientifically valuable resources.

- Species identification with scientific nomenclature

- Date, time, and geographical coordinates of recording

- Environmental conditions (temperature, weather, habitat type)

- Behavioral context (alarm call, mating display, feeding vocalization)

- Individual identification when possible

- Recording equipment specifications

- Distance from sound source

- Presence of other species in the recording
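One way to keep these fields consistent across thousands of files is to give each recording a typed record with basic validation. The sketch below mirrors the checklist above; the field names, the binomial check, and the example values are illustrative assumptions rather than a published schema.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional

@dataclass
class RecordingMetadata:
    """One recording's context; field names are hypothetical, not a standard."""
    scientific_name: str            # species with scientific nomenclature
    recorded_at: datetime           # date and time, timezone-aware
    latitude: float                 # decimal degrees
    longitude: float
    habitat: str                    # environmental context
    temperature_c: Optional[float]
    weather: Optional[str]
    behavior: str                   # e.g. "alarm call", "mating display"
    individual_id: Optional[str]    # None when individuals are not identified
    equipment: str                  # recorder and microphone description
    distance_m: Optional[float]     # estimated distance to the sound source
    other_species: list[str] = field(default_factory=list)

    def validate(self) -> list[str]:
        """Return a list of problems; an empty list means the record passed."""
        problems = []
        if len(self.scientific_name.split()) < 2:
            problems.append("scientific_name should be a binomial")
        if self.recorded_at.tzinfo is None:
            problems.append("recorded_at must be timezone-aware")
        if not -90 <= self.latitude <= 90:
            problems.append("latitude out of range")
        if not -180 <= self.longitude <= 180:
            problems.append("longitude out of range")
        if self.distance_m is not None and self.distance_m < 0:
            problems.append("distance_m must be non-negative")
        return problems

rec = RecordingMetadata(
    scientific_name="Turdus merula",
    recorded_at=datetime(2024, 5, 3, 5, 42, tzinfo=timezone.utc),
    latitude=52.52, longitude=13.40, habitat="urban park",
    temperature_c=11.5, weather="clear", behavior="territorial song",
    individual_id=None, equipment="hypothetical recorder with omni microphone",
    distance_m=15.0, other_species=["Erithacus rubecula"],
)
print(rec.validate())  # []
```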

The annotation process itself requires expertise. Identifying the precise temporal boundaries of each vocalization, labeling sound types, and noting acoustic features demands both technical knowledge and biological understanding. Experienced bioacousticians often spend hours analyzing minutes of audio.

From Field to Database: Collection Methodologies 📡

The journey from natural soundscapes to structured datasets involves systematic approaches that balance scientific rigor with practical constraints. Researchers employ various collection strategies depending on their specific objectives and target species.

Passive acoustic monitoring represents one of the most common approaches. Autonomous recording units deployed in natural habitats capture sound continuously or at scheduled intervals, often for weeks or months. This method minimizes human disturbance while gathering extensive data across different times and conditions.
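Scheduled recording is largely a storage and battery trade-off. For uncompressed PCM audio, bytes per second equal sample rate × bytes per sample × channels, scaled by the fraction of time the unit is recording. The sketch below estimates daily storage for an on/off duty cycle; the 1-minute-in-10 schedule and 48 kHz, 16-bit mono settings are illustrative assumptions, and file-header overhead is ignored.

```python
def daily_storage_bytes(sample_rate_hz: int, bit_depth: int, channels: int,
                        on_minutes: float, cycle_minutes: float) -> float:
    """Uncompressed PCM bytes per day for a repeating on/off recording schedule."""
    if not 0 < on_minutes <= cycle_minutes:
        raise ValueError("on_minutes must be in (0, cycle_minutes]")
    bytes_per_second = sample_rate_hz * (bit_depth // 8) * channels
    duty_cycle = on_minutes / cycle_minutes       # fraction of time spent recording
    seconds_recorded = 24 * 3600 * duty_cycle
    return bytes_per_second * seconds_recorded

# Example: 48 kHz, 16-bit mono, recording 1 minute out of every 10.
per_day = daily_storage_bytes(48_000, 16, 1, on_minutes=1, cycle_minutes=10)
print(f"{per_day / 1e9:.2f} GB/day, {per_day * 60 / 1e9:.1f} GB over 60 days")
# 0.83 GB/day, 49.8 GB over 60 days
```

Doubling the duty cycle or the sample rate doubles the storage requirement, which is why deployment length and recording settings are usually planned together.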

Targeted recording expeditions allow researchers to focus on specific species or behaviors. Field biologists venture into habitats with portable equipment, responding to animal activity in real-time. This approach yields high-quality recordings with known behavioral contexts but requires significant time and expertise.

Citizen Science: Crowdsourcing Nature’s Sounds

The democratization of recording technology has opened new possibilities for dataset expansion. Smartphone applications and community platforms enable bird watchers, nature enthusiasts, and casual observers to contribute recordings with accompanying observations.

Projects like Xeno-canto, eBird, and iNaturalist have amassed millions of animal vocalizations through collective effort. While these crowdsourced recordings vary in quality and documentation completeness, they provide geographic and temporal coverage that professional researchers could never achieve alone.

Quality control becomes paramount when incorporating citizen science data. Verification systems involving expert review, automated quality filters, and community validation help ensure dataset reliability while maintaining the democratic spirit of participation.
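Community validation can be as simple as accepting a label only when enough reviewers agree, and routing everything else to an expert. The sketch below is one hypothetical rule; the minimum of three votes and the two-thirds agreement threshold are assumptions, and real platforms use their own, often more elaborate, schemes.

```python
from collections import Counter
from typing import Optional

def consensus_label(votes: list[str], min_votes: int = 3,
                    min_agreement: float = 2 / 3) -> Optional[str]:
    """Return the majority label if it is well supported, else None (send to expert)."""
    if len(votes) < min_votes:
        return None
    label, count = Counter(votes).most_common(1)[0]
    if count / len(votes) >= min_agreement:
        return label
    return None

print(consensus_label(["Parus major", "Parus major", "Cyanistes caeruleus"]))  # Parus major
print(consensus_label(["Parus major", "Cyanistes caeruleus"]))                 # None
```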

The Labeling Challenge: Turning Sound into Knowledge 🏷️

Converting raw audio recordings into labeled training data presents significant technical and conceptual challenges. The labeling process requires decisions about granularity, classification schemes, and the handling of ambiguous vocalizations.

Researchers must determine appropriate taxonomic levels for labels. Should recordings be categorized only by species, or should they include subspecies, individual variations, and dialect differences? Should labels reflect call types, emotional states, or functional purposes? These decisions profoundly impact how the resulting dataset can be utilized.

Annotation Tools and Workflows

Specialized software facilitates the labeling process by providing visual representations of sound through spectrograms alongside audio playback capabilities. Popular tools include Raven Pro, Audacity, and web-based platforms designed specifically for bioacoustic annotation.
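Raven Pro exports annotations as tab-delimited "selection tables", where each row is a time-frequency box drawn on the spectrogram. The sketch below parses such a table with the standard `csv` module. It assumes Raven's default column names (`Begin Time (s)`, `End Time (s)`, `Low Freq (Hz)`, `High Freq (Hz)`) and a hypothetical user-added `Species` column; check these against your own exports before relying on it.

```python
import csv
import io
from dataclasses import dataclass

@dataclass
class Annotation:
    begin_s: float
    end_s: float
    low_hz: float
    high_hz: float
    label: str

def read_selection_table(text: str, label_column: str = "Species") -> list[Annotation]:
    """Parse a tab-delimited, Raven-style selection table into annotation boxes."""
    reader = csv.DictReader(io.StringIO(text), delimiter="\t")
    annotations = []
    for row in reader:
        ann = Annotation(
            begin_s=float(row["Begin Time (s)"]),
            end_s=float(row["End Time (s)"]),
            low_hz=float(row["Low Freq (Hz)"]),
            high_hz=float(row["High Freq (Hz)"]),
            label=row[label_column].strip(),   # hypothetical annotation column
        )
        # Reject boxes with zero or negative duration or bandwidth.
        if ann.end_s <= ann.begin_s or ann.high_hz <= ann.low_hz:
            raise ValueError(f"Invalid box near line {reader.line_num}: {ann}")
        annotations.append(ann)
    return annotations

example = (
    "Selection\tView\tChannel\tBegin Time (s)\tEnd Time (s)\tLow Freq (Hz)\tHigh Freq (Hz)\tSpecies\n"
    "1\tSpectrogram 1\t1\t2.10\t2.85\t2500\t7800\tParus major\n"
    "2\tSpectrogram 1\t1\t5.40\t5.90\t3000\t8200\tParus major\n"
)
for a in read_selection_table(example):
    print(a.label, round(a.end_s - a.begin_s, 2))
```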

Efficient workflows typically involve multiple stages. Initial processing separates vocalizations from silence and noise. Subsequent passes identify species or sound types. Final refinement adds detailed metadata and verifies accuracy through cross-checking. Maintaining consistency across annotators requires clear protocols and regular calibration sessions.
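The first stage, separating candidate vocalizations from silence and noise, can be sketched as a frame-energy detector. This is a deliberately simple stand-in technique (production pipelines often use band-limited energy or trained detectors). The 20 ms frame, the 10 dB threshold above a median noise floor, and the gap tolerance are assumptions, and the median estimate only works if vocalizations occupy less than half the recording.

```python
import numpy as np

def detect_events(signal: np.ndarray, sample_rate: int, frame_ms: float = 20.0,
                  threshold_db: float = 10.0, min_gap_frames: int = 2):
    """Return (start_s, end_s) spans whose frame energy exceeds the noise floor."""
    frame_len = int(sample_rate * frame_ms / 1000)
    n_frames = len(signal) // frame_len
    frames = signal[: n_frames * frame_len].reshape(n_frames, frame_len)
    energy_db = 10 * np.log10(np.mean(frames ** 2, axis=1) + 1e-12)
    active = energy_db > np.median(energy_db) + threshold_db  # median as noise floor

    events, start, gap = [], None, 0
    for i, is_active in enumerate(active):
        if is_active:
            if start is None:
                start = i
            gap = 0
        elif start is not None:
            gap += 1
            if gap > min_gap_frames:            # close the event after a short silence
                events.append((start, i - gap + 1))
                start, gap = None, 0
    if start is not None:                       # event still open at end of file
        events.append((start, n_frames - gap))
    frame_s = frame_len / sample_rate
    return [(s * frame_s, e * frame_s) for s, e in events]

rng = np.random.default_rng(0)
sr = 16_000
t = np.arange(sr * 2) / sr                      # 2 s of audio
audio = 0.01 * rng.standard_normal(t.size)      # background noise
call = (t >= 0.5) & (t < 0.8)                   # synthetic 300 ms tone as the "call"
audio[call] += 0.5 * np.sin(2 * np.pi * 3000 * t[call])
print(detect_events(audio, sr))
```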

Inter-rater reliability measures help quantify annotation consistency. When multiple experts label the same recordings, comparing their results reveals ambiguities in classification schemes and identifies sounds that challenge even experienced researchers.
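A widely used measure for two annotators is Cohen's kappa, κ = (p_o − p_e) / (1 − p_e), where p_o is the observed agreement and p_e is the agreement expected by chance from each annotator's label frequencies. The sketch below computes it for two hypothetical annotators labeling six calls; the call-type labels are made up for illustration.

```python
from collections import Counter

def cohens_kappa(labels_a: list[str], labels_b: list[str]) -> float:
    """Cohen's kappa for two annotators who labeled the same items in the same order."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("Need two equal-length, non-empty label lists")
    n = len(labels_a)
    p_o = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    p_e = sum(freq_a[k] * freq_b[k] for k in freq_a) / (n * n)
    if p_e == 1.0:
        return 1.0  # convention: both used one identical label throughout
    return (p_o - p_e) / (1 - p_e)

a = ["song", "song", "alarm", "contact", "alarm", "song"]
b = ["song", "alarm", "alarm", "contact", "alarm", "song"]
print(round(cohens_kappa(a, b), 3))  # 0.739
```

Here the annotators agree on 5 of 6 calls (p_o ≈ 0.83), but because "song" and "alarm" are common, chance agreement is already 13/36, so kappa (17/23 ≈ 0.74) is lower than raw agreement.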

🤖 Machine Learning Meets the Wild: Dataset Applications

Labeled vocalization datasets fuel artificial intelligence systems that can automatically recognize species, detect specific calls, and monitor wildlife populations at scales previously impossible. These applications transform conservation and research capabilities.

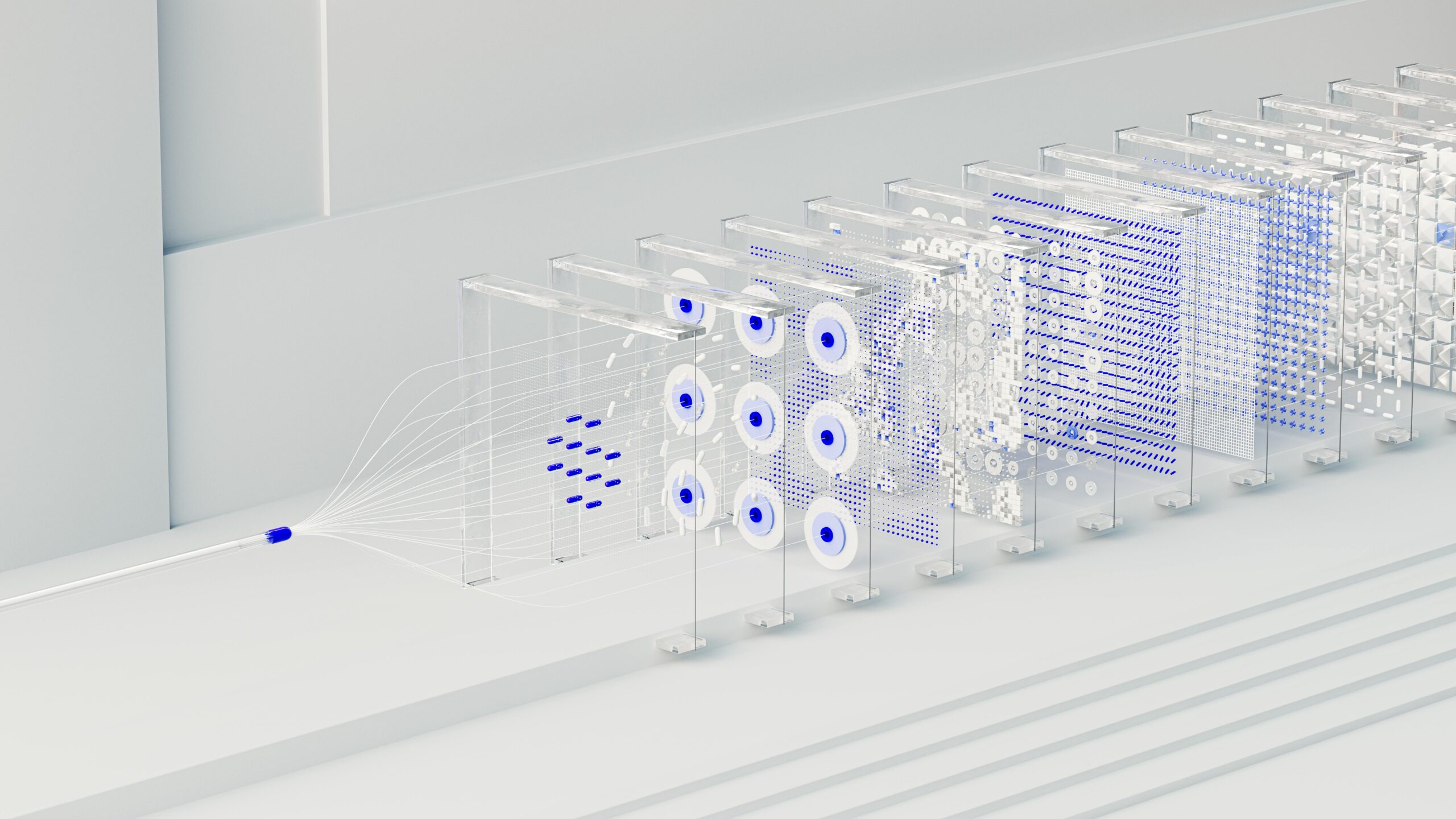

Supervised learning algorithms train on labeled examples, learning to distinguish between different species or call types based on acoustic features. Deep neural networks, particularly convolutional neural networks applied to spectrogram representations of audio, have achieved high accuracy in many species identification tasks.
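CNNs usually consume audio as a spectrogram: a 2-D time-frequency image computed with a short-time Fourier transform. The sketch below computes a log-magnitude spectrogram with NumPy only; the 512-sample Hann window and 128-sample hop are common but arbitrary choices, and the synthetic chirp stands in for a real call.

```python
import numpy as np

def log_spectrogram(signal: np.ndarray, sample_rate: int, n_fft: int = 512,
                    hop: int = 128) -> tuple[np.ndarray, np.ndarray, np.ndarray]:
    """STFT magnitude in dB. Returns (spec_db [freq_bins, frames], freqs_hz, times_s)."""
    window = np.hanning(n_fft)
    n_frames = 1 + (len(signal) - n_fft) // hop
    frames = np.stack([signal[i * hop: i * hop + n_fft] * window for i in range(n_frames)])
    magnitude = np.abs(np.fft.rfft(frames, axis=1)).T      # [n_fft // 2 + 1, n_frames]
    spec_db = 20 * np.log10(magnitude + 1e-10)             # small offset avoids log(0)
    freqs = np.fft.rfftfreq(n_fft, d=1 / sample_rate)
    times = (np.arange(n_frames) * hop + n_fft / 2) / sample_rate  # frame centers
    return spec_db, freqs, times

sr = 22_050
t = np.arange(sr) / sr
chirp = np.sin(2 * np.pi * (2000 + 2000 * t) * t)   # sweep from about 2 kHz to 6 kHz
spec, freqs, times = log_spectrogram(chirp, sr)
print(spec.shape)                                   # (257, 169)
```

The resulting array can be treated like a single-channel image, which is what lets image-style convolutional architectures be reused for sound.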

Real-world applications demonstrate the transformative potential. Automated monitoring systems deployed in protected areas can detect gunshots indicating poaching activity, identify endangered species presence, and track population trends through passive acoustic monitoring. These systems operate continuously without human fatigue, analyzing soundscapes in near real-time.

Addressing Data Imbalance and Bias

Most vocalization datasets suffer from significant imbalances. Common species with accessible habitats are overrepresented, while rare, elusive, or geographically restricted animals have minimal representation. This skew affects model performance and limits applicability.

Researchers employ various strategies to address these limitations. Data augmentation techniques artificially expand underrepresented classes by applying transformations like time-stretching, pitch-shifting, and adding realistic background noise. Transfer learning leverages models trained on abundant species to improve recognition of rare ones with limited data.
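Two of these augmentations are easy to sketch with NumPy: mixing in background noise at a chosen signal-to-noise ratio, and a speed perturbation by resampling. Note that the resampling trick changes pitch and duration together; independent time-stretching or pitch-shifting needs a phase vocoder, such as `librosa.effects.time_stretch` and `librosa.effects.pitch_shift`. The parameter ranges (speed 0.9 to 1.1, SNR 5 to 20 dB) and the synthetic signals are assumptions for illustration.

```python
import numpy as np

def mix_at_snr(call: np.ndarray, background: np.ndarray, snr_db: float) -> np.ndarray:
    """Add background noise scaled so the call-to-noise power ratio equals snr_db."""
    if len(background) < len(call):
        raise ValueError("background must be at least as long as the call")
    background = background[: len(call)]
    call_power = np.mean(call ** 2)
    noise_power = np.mean(background ** 2)
    scale = np.sqrt(call_power / (noise_power * 10 ** (snr_db / 10)))
    return call + scale * background

def speed_perturb(call: np.ndarray, factor: float) -> np.ndarray:
    """Linear-interpolation resampling: factor > 1 shortens the call and raises its pitch."""
    n_out = int(round(len(call) / factor))
    positions = np.linspace(0, len(call) - 1, n_out)
    return np.interp(positions, np.arange(len(call)), call)

def augment(call, background, rng, n_copies=4):
    """Create randomized variants of one under-represented example (ranges are assumptions)."""
    variants = []
    for _ in range(n_copies):
        v = speed_perturb(call, rng.uniform(0.9, 1.1))
        offset = rng.integers(0, len(background) - len(v) + 1)  # random noise segment
        v = mix_at_snr(v, background[offset: offset + len(v)], rng.uniform(5, 20))
        variants.append(v)
    return variants

rng = np.random.default_rng(42)
sr = 16_000
t = np.arange(int(0.5 * sr)) / sr
call = np.sin(2 * np.pi * 4000 * t)              # stand-in for a rare species' call
background = rng.standard_normal(2 * sr)         # stand-in for field noise
variants = augment(call, background, rng)
print([len(v) for v in variants])
```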

Geographic and temporal bias also requires attention. Datasets dominated by recordings from specific regions or seasons may not generalize well to other contexts. Deliberate efforts to capture diversity across habitats, weather conditions, and times of day improve model robustness.
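A simple first step is to audit coverage: count recordings per combination of region and season (or time of day) and flag the cells that fall below a target. The sketch below does this with the standard library; the region and season labels and the threshold of 50 recordings per cell are hypothetical.

```python
from collections import Counter
from itertools import product

def coverage_gaps(recordings, regions, seasons, min_count=50):
    """List (region, season) cells with fewer than min_count recordings.

    `recordings` is an iterable of (region, season) pairs.
    """
    counts = Counter(recordings)
    return sorted(
        (cell, counts.get(cell, 0))
        for cell in product(regions, seasons)
        if counts.get(cell, 0) < min_count
    )

recs = [("lowland", "wet")] * 120 + [("lowland", "dry")] * 80 + [("montane", "wet")] * 7
print(coverage_gaps(recs, ["lowland", "montane"], ["wet", "dry"]))
# [(('montane', 'dry'), 0), (('montane', 'wet'), 7)]
```

Reporting empty cells explicitly matters: a model can only be expected to generalize to conditions the dataset actually samples.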

Ethical Considerations in Wildlife Recording 🌍

Building vocalization datasets carries ethical responsibilities toward the animals being recorded and the ecosystems they inhabit. Minimizing disturbance should guide all collection activities, ensuring that research doesn’t harm the subjects it aims to understand.

Playback experiments that broadcast recorded calls to elicit responses can stress animals, disrupt breeding activities, or attract predators. Researchers must carefully weigh scientific benefits against potential welfare impacts, following established ethical guidelines and obtaining appropriate permits.

Data sharing practices raise additional considerations. Precise location information for endangered species could facilitate poaching if datasets fall into wrong hands. Balancing open science principles with conservation security requires thoughtful approaches like location obfuscation or restricted access protocols.
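One common form of location obfuscation is spatial generalization: publishing the center of a coarse grid cell instead of the exact coordinates. The sketch below snaps WGS84 latitude and longitude to a 0.1° grid (about 11 km of latitude); the cell size is an illustrative assumption, and sensitive species may warrant much coarser cells or withholding locations entirely.

```python
import math

def generalize_location(lat: float, lon: float, cell_deg: float = 0.1) -> tuple[float, float]:
    """Snap coordinates to the center of a grid cell of size cell_deg degrees.

    All recordings in the same cell share identical published coordinates,
    so the exact site cannot be recovered from the shared data.
    """
    snapped_lat = (math.floor(lat / cell_deg) + 0.5) * cell_deg
    snapped_lon = (math.floor(lon / cell_deg) + 0.5) * cell_deg
    # Keep cell centers inside valid coordinate ranges at the poles and antimeridian.
    snapped_lat = min(snapped_lat, 90.0 - cell_deg / 2)
    snapped_lon = min(snapped_lon, 180.0 - cell_deg / 2)
    return round(snapped_lat, 6), round(snapped_lon, 6)

print(generalize_location(-3.46521, -62.21457))  # (-3.45, -62.25)
```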

Standardization and Interoperability: Building Better Together 📊

The proliferation of vocalization datasets created by different research groups has highlighted the need for standardization. Consistent formats, metadata schemas, and labeling conventions would enhance dataset utility and enable meta-analyses across studies.

International initiatives are working toward common standards. Ontologies developed within the audio analysis community provide controlled vocabularies for describing sound events, and file format recommendations help keep recordings accessible as technology evolves.

| Standard Component | Purpose | Example Implementation |
|---|---|---|
| Audio Format | Ensure quality and compatibility | WAV files, 44.1 kHz minimum (higher for ultrasonic taxa such as bats), 16-bit depth |
| Metadata Schema | Consistent information structure | Darwin Core for biodiversity data |
| Taxonomic Authority | Standardized species names | Integrated Taxonomic Information System (ITIS) |
| Geographic Reference | Location precision | WGS84 coordinate system |
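Format requirements like the audio row above can be checked automatically at ingestion time. The sketch below reads a WAV header with Python's standard-library `wave` module (which handles PCM WAV) and reports files below a minimum sample rate or bit depth. The thresholds simply mirror the table's example row, and the in-memory test files are for demonstration only.

```python
import io
import wave

def check_wav(fileobj, min_rate_hz: int = 44_100, min_bits: int = 16) -> list[str]:
    """Compare a WAV file's header against minimum archive settings."""
    with wave.open(fileobj, "rb") as w:
        rate, bits = w.getframerate(), 8 * w.getsampwidth()
        n_frames = w.getnframes()
    issues = []
    if rate < min_rate_hz:
        issues.append(f"sample rate {rate} Hz below {min_rate_hz} Hz")
    if bits < min_bits:
        issues.append(f"bit depth {bits} below {min_bits}")
    if n_frames == 0:
        issues.append("file contains no audio frames")
    return issues

def make_test_wav(rate: int, sampwidth: int, n_frames: int) -> io.BytesIO:
    """Write a mono WAV of zero-valued bytes into memory (header testing only)."""
    buf = io.BytesIO()
    with wave.open(buf, "wb") as w:
        w.setnchannels(1)
        w.setsampwidth(sampwidth)
        w.setframerate(rate)
        w.writeframes(b"\x00" * sampwidth * n_frames)
    buf.seek(0)
    return buf

print(check_wav(make_test_wav(48_000, 2, 48_000)))  # []
print(check_wav(make_test_wav(22_050, 1, 22_050)))
# ['sample rate 22050 Hz below 44100 Hz', 'bit depth 8 below 16']
```

For ultrasonic recordings, raising `min_rate_hz` (for example to a few hundred kHz) turns the same check into a guard against archiving aliased bat calls.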

Repositories like the Macaulay Library, Animal Sound Archive, and specialized databases for specific taxa serve as centralized resources. These platforms implement quality standards, provide discovery tools, and ensure long-term preservation of irreplaceable recordings.

Emerging Technologies Reshaping the Field 🚀

Technological advances continue to expand possibilities for vocalization dataset development. Edge computing enables sophisticated processing on field-deployed devices, allowing real-time species identification without cloud connectivity. This capability proves invaluable in remote locations with limited infrastructure.

Acoustic sensors are becoming smaller, more affordable, and more capable. Solar-powered units can operate for extended periods, limited mainly by storage capacity and maintenance, and improved battery technology extends deployment durations. Networked sensor arrays provide spatial information about vocalization sources and animal movements.
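One common way arrays yield spatial information is time difference of arrival (TDOA): the same call reaches two microphones at slightly different times, and the delay constrains the direction of the source. The sketch below estimates the delay from the peak of the cross-correlation and converts it to a far-field bearing. It assumes a speed of sound of 343 m/s (air at roughly 20 °C), a distant source, and a synthetic broadband "call"; real arrays need more microphones and noise-robust correlation to localize in 2-D or 3-D.

```python
import numpy as np

SPEED_OF_SOUND_M_S = 343.0  # assumption: air at about 20 °C; varies with temperature

def estimate_delay(sig_a: np.ndarray, sig_b: np.ndarray, sample_rate: int) -> float:
    """Seconds by which sig_b lags sig_a, from the cross-correlation peak."""
    corr = np.correlate(sig_b, sig_a, mode="full")
    lag = np.argmax(corr) - (len(sig_a) - 1)  # index offset for "full" mode
    return lag / sample_rate

def bearing_deg(delay_s: float, mic_spacing_m: float) -> float:
    """Far-field angle of arrival relative to the array's broadside direction."""
    ratio = np.clip(SPEED_OF_SOUND_M_S * delay_s / mic_spacing_m, -1.0, 1.0)
    return float(np.degrees(np.arcsin(ratio)))

rng = np.random.default_rng(1)
sr = 48_000
call = rng.standard_normal(4_800)                 # 100 ms broadband stand-in call
true_delay = 35                                   # samples
mic_a = np.concatenate([call, np.zeros(100)])
mic_b = np.concatenate([np.zeros(true_delay), call, np.zeros(100 - true_delay)])
dt = estimate_delay(mic_a, mic_b, sr)
print(dt * sr, round(bearing_deg(dt, mic_spacing_m=0.5), 1))
```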

Artificial intelligence itself aids dataset creation through active learning approaches. Models trained on existing data identify uncertain classifications in new recordings, directing human expert attention to the most informative examples. This human-AI collaboration accelerates labeling while maintaining quality.
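The text does not name a specific strategy, but a common one is uncertainty sampling: rank unlabeled clips by how unsure the model is, for example by the entropy of its predicted class probabilities, and send the top few to experts. The sketch below assumes a model has already produced probabilities; the clip IDs and numbers are hypothetical.

```python
import math

def entropy(probs: list[float]) -> float:
    """Shannon entropy (nats) of a predicted class distribution; higher means less certain."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def select_for_review(predictions: dict[str, list[float]], budget: int) -> list[str]:
    """Return the clip IDs whose predicted class distributions are most uncertain."""
    ranked = sorted(predictions, key=lambda clip: entropy(predictions[clip]), reverse=True)
    return ranked[:budget]

preds = {
    "clip_001": [0.97, 0.02, 0.01],   # confident
    "clip_002": [0.40, 0.35, 0.25],   # ambiguous across all classes
    "clip_003": [0.55, 0.44, 0.01],   # torn between two classes
    "clip_004": [0.90, 0.05, 0.05],
}
print(select_for_review(preds, budget=2))  # ['clip_002', 'clip_003']
```

After experts label the selected clips, the model is retrained and the ranking repeated, so human effort concentrates where the model learns the most.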

The Promise of Unsupervised Learning

Recent developments in unsupervised machine learning offer potential solutions to labeling bottlenecks. Self-supervised algorithms can discover patterns in unlabeled audio, clustering similar vocalizations without human annotation. While these approaches don’t replace expert labeling entirely, they can assist in organizing vast audio archives and identifying interesting patterns for further investigation.

Generative models trained on vocalization datasets are beginning to synthesize realistic animal sounds. Beyond their use in data augmentation, these tools might eventually help test hypotheses about animal perception and communication by generating controlled variations of natural calls.

Looking Ahead: The Future of Animal Communication Research 🔮

The field stands at an exciting threshold where accumulating labeled datasets, advancing algorithms, and improving field technology converge to enable unprecedented insights into animal communication. Future directions promise even deeper understanding of the language of the wild.

Multimodal datasets that combine vocalizations with visual observations, movement data, and physiological measurements will reveal how communication integrates with behavior and environment. Video recordings synchronized with audio provide context that enhances interpretation and enables study of visual communication components.

Longitudinal studies tracking identified individuals across seasons and years will illuminate how communication systems develop, change with experience, and vary across social contexts. These datasets require sustained commitment but offer unmatched insights into communication flexibility and cultural transmission.

Cross-species comparative approaches enabled by standardized datasets may reveal universal principles of acoustic communication. Understanding commonalities and differences across taxa could shed light on the evolutionary origins and functions of complex communication systems.

Empowering Conservation Through Sound 💚

Ultimately, building labeled datasets for animal vocalizations serves conservation goals. As biodiversity faces unprecedented threats, acoustic monitoring provides scalable, non-invasive methods for assessing ecosystem health and tracking species populations.

Early warning systems based on vocalization recognition can detect ecosystem changes before they become catastrophic. Shifts in species composition, declining population densities, and behavioral responses to disturbance all manifest in soundscape characteristics that trained models can identify.

Community engagement with vocalization recognition creates new pathways for conservation participation. When people learn to identify species by sound and contribute observations, they develop deeper connections with local wildlife and become stakeholders in protection efforts.

The journey to unlock the language of the wild requires collaboration across disciplines, integration of traditional ecological knowledge with modern technology, and sustained commitment to rigorous data collection. Every carefully labeled recording brings us closer to understanding our planet’s remarkable acoustic diversity and equips us with tools to preserve it for future generations. By building comprehensive, high-quality vocalization datasets, we create foundations for discoveries that will resonate through conservation, science, and our fundamental relationship with the natural world.

Toni Santos is a bioacoustic researcher and conservation technologist specializing in the study of animal communication systems, acoustic monitoring infrastructures, and the sonic landscapes embedded in natural ecosystems. Through an interdisciplinary and sensor-focused lens, Toni investigates how wildlife encodes behavior, territory, and survival into the acoustic world, across species, habitats, and conservation challenges.

His work is grounded in a fascination with animals not only as lifeforms, but as carriers of acoustic meaning. From endangered vocalizations to soundscape ecology and bioacoustic signal patterns, Toni uncovers the technological and analytical tools through which researchers preserve their understanding of the acoustic unknown.

With a background in applied bioacoustics and conservation monitoring, Toni blends signal analysis with field-based research to reveal how sounds are used to track presence, monitor populations, and decode ecological knowledge.

As the creative mind behind Nuvtrox, Toni curates indexed communication datasets, sensor-based monitoring studies, and acoustic interpretations that revive the deep ecological ties between fauna, soundscapes, and conservation science.

His work is a tribute to:

- The archived vocal diversity of Animal Communication Indexing

- The tracked movements of Applied Bioacoustics Tracking

- The ecological richness of Conservation Soundscapes

- The layered detection networks of Sensor-based Monitoring

Whether you're a bioacoustic analyst, conservation researcher, or curious explorer of acoustic ecology, Toni invites you to explore the hidden signals of wildlife communication: one call, one sensor, one soundscape at a time.