Vocalization technology is transforming how machines understand human speech: feature extraction techniques turn raw audio into measurable characteristics, enabling increasingly accurate audio analysis and categorization.

🎙️ The Revolutionary Landscape of Vocal Feature Extraction

The human voice carries an extraordinary amount of information beyond just the words spoken. Every vocalization contains unique acoustic signatures, emotional undertones, and physiological characteristics that can be decoded through advanced feature extraction methods. This intricate process has become the cornerstone of modern speech recognition, speaker identification, emotion detection, and countless other applications that rely on precise audio categorization.

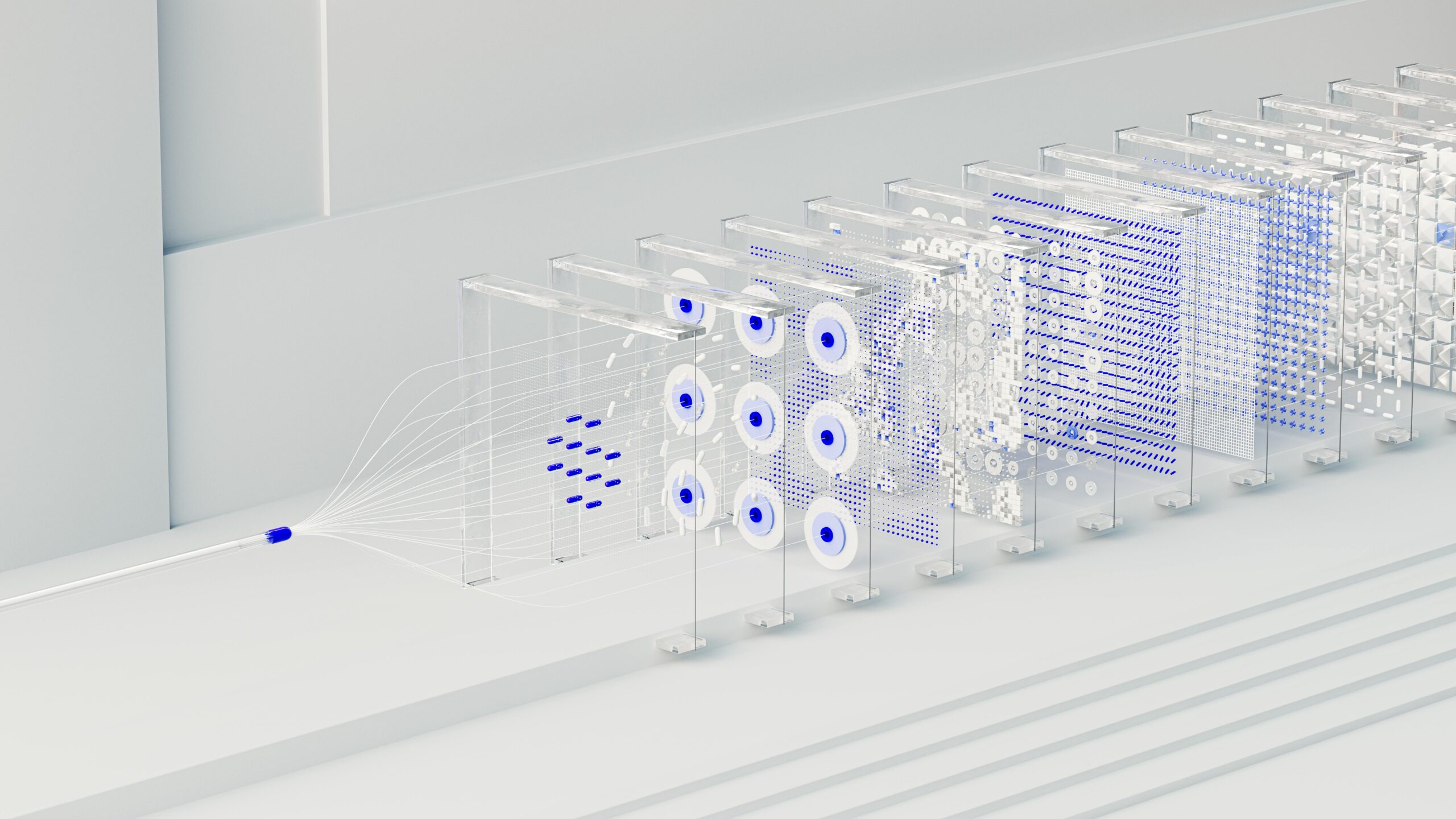

Feature extraction in vocalization analysis is the bridge between raw acoustic signals and meaningful interpretations. By transforming complex audio waveforms into structured data points, it enables machine learning algorithms to identify patterns, classify speakers, detect emotions, and even screen for signs of health conditions. These techniques have evolved dramatically, from simple amplitude measurements to multi-dimensional spectral analysis that captures the subtle nuances of human speech.

Understanding the Anatomy of Vocal Features

Before diving into extraction techniques, it’s essential to understand what constitutes a vocal feature. The human vocal apparatus produces sound through a complex interplay of respiratory control, laryngeal vibration, and articulatory movements. Each component contributes distinct characteristics to the final acoustic output, creating a rich tapestry of information that can be systematically analyzed.

Fundamental frequency (F0), the rate at which the vocal folds vibrate and the main acoustic correlate of perceived pitch, is one of the most basic yet powerful features. F0 depends on the length, mass, and tension of the vocal folds, so it differs significantly between individuals and shifts with emotional state. Formants, the resonant frequencies of the vocal tract, provide critical information about vowel production and speaker identity. These spectral peaks shift as the vocal tract changes shape during speech, creating distinctive patterns for different phonemes.
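A common way to estimate F0 from a short voiced frame is to find the lag at which the frame best matches a delayed copy of itself, that is, the strongest peak of its autocorrelation. The sketch below is a minimal NumPy illustration, assuming a single clean, voiced 40 ms frame and an assumed 60 to 400 Hz search range; practical pitch trackers add voicing decisions and guard against octave errors.

```python
import numpy as np

def estimate_f0_autocorr(frame, sr, fmin=60.0, fmax=400.0):
    """Estimate F0 in Hz for one voiced frame from its strongest autocorrelation peak."""
    frame = frame - np.mean(frame)  # remove DC so it does not inflate every lag
    ac = np.correlate(frame, frame, mode="full")[len(frame) - 1:]  # lags 0..N-1
    lag_min = int(sr / fmax)  # shortest period considered (highest F0)
    lag_max = min(int(sr / fmin), len(ac) - 1)  # longest period considered (lowest F0)
    lag = lag_min + int(np.argmax(ac[lag_min:lag_max + 1]))
    return sr / lag

sr = 16000
t = np.arange(int(0.04 * sr)) / sr  # one 40 ms frame
# Synthetic voiced frame: 150 Hz fundamental plus two weaker harmonics
x = (np.sin(2 * np.pi * 150 * t) + 0.5 * np.sin(2 * np.pi * 300 * t)
     + 0.25 * np.sin(2 * np.pi * 450 * t))
print(f"Estimated F0: {estimate_f0_autocorr(x, sr):.1f} Hz")
```

Because the estimate is quantized to whole-sample lags, it lands within roughly 1 Hz of 150 Hz at this sampling rate; interpolating around the peak would refine it.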

Temporal and Spectral Dimensions

Vocal features exist across both temporal and spectral domains, each offering unique insights. Temporal features capture how sound characteristics change over time, including speech rate, rhythm patterns, and pause durations. These temporal dynamics reveal information about speaking style, emotional state, and even cognitive processing. Spectral features, conversely, analyze the frequency content of vocalizations, revealing the harmonic structure and energy distribution across different frequency bands.

The interplay between these dimensions creates a comprehensive acoustic fingerprint. Energy distribution patterns show how vocal intensity varies across frequencies and time, while spectral flux measures how quickly the spectrum changes from one frame to the next, capturing the dynamic nature of continuous speech. Zero-crossing rate, the rate at which the signal changes sign within a frame, indicates how noisy or periodic a sound is: unvoiced sounds such as fricatives tend to have high rates, while voiced, periodic sounds have low ones.
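The three frame-level measures just described can be sketched with NumPy as follows. The 25 ms frames with a 10 ms hop (400 and 160 samples at 16 kHz) are assumed, commonly used values, and the flux shown is one common variant (the Euclidean distance between consecutive magnitude spectra); other definitions rectify or normalize the difference.

```python
import numpy as np

def frame_signal(x, frame_len, hop):
    """Split a 1-D signal into overlapping frames, dropping any incomplete tail."""
    n_frames = 1 + (len(x) - frame_len) // hop
    idx = np.arange(frame_len)[None, :] + hop * np.arange(n_frames)[:, None]
    return x[idx]

def short_time_features(x, frame_len=400, hop=160):
    """Per-frame RMS energy, zero-crossing rate, and spectral flux."""
    frames = frame_signal(x, frame_len, hop)
    rms = np.sqrt(np.mean(frames ** 2, axis=1))
    # Zero-crossing rate: fraction of adjacent sample pairs whose sign differs
    signs = np.signbit(frames)
    zcr = np.mean(signs[:, 1:] != signs[:, :-1], axis=1)
    # Spectral flux: Euclidean distance between consecutive magnitude spectra
    mag = np.abs(np.fft.rfft(frames * np.hanning(frame_len), axis=1))
    flux = np.concatenate([[0.0], np.linalg.norm(np.diff(mag, axis=0), axis=1)])
    return rms, zcr, flux

sr = 16000
rng = np.random.default_rng(0)
t = np.arange(sr) / sr
tone = 0.5 * np.sin(2 * np.pi * 200 * t)   # periodic, like a voiced sound
noise = 0.5 * rng.standard_normal(sr)      # aperiodic, like a fricative
_, zcr_tone, _ = short_time_features(tone)
_, zcr_noise, _ = short_time_features(noise)
print(f"mean ZCR, tone: {zcr_tone.mean():.3f}, noise: {zcr_noise.mean():.3f}")
```

On this synthetic input the noise shows a far higher zero-crossing rate than the tone, mirroring the contrast between fricatives and voiced sounds.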

🔬 Core Techniques for Feature Extraction

Modern vocalization analysis employs several fundamental techniques that have proven remarkably effective across diverse applications. These methods transform raw audio signals into meaningful representations that machines can process and categorize with high precision.

Mel-Frequency Cepstral Coefficients (MFCCs)

MFCCs have long been a standard representation in speech and audio processing because they loosely mimic how the human auditory system perceives sound. The technique converts audio into a compact representation that emphasizes perceptually relevant information while discarding redundant detail. The signal is split into short overlapping frames, and each frame’s power spectrum is passed through a bank of filters spaced on the mel scale, which better matches human frequency perception. Taking the logarithm of the filter energies compresses their dynamic range, and a discrete cosine transform then decorrelates them into a compact feature vector.

The power of MFCCs lies in their ability to summarize the spectral envelope, and with it the timbre, of a sound in a handful of largely decorrelated numbers. Because that envelope reflects both the phoneme being produced and the shape of the speaker’s vocal tract, MFCCs are used for speech recognition as well as speaker identification, and for tasks as far afield as music genre classification. The first 13 coefficients are a common choice, since they capture most of the envelope information, though the number can be adjusted for a specific application.
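The MFCC pipeline described above can be sketched end to end in NumPy: framing and windowing, power spectrum, mel filterbank, logarithm, and DCT. This is a minimal illustration rather than a reference implementation; the frame length, hop, FFT size, and 26-filter bank are assumed, commonly used settings, and libraries such as librosa make different choices (mel formula, filter normalization, scaling), so their values will not match this sketch exactly.

```python
import numpy as np

def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + f / 700.0)

def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)

def mel_filterbank(n_mels, n_fft, sr):
    """Triangular filters whose centers are evenly spaced on the mel scale."""
    mel_points = np.linspace(hz_to_mel(0.0), hz_to_mel(sr / 2), n_mels + 2)
    bins = np.floor((n_fft + 1) * mel_to_hz(mel_points) / sr).astype(int)
    fb = np.zeros((n_mels, n_fft // 2 + 1))
    for m in range(1, n_mels + 1):
        left, center, right = bins[m - 1], bins[m], bins[m + 1]
        for k in range(left, center):   # rising edge of the triangle
            fb[m - 1, k] = (k - left) / max(center - left, 1)
        for k in range(center, right):  # falling edge of the triangle
            fb[m - 1, k] = (right - k) / max(right - center, 1)
    return fb

def dct_ii_ortho(n_out, n_in):
    """Orthonormal DCT-II matrix, keeping only the first n_out rows."""
    k = np.arange(n_out)[:, None]
    n = np.arange(n_in)[None, :]
    mat = np.cos(np.pi * k * (2 * n + 1) / (2 * n_in)) * np.sqrt(2.0 / n_in)
    mat[0] /= np.sqrt(2.0)
    return mat

def mfcc(x, sr, n_mfcc=13, n_mels=26, frame_len=400, hop=160, n_fft=512):
    # 1) Frame the signal and apply a Hamming window
    n_frames = 1 + (len(x) - frame_len) // hop
    idx = np.arange(frame_len)[None, :] + hop * np.arange(n_frames)[:, None]
    frames = x[idx] * np.hamming(frame_len)
    # 2) Power spectrum of each frame
    power = np.abs(np.fft.rfft(frames, n=n_fft, axis=1)) ** 2 / n_fft
    # 3) Mel filterbank energies, then 4) log compression (floor avoids log(0))
    mel_energy = power @ mel_filterbank(n_mels, n_fft, sr).T
    log_mel = np.log(np.maximum(mel_energy, 1e-10))
    # 5) DCT decorrelates the log energies; keep the first n_mfcc coefficients
    return log_mel @ dct_ii_ortho(n_mfcc, n_mels).T

rng = np.random.default_rng(0)
x = rng.standard_normal(16000)  # 1 s of noise at 16 kHz as placeholder audio
feats = mfcc(x, sr=16000)
print(feats.shape)  # (98, 13): one 13-coefficient vector per 10 ms hop
```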

Spectral Centroid and Spread

The spectral centroid indicates where the “center of mass” of the spectrum is located, providing a measure of spectral brightness. Higher centroid values correspond to brighter, more treble-heavy sounds, while lower values indicate darker, bass-rich timbres. This single feature can help distinguish between different vocal qualities and speaking styles.

Spectral spread complements the centroid by measuring how distributed the spectrum is around this center point. Together, these features provide a concise yet informative summary of the frequency content, useful for quick categorization tasks and real-time applications where computational efficiency matters.
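Treating the magnitude spectrum as a probability distribution over frequency, the centroid is its mean and the spread its standard deviation. A minimal sketch, assuming a single Hann-windowed frame and magnitude (rather than power) weighting, which is one of several conventions in use:

```python
import numpy as np

def spectral_centroid_spread(frame, sr):
    """Magnitude-weighted mean (centroid) and standard deviation (spread) of frequency, in Hz."""
    mag = np.abs(np.fft.rfft(frame * np.hanning(len(frame))))
    freqs = np.fft.rfftfreq(len(frame), d=1.0 / sr)
    weights = mag / (np.sum(mag) + 1e-12)  # normalize the spectrum to sum to 1
    centroid = np.sum(freqs * weights)
    spread = np.sqrt(np.sum((freqs - centroid) ** 2 * weights))
    return float(centroid), float(spread)

sr = 16000
t = np.arange(1024) / sr
dark = np.sin(2 * np.pi * 300 * t)            # energy concentrated at 300 Hz
bright = dark + np.sin(2 * np.pi * 3000 * t)  # add an equally strong 3 kHz component
for name, frame in [("dark", dark), ("bright", bright)]:
    c, s = spectral_centroid_spread(frame, sr)
    print(f"{name}: centroid {c:.0f} Hz, spread {s:.0f} Hz")
```

Adding the 3 kHz component pushes the centroid well above 1 kHz and widens the spread, matching the brighter-timbre description.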

Advanced Extraction Methodologies for Enhanced Precision

As vocalization analysis has matured, researchers have developed increasingly sophisticated extraction methods that capture subtler acoustic properties. These advanced techniques enable more nuanced categorization and deeper insights into vocal characteristics.

Linear Predictive Coding (LPC)

LPC models the vocal tract as an all-pole linear filter and predicts each sample as a weighted sum of the preceding samples. This approach effectively separates the source signal (vocal fold vibrations) from the filter (vocal tract resonances), providing detailed information about articulatory configurations. The resulting LPC coefficients offer compact representations of spectral envelopes, which makes them particularly useful for speech synthesis and compression.

The all-pole model makes LPC especially effective for voiced segments of speech, such as vowels, whose spectral envelopes are dominated by formant resonances. The predictor captures this systematic envelope structure, which distinguishes different phonemes and speakers, while the unpredictable part of the signal (the glottal excitation and noise) is left in the prediction residual.
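The classic way to compute LPC coefficients is the autocorrelation method solved with the Levinson-Durbin recursion. In the sketch below, the test signal is a synthetic second-order all-pole process with known coefficients (an assumption made so the output can be checked), standing in for a speech frame; on real speech one would typically window each frame first and choose an order suited to the sampling rate.

```python
import numpy as np

def lpc(frame, order):
    """LPC predictor coefficients via the autocorrelation method and Levinson-Durbin.

    Model: x[n] is approximated by sum_{k=1..order} a[k] * x[n - k].
    Returns (a[1..order], final prediction error energy).
    """
    n = len(frame)
    r = np.array([np.dot(frame[: n - k], frame[k:]) for k in range(order + 1)])
    a = np.zeros(order + 1)  # a[0] is unused; predictor coefficients live in a[1:]
    err = r[0]
    for i in range(1, order + 1):
        # Reflection coefficient for the order-i predictor
        k = (r[i] - np.dot(a[1:i], r[i - 1:0:-1])) / err
        a_prev = a.copy()
        a[i] = k
        a[1:i] = a_prev[1:i] - k * a_prev[i - 1:0:-1]
        err *= 1.0 - k * k
    return a[1:], err

# Synthetic all-pole signal: x[n] = 1.3 x[n-1] - 0.6 x[n-2] + white noise
rng = np.random.default_rng(0)
e = rng.standard_normal(20000)
x = np.zeros_like(e)
for n in range(2, len(x)):
    x[n] = 1.3 * x[n - 1] - 0.6 * x[n - 2] + e[n]
a, err = lpc(x, order=2)
print(np.round(a, 2))
```

The recovered coefficients come out close to the true values 1.3 and -0.6; the returned error term is the energy of the residual the predictor could not explain.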

Wavelet Transform Analysis

Unlike the short-time Fourier transform, which analyzes every frequency with the same fixed time-frequency resolution, wavelet transforms use a resolution that varies with frequency: short analysis windows at high frequencies give fine time detail, while long windows at low frequencies give fine frequency detail. This flexibility makes wavelets particularly effective for analyzing transient features and rapid spectral changes characteristic of consonants and voice onsets.

Wavelet decomposition breaks signals into approximation and detail coefficients at multiple scales, revealing both coarse and fine-grained temporal structures. This multi-resolution analysis captures characteristics that single-scale methods might miss, which can improve categorization accuracy for complex vocalization patterns.
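A Haar wavelet, the simplest wavelet, is enough to show approximation and detail coefficients at multiple scales. The sketch below is illustrative only, assuming a signal length divisible by 2**levels; dedicated libraries such as PyWavelets offer smoother wavelet families and handle arbitrary lengths.

```python
import numpy as np

def haar_dwt(x, levels):
    """Multi-level Haar DWT; returns [cA_L, cD_L, ..., cD_1], coarsest first.

    Assumes len(x) is divisible by 2**levels.
    """
    approx = np.asarray(x, dtype=float)
    details = []
    for _ in range(levels):
        even, odd = approx[0::2], approx[1::2]
        details.append((even - odd) / np.sqrt(2.0))  # detail: scaled local differences
        approx = (even + odd) / np.sqrt(2.0)         # approximation: scaled local averages
    return [approx] + details[::-1]

def haar_idwt(coeffs):
    """Invert haar_dwt by undoing one level at a time, coarsest first."""
    approx = coeffs[0]
    for detail in coeffs[1:]:
        out = np.empty(2 * len(approx))
        out[0::2] = (approx + detail) / np.sqrt(2.0)
        out[1::2] = (approx - detail) / np.sqrt(2.0)
        approx = out
    return approx

sr = 8000
t = np.arange(1024) / sr
x = 0.5 * np.sin(2 * np.pi * 100 * t)
x[600] += 1.0  # sharp transient, e.g. a plosive burst
cA3, cD3, cD2, cD1 = haar_dwt(x, levels=3)
print("transient near sample", 2 * int(np.argmax(np.abs(cD1))))  # 600
```

The finest-scale detail coefficients pinpoint the injected click, while the coarse approximation keeps the slow sinusoid, and the transform remains perfectly invertible.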

🎯 Optimizing Feature Selection for Categorization Tasks

Extracting features represents only half the challenge; selecting the most relevant features for specific categorization tasks is equally crucial. Not all features contribute equally to classification accuracy, and including irrelevant or redundant features can actually degrade performance while increasing computational costs.

Feature selection strategies range from simple statistical measures to sophisticated machine learning approaches. Correlation analysis identifies features that strongly relate to target categories while remaining uncorrelated with each other, reducing redundancy. Information-theoretic methods like mutual information quantify how much knowing a feature value reduces uncertainty about the category, providing principled selection criteria.
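Mutual information can be estimated crudely by binning a continuous feature and comparing the joint and marginal histograms. The sketch assumes equal-width bins (10 by default, an arbitrary choice); this plug-in estimate is biased upward for small samples, and scikit-learn’s `mutual_info_classif` offers a nearest-neighbor estimator instead.

```python
import numpy as np

def mutual_information(feature, labels, n_bins=10):
    """Estimate I(feature; label) in bits by discretizing the feature into equal-width bins."""
    edges = np.histogram_bin_edges(feature, bins=n_bins)
    f_bin = np.digitize(feature, edges[1:-1])  # bin index 0..n_bins-1
    classes = np.unique(labels)
    joint = np.zeros((n_bins, len(classes)))
    for j, c in enumerate(classes):
        joint[:, j] = np.bincount(f_bin[labels == c], minlength=n_bins)
    joint /= joint.sum()                       # joint probability table
    p_f = joint.sum(axis=1, keepdims=True)     # marginal over feature bins
    p_c = joint.sum(axis=0, keepdims=True)     # marginal over classes
    nz = joint > 0                             # treat 0 * log 0 as 0
    return float(np.sum(joint[nz] * np.log2(joint[nz] / (p_f @ p_c)[nz])))

rng = np.random.default_rng(0)
labels = rng.integers(0, 2, size=2000)
informative = labels + 0.1 * rng.standard_normal(2000)  # tracks the label
irrelevant = rng.standard_normal(2000)                  # independent of the label
print(f"{mutual_information(informative, labels):.3f} bits vs "
      f"{mutual_information(irrelevant, labels):.3f} bits")
```

The informative feature scores close to 1 bit (the entropy of a roughly balanced binary label), while the irrelevant one scores near zero.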

Dimensionality Reduction Techniques

When dealing with high-dimensional feature spaces, dimensionality reduction becomes essential. Principal Component Analysis (PCA) transforms features into uncorrelated components ordered by variance explained, allowing retention of only the most informative dimensions. Linear Discriminant Analysis (LDA) takes a supervised approach, finding projections that maximize between-category separation while minimizing within-category variance.

These techniques not only reduce computational burden but can also improve categorization performance by eliminating noise and focusing on the most discriminative information. The optimal approach depends on dataset characteristics, computational constraints, and specific application requirements.
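The contrast between unsupervised PCA and supervised LDA is easy to see on synthetic data. This NumPy sketch computes PCA from the SVD of centered data and, for the two-class case only, the Fisher discriminant direction; the data set is invented so that the highest-variance feature carries no class information. In practice, scikit-learn’s `PCA` and `LinearDiscriminantAnalysis` cover the general case.

```python
import numpy as np

def pca(X, n_components):
    """Project rows of X onto the top principal components (SVD of the centered data)."""
    Xc = X - X.mean(axis=0)
    _, s, vt = np.linalg.svd(Xc, full_matrices=False)
    explained = s ** 2 / np.sum(s ** 2)  # variance ratio per component, descending
    return Xc @ vt[:n_components].T, explained[:n_components]

def fisher_lda_direction(X, y):
    """Two-class Fisher LDA: w is proportional to S_w^-1 (mu1 - mu0)."""
    X0, X1 = X[y == 0], X[y == 1]
    mu0, mu1 = X0.mean(axis=0), X1.mean(axis=0)
    s_w = (X0 - mu0).T @ (X0 - mu0) + (X1 - mu1).T @ (X1 - mu1)  # within-class scatter
    w = np.linalg.solve(s_w, mu1 - mu0)
    return w / np.linalg.norm(w)

rng = np.random.default_rng(0)
n = 500
y = np.repeat([0, 1], n)
X = rng.standard_normal((2 * n, 5))
X[:, 0] *= 10.0     # large variance, unrelated to the class
X[:, 1] += 2.0 * y  # modest variance, but it separates the classes
Z, ratio = pca(X, n_components=2)
w = fisher_lda_direction(X, y)
print(np.round(ratio, 3))
print(np.round(w, 2))
```

PCA ranks the noisy high-variance feature first, while LDA puts nearly all of its weight on the feature that actually separates the classes.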

Real-World Applications Transforming Industries

The practical applications of vocalization feature extraction span an impressive range of industries, each leveraging these techniques to solve unique challenges and create innovative solutions.

Healthcare and Clinical Diagnostics

Researchers and clinicians are increasingly exploring vocal analysis for non-invasive diagnostic screening. Voice characteristics may reveal early signs of neurological conditions such as Parkinson’s disease, where subtle changes in vocal control can precede more obvious motor symptoms. Feature extraction techniques quantify tremor frequencies, reduced pitch variation, and altered articulation patterns that may track disease progression.

Mental health assessment may also benefit from vocal analysis, as depression, anxiety, and stress are associated with measurable acoustic changes. Reduced pitch range, slower speech rate, and altered prosody are being studied as objective indicators that complement self-reports and clinical observations.
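Two of the measures mentioned above, pitch variation and tremor frequency, can be computed from an F0 contour produced by a pitch tracker. The sketch below assumes a contour of voiced frames only, sampled at 100 values per second, and searches an assumed 2 to 15 Hz band for tremor; it illustrates the arithmetic and is not a validated clinical measure.

```python
import numpy as np

def f0_variability_semitones(f0_hz):
    """Standard deviation of an F0 contour in semitones, relative to its median."""
    semitones = 12.0 * np.log2(f0_hz / np.median(f0_hz))
    return float(np.std(semitones))

def dominant_modulation_hz(f0_hz, frame_rate, fmin=2.0, fmax=15.0):
    """Frequency (Hz) of the strongest periodic modulation of the F0 contour in [fmin, fmax]."""
    contour = f0_hz - np.mean(f0_hz)  # remove the mean pitch level
    spectrum = np.abs(np.fft.rfft(contour))
    freqs = np.fft.rfftfreq(len(contour), d=1.0 / frame_rate)
    band = (freqs >= fmin) & (freqs <= fmax)
    return float(freqs[band][np.argmax(spectrum[band])])

frame_rate = 100.0                              # one F0 value per 10 ms (assumed)
t = np.arange(400) / frame_rate                 # 4 s of voiced frames
f0 = 120.0 + 3.0 * np.sin(2 * np.pi * 5.0 * t)  # synthetic contour with a 5 Hz tremor
print(f"F0 SD: {f0_variability_semitones(f0):.2f} st, "
      f"modulation: {dominant_modulation_hz(f0, frame_rate):.1f} Hz")
```

Expressing variation in semitones makes it comparable across speakers with different average pitch, since a semitone is the same ratio at any F0.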

Security and Biometric Authentication

Voice-based biometric systems provide convenient authentication by analyzing characteristics that derive from each speaker’s vocal anatomy and speaking habits. Unlike a password, a voice cannot be forgotten, but it can be recorded, replayed, or synthesized, so robust systems pair speaker modeling with anti-spoofing checks. Advanced feature extraction captures not just what is said but how it’s said, creating multi-layered security that is harder to defeat.

Modern systems combine multiple feature types (fundamental frequency patterns, formant characteristics, and prosodic elements) to build speaker models that are more resistant to mimicry and environmental variation. Continuous authentication monitors vocal characteristics throughout a conversation and can flag a change of speaker, detecting impostors even mid-session.
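A toy version of combining feature types into a speaker model: average each recording’s per-frame features, standardize them against population statistics so that features on different scales are comparable, and accept a claimed identity when the cosine similarity to the enrolled template reaches a threshold. Everything here is hypothetical (the feature set, the population statistics, the 0.7 threshold, and the synthetic frames); production systems use learned speaker embeddings and calibrated scoring.

```python
import numpy as np

def speaker_vector(frames, pop_mean, pop_std):
    """Average per-frame features, then standardize with population statistics."""
    return (frames.mean(axis=0) - pop_mean) / pop_std

def cosine_score(a, b):
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b) + 1e-12))

def verify(enrolled, probe, threshold=0.7):
    """Accept the identity claim if the probe is close enough to the enrolled template."""
    return cosine_score(enrolled, probe) >= threshold

rng = np.random.default_rng(0)
# Hypothetical population statistics for [F0 (Hz), F1 (Hz), F2 (Hz), energy (dB)]
pop_mean = np.array([150.0, 500.0, 1500.0, 60.0])
pop_std = np.array([40.0, 80.0, 200.0, 6.0])

def fake_frames(center, n=200):
    """Synthetic per-frame features scattered around a speaker's typical values."""
    return center + rng.normal(0.0, 0.3 * pop_std, size=(n, 4))

alice = np.array([210.0, 560.0, 1750.0, 64.0])
bob = np.array([110.0, 430.0, 1300.0, 55.0])
enrolled = speaker_vector(fake_frames(alice), pop_mean, pop_std)
print(verify(enrolled, speaker_vector(fake_frames(alice), pop_mean, pop_std)))  # True
print(verify(enrolled, speaker_vector(fake_frames(bob), pop_mean, pop_std)))    # False
```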

Customer Service and Sentiment Analysis

Call centers and customer service platforms employ vocal feature extraction to assess customer emotions in real-time, enabling adaptive response strategies. By detecting frustration, satisfaction, or confusion from acoustic cues, systems can route calls appropriately, alert supervisors to escalating situations, or provide agents with emotional context beyond word content.

This emotional context can help turn transactional exchanges into more empathetic conversations, which may improve satisfaction and resolution rates. Automated analysis also handles call volumes that would overwhelm human supervisors, enabling consistent quality monitoring across thousands of daily interactions.

⚙️ Implementation Best Practices and Considerations

Successfully implementing vocalization feature extraction requires careful attention to several critical factors that significantly impact system performance and reliability.

Audio Preprocessing and Quality Control

Raw audio recordings rarely provide ideal input for feature extraction. Preprocessing steps such as noise reduction, normalization, and segmentation can substantially improve feature quality and extraction reliability. High-pass filtering removes low-frequency rumble, while adaptive noise cancellation reduces background interference, ideally without distorting vocal characteristics (aggressive noise suppression can introduce artifacts of its own).
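A minimal preprocessing sketch: a first-order high-pass filter to remove DC offset and low-frequency rumble, followed by peak normalization. The 80 Hz cutoff and 0.9 target peak are assumed values, and a first-order filter rolls off gently; real pipelines often use steeper filters, for example a Butterworth design from `scipy.signal`.

```python
import numpy as np

def highpass_first_order(x, sr, cutoff_hz=80.0):
    """First-order RC high-pass filter: y[n] = a * (y[n-1] + x[n] - x[n-1])."""
    rc = 1.0 / (2.0 * np.pi * cutoff_hz)
    a = rc / (rc + 1.0 / sr)
    y = np.zeros(len(x))
    for n in range(1, len(x)):
        y[n] = a * (y[n - 1] + x[n] - x[n - 1])
    return y

def peak_normalize(x, target=0.9):
    """Scale so the largest absolute sample equals `target` (silence is returned unchanged)."""
    peak = np.max(np.abs(x))
    return x if peak == 0 else x * (target / peak)

sr = 16000
t = np.arange(sr) / sr
# Synthetic input: DC offset + 20 Hz rumble + a 500 Hz "voice" component
x = 0.3 + 0.5 * np.sin(2 * np.pi * 20 * t) + 0.2 * np.sin(2 * np.pi * 500 * t)
y = highpass_first_order(x, sr)
z = peak_normalize(y)
print(f"mean before: {x.mean():.3f}, after: {y[sr // 2:].mean():.4f}")
```

The DC offset disappears within a few milliseconds and the 20 Hz rumble is attenuated by roughly 12 dB, while the 500 Hz component passes almost unchanged.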

Segmentation divides continuous audio into meaningful units for analysis, whether individual phonemes, words, or utterances. Accurate boundaries ensure features represent complete vocal gestures rather than artificial fragments, improving categorization consistency.
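Utterance-level segmentation can be approximated with a frame-energy threshold, closing a segment only after a sufficiently long run of quiet frames. This is a simple voice-activity heuristic, not a phoneme or word segmenter; the threshold of 35 dB below the loudest frame and the 200 ms minimum pause are assumed values that would need tuning for real recordings.

```python
import numpy as np

def energy_segments(x, sr, frame_ms=25, hop_ms=10, threshold_db=-35.0, min_silence_frames=20):
    """Return (start_s, end_s) speech segments found with a frame-energy threshold.

    threshold_db is relative to the loudest frame; all defaults are assumed values.
    """
    frame, hop = int(sr * frame_ms / 1000), int(sr * hop_ms / 1000)
    n_frames = 1 + (len(x) - frame) // hop
    energy = np.array([np.mean(x[i * hop:i * hop + frame] ** 2) for i in range(n_frames)])
    db = 10.0 * np.log10(energy + 1e-12)
    active = db > db.max() + threshold_db
    segments, start, silence = [], None, 0
    for i, is_speech in enumerate(active):
        if is_speech:
            if start is None:
                start = i  # first active frame opens a segment
            silence = 0
        elif start is not None:
            silence += 1
            if silence >= min_silence_frames:
                # Pause is long enough: close the segment after its last active frame
                segments.append((start, i - silence + 1))
                start, silence = None, 0
    if start is not None:
        segments.append((start, n_frames - silence))
    # Convert frame indices (end exclusive) to seconds
    return [(s * hop / sr, ((e - 1) * hop + frame) / sr) for s, e in segments]

sr = 16000
rng = np.random.default_rng(0)

def tone(sec):
    return 0.5 * np.sin(2 * np.pi * 220 * np.arange(int(sec * sr)) / sr)

def quiet(sec):
    return 0.001 * rng.standard_normal(int(sec * sr))

# 0.5 s quiet, 1 s tone, 0.5 s quiet, 0.5 s tone, 0.5 s quiet (stand-in for speech)
x = np.concatenate([quiet(0.5), tone(1.0), quiet(0.5), tone(0.5), quiet(0.5)])
print(energy_segments(x, sr))
```

On this input the function returns two segments starting near 0.5 s and 2.0 s, slightly widened because frames that only partly overlap a tone already count as active.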

Handling Variability and Robustness

Vocal characteristics vary naturally across recording conditions, emotional states, health status, and speaking contexts. Robust systems must handle this variability without compromising categorization accuracy. Normalization techniques standardize features across different scales and distributions, while augmentation strategies during training expose models to realistic variations.
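Two of the techniques just mentioned, sketched in NumPy: per-utterance cepstral mean and variance normalization (CMVN), a common way to standardize features such as MFCCs, and additive-noise augmentation at a chosen signal-to-noise ratio. The random feature matrix and white noise are placeholders; real augmentation typically mixes in recorded background noise.

```python
import numpy as np

def cmvn(features, eps=1e-8):
    """Per-utterance mean and variance normalization of a (n_frames, n_features) matrix."""
    mu = features.mean(axis=0)
    sigma = features.std(axis=0)
    return (features - mu) / (sigma + eps)

def add_noise_at_snr(clean, noise, snr_db):
    """Mix `noise` into `clean`, scaled so the signal-to-noise ratio equals `snr_db`."""
    p_clean = np.mean(clean ** 2)
    p_noise = np.mean(noise ** 2)
    scale = np.sqrt(p_clean / (p_noise * 10 ** (snr_db / 10)))
    return clean + scale * noise

rng = np.random.default_rng(0)
feats = rng.normal(5.0, 3.0, size=(300, 13))  # placeholder for 300 frames of 13 MFCCs
norm = cmvn(feats)

sr = 16000
clean = np.sin(2 * np.pi * 200 * np.arange(sr) / sr)
noise = rng.standard_normal(sr)  # white noise as a placeholder background
noisy = add_noise_at_snr(clean, noise, snr_db=10.0)
snr = 10 * np.log10(np.mean(clean ** 2) / np.mean((noisy - clean) ** 2))
print(f"measured SNR: {snr:.2f} dB")  # 10.00 dB
```

The measured SNR matches the request because the noise is scaled so its power equals the clean power divided by 10^(SNR/10).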

Cross-validation on diverse datasets, with folds split by speaker so that no voice appears in both training and test data, helps verify that systems generalize beyond specific recording conditions or speaker populations. Testing across microphone types, acoustic environments, and demographic groups identifies weaknesses before deployment.
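One way to test generalization to unseen speakers is to assign whole speakers, not individual recordings, to cross-validation folds. A minimal sketch, assuming each recording is tagged with a speaker ID; scikit-learn’s `GroupKFold` provides the same guarantee.

```python
import numpy as np

def speaker_disjoint_folds(speaker_ids, n_folds=5, seed=0):
    """Yield (train_idx, test_idx) pairs such that no speaker appears on both sides."""
    speaker_ids = np.asarray(speaker_ids)
    speakers = np.unique(speaker_ids)
    rng = np.random.default_rng(seed)
    rng.shuffle(speakers)  # randomize which speakers land in which fold
    for fold_speakers in np.array_split(speakers, n_folds):
        test_mask = np.isin(speaker_ids, fold_speakers)
        yield np.where(~test_mask)[0], np.where(test_mask)[0]

ids = np.repeat([f"spk{i}" for i in range(10)], 20)  # 10 speakers x 20 recordings each
for train_idx, test_idx in speaker_disjoint_folds(ids, n_folds=5):
    overlap = set(ids[train_idx]) & set(ids[test_idx])
    print(len(train_idx), len(test_idx), overlap)  # 160 40 set() for every fold
```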

🚀 Emerging Trends and Future Directions

The field of vocalization feature extraction continues evolving rapidly, with several exciting trends promising even more powerful and accessible capabilities.

Deep Learning and Automatic Feature Learning

Traditional feature extraction relies on human-engineered representations based on acoustic theory. Deep learning approaches, particularly convolutional and recurrent neural networks, instead learn features directly from raw audio or spectrograms. Given enough training data, these learned representations often capture subtle patterns that handcrafted features miss, and they now deliver state-of-the-art accuracy on many categorization tasks.

Self-supervised learning techniques enable models to learn from vast amounts of unlabeled audio, developing rich representations without expensive manual annotation. Transfer learning allows pre-trained models to adapt to specialized tasks with minimal task-specific data, democratizing access to state-of-the-art performance.

Multimodal Integration

Combining vocal features with other modalities—facial expressions, physiological signals, linguistic content—creates richer, more robust categorization systems. Multimodal fusion leverages complementary information sources, with each modality providing unique perspectives that improve overall understanding.

This integration proves particularly valuable when individual modalities provide ambiguous or incomplete information. Visual cues such as lip movements can disambiguate similar-sounding phonemes, while physiological signals can reveal emotional states that vocal characteristics only hint at.
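One simple fusion strategy is late (decision-level) fusion: each modality produces class probabilities, and a weighted average combines them. The sketch mirrors the phoneme example with invented numbers; the modality weights are hypothetical and would normally be tuned on validation data, and early fusion (concatenating features before classification) is a common alternative.

```python
import numpy as np

def late_fusion(prob_by_modality, weights):
    """Weighted average of per-modality class probabilities (rows: samples, columns: classes)."""
    total = sum(weights.values())
    fused = sum(w * prob_by_modality[m] for m, w in weights.items()) / total
    return fused / fused.sum(axis=1, keepdims=True)  # renormalize each row to sum to 1

classes = ["/b/", "/d/"]
probs = {
    "audio": np.array([[0.5, 0.5]]),   # acoustically ambiguous
    "visual": np.array([[0.9, 0.1]]),  # visible lip closure favors /b/
}
fused = late_fusion(probs, weights={"audio": 0.6, "visual": 0.4})
print(classes[int(np.argmax(fused[0]))], fused.round(2))  # /b/
```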

Ethical Considerations and Privacy Protection

As vocalization analysis grows more powerful and pervasive, ethical considerations become increasingly important. Voice contains deeply personal information about identity, emotional state, health, and demographic characteristics, raising significant privacy concerns.

Transparent consent mechanisms must inform users what vocal information is collected, how it’s analyzed, and for what purposes. Anonymization techniques that preserve analytical utility while protecting individual identity represent an active research area with important practical implications.

Bias in training data and feature selection can perpetuate unfair discrimination, particularly affecting underrepresented groups. Careful dataset curation, fairness-aware algorithm design, and regular bias auditing help ensure equitable system performance across diverse populations.
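A basic bias audit compares a model’s accuracy across demographic groups. The sketch below, with invented labels and group tags, reports per-group accuracy and the largest gap between groups; for biometric systems, per-group false-acceptance and false-rejection rates matter just as much.

```python
import numpy as np

def per_group_accuracy(y_true, y_pred, groups):
    """Accuracy for each group, plus the largest accuracy gap between any two groups."""
    y_true, y_pred, groups = map(np.asarray, (y_true, y_pred, groups))
    acc = {str(g): float(np.mean(y_true[groups == g] == y_pred[groups == g]))
           for g in np.unique(groups)}
    return acc, max(acc.values()) - min(acc.values())

y_true = [1, 0, 1, 1, 0, 1, 0, 1]
y_pred = [1, 0, 1, 1, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]  # hypothetical group labels
acc, gap = per_group_accuracy(y_true, y_pred, groups)
print(acc, gap)  # {'A': 1.0, 'B': 0.25} 0.75
```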

🎓 Building Expertise in Vocalization Analysis

Developing proficiency in feature extraction requires a multidisciplinary foundation spanning signal processing, machine learning, linguistics, and domain-specific knowledge. Academic programs increasingly offer specialized coursework combining theoretical foundations with practical implementation skills.

Open-source libraries and frameworks have dramatically lowered the barrier to entry, enabling rapid prototyping and experimentation. In Python, librosa, SciPy, and scikit-learn provide comprehensive toolkits for audio processing and machine learning, while TensorFlow and PyTorch support deep learning approaches.

Community resources—tutorials, competitions, and collaborative projects—accelerate learning through hands-on experience with real-world challenges. Kaggle competitions and shared datasets provide benchmarks for comparing approaches and identifying best practices.

Maximizing Impact Through Strategic Implementation

Organizations seeking to leverage vocalization feature extraction should approach implementation strategically, starting with clearly defined objectives and success metrics. Proof-of-concept projects on focused problems build confidence and demonstrate value before scaling to enterprise-wide deployment.

Cross-functional teams combining domain experts, data scientists, and engineers ensure technical solutions address real business needs while remaining practically feasible. Iterative development with continuous user feedback refines systems to meet actual usage patterns rather than theoretical requirements.

Investment in data infrastructure—collection pipelines, storage systems, annotation tools—pays dividends across multiple projects and enables rapid experimentation with new approaches. Quality data represents the foundation upon which all effective vocalization analysis systems are built.

The transformative potential of vocalization feature extraction continues expanding as techniques mature and applications proliferate. From enhancing human-computer interaction to enabling breakthrough medical diagnostics, the precise categorization of vocal characteristics is reshaping how we understand and utilize the richest communication channel humans possess. Organizations and individuals who master these techniques position themselves at the forefront of the audio intelligence revolution, unlocking insights previously hidden within the complex acoustic tapestry of human vocalization.

Toni Santos is a bioacoustic researcher and conservation technologist specializing in the study of animal communication systems, acoustic monitoring infrastructures, and the sonic landscapes embedded in natural ecosystems. Through an interdisciplinary and sensor-focused lens, Toni investigates how wildlife encodes behavior, territory, and survival into the acoustic world, across species, habitats, and conservation challenges.

His work is grounded in a fascination with animals not only as lifeforms, but as carriers of acoustic meaning. From endangered vocalizations to soundscape ecology and bioacoustic signal patterns, Toni uncovers the technological and analytical tools through which researchers preserve their understanding of the acoustic unknown.

With a background in applied bioacoustics and conservation monitoring, Toni blends signal analysis with field-based research to reveal how sounds are used to track presence, monitor populations, and decode ecological knowledge. As the creative mind behind Nuvtrox, Toni curates indexed communication datasets, sensor-based monitoring studies, and acoustic interpretations that revive the deep ecological ties between fauna, soundscapes, and conservation science.

His work is a tribute to the archived vocal diversity of Animal Communication Indexing, the tracked movements of Applied Bioacoustics Tracking, the ecological richness of Conservation Soundscapes, and the layered detection networks of Sensor-based Monitoring.

Whether you're a bioacoustic analyst, conservation researcher, or curious explorer of acoustic ecology, Toni invites you to explore the hidden signals of wildlife communication: one call, one sensor, one soundscape at a time.