Tracking uncertainty is one of the most critical yet overlooked data challenges facing modern businesses, because it quietly undermines the analytics behind high-stakes budget and product decisions.

🔍 Why Tracking Uncertainty Matters More Than Ever

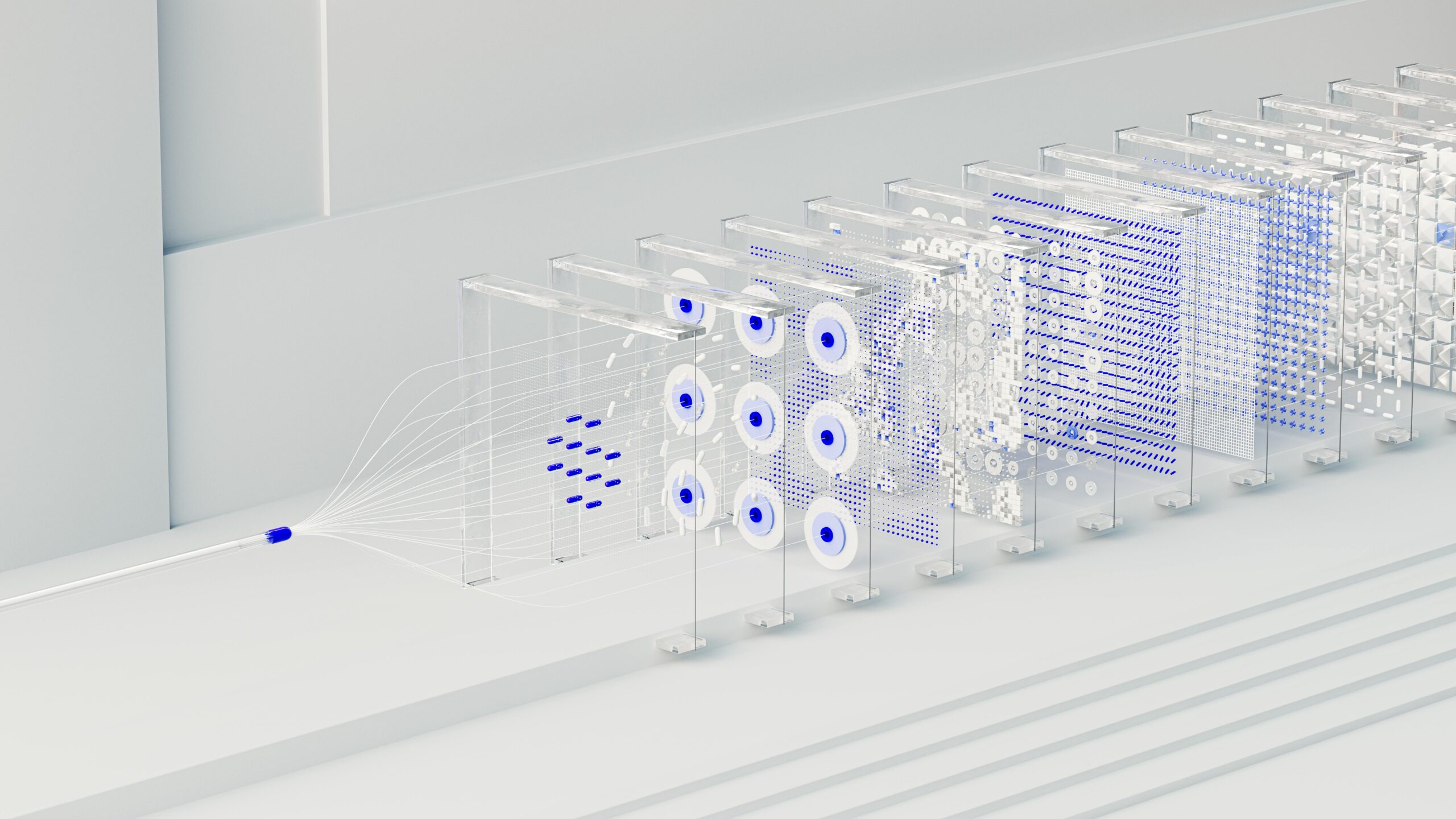

In today’s data-driven landscape, organizations rely heavily on analytics to guide strategic decisions. However, the foundation of these decisions, the data itself, is often plagued with inconsistencies, gaps, and inaccuracies. The result is tracking uncertainty: the degree of doubt surrounding the accuracy and reliability of collected data points.

Tracking uncertainty manifests in multiple ways: from missing event triggers and duplicate entries to misattributed conversions and privacy-related data loss. As businesses invest more heavily in data infrastructure, understanding and mitigating this uncertainty becomes paramount to maintaining competitive advantage.

The cost of ignoring tracking uncertainty extends beyond flawed reports. It erodes stakeholder confidence, leads to misallocated budgets, and can result in strategic missteps that compound over time. Marketing teams might optimize campaigns based on incomplete attribution data, while product managers could prioritize features based on distorted user behavior patterns.

📊 Common Sources of Tracking Uncertainty

Understanding where uncertainty originates is the first step toward addressing it effectively. Most tracking problems stem from a combination of technical limitations, implementation errors, and environmental factors beyond direct control.

Technical Implementation Challenges

Improperly configured tracking codes represent one of the most prevalent sources of uncertainty. When analytics tags fire inconsistently or fail to capture essential parameters, the resulting data gaps create blind spots in your analysis. JavaScript errors, timing issues, and race conditions can prevent tracking scripts from executing properly, especially on complex web applications with dynamic content loading.

Integration problems between different platforms compound these issues. When your CRM doesn’t properly sync with your analytics platform, or when marketing automation tools send conflicting data points, reconciling these discrepancies becomes a time-consuming challenge that introduces additional uncertainty.

Browser and Privacy-Related Limitations

The evolving privacy landscape has fundamentally altered data collection capabilities. Intelligent Tracking Prevention (ITP) in Safari, Enhanced Tracking Protection in Firefox, and similar features in other browsers actively block or limit tracking mechanisms. Consent requirements under GDPR, the ePrivacy Directive, and similar regulations mean that a significant portion of your audience may decline tracking entirely, so their behavior never reaches your reports.

Ad blockers further reduce data completeness, with some estimates suggesting 25-40% of internet users employ these tools. This creates a systematic bias in your data, as the blocked users likely exhibit different behaviors than those who don’t use ad blockers.

Cross-Device and Cross-Platform Fragmentation

Modern customer journeys rarely occur on a single device or platform. Users might discover your brand on mobile, research on desktop, and convert via tablet. Without robust cross-device tracking, these touchpoints appear as separate users, inflating your audience counts while deflating conversion rates and distorting attribution models.

App-to-web transitions present particularly thorny challenges. When users move from your mobile application to your website or vice versa, maintaining consistent user identification requires sophisticated technical implementation that many organizations struggle to achieve.

🎯 Quantifying Your Tracking Uncertainty

Before you can reduce uncertainty, you need to measure it. Establishing baseline metrics for data quality enables you to track improvements over time and justify investments in tracking infrastructure.

Data Completeness Audits

Start by examining what percentage of expected events are actually being captured. Create a matrix of critical user actions—page views, button clicks, form submissions, purchases—and verify that each generates the expected tracking events. Run controlled tests where you perform specific actions and verify that every step appears correctly in your analytics platform.

Calculate your data capture rate for each critical event type. If you expect 1,000 form submissions based on server logs but only see 850 in your analytics, you have a 15% data loss rate for that event. Document these gaps systematically across all tracking points.
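The arithmetic above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed tool: the event names and the second pair of counts are made up, and `capture_rate` is a hypothetical helper.

```python
def capture_rate(expected: int, observed: int) -> float:
    """Share of expected events that reached analytics (0.0 to 1.0)."""
    if expected <= 0:
        raise ValueError("expected must be positive")
    return observed / expected

# Hypothetical counts: (expected from server logs, observed in analytics).
events = {
    "form_submit": (1000, 850),
    "purchase": (400, 388),
}

# Data loss per event type, as a percentage rounded to one decimal place.
loss_report = {
    name: round((1 - capture_rate(expected, observed)) * 100, 1)
    for name, (expected, observed) in events.items()
}
print(loss_report)
```

Keeping one row per critical event makes it easy to rerun the same audit after each tracking change and compare the loss rates over time.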

Cross-Platform Validation

Compare data across multiple sources to identify discrepancies. Your payment processor knows exactly how many transactions occurred, providing a gold standard against which to measure your analytics data. Similarly, server logs offer an independent verification source for many web-based events.

When discrepancies appear—and they will—investigate whether the differences stem from legitimate filtering (like bot traffic exclusion) or represent genuine tracking problems. Create a reconciliation document that explains expected variances and flags unexpected ones for investigation.
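One way to start a reconciliation document is to compute the relative gap between a trusted source and your analytics, then flag anything above a tolerance. The sketch below assumes a 5% tolerance and invented counts; `reconcile` is a hypothetical helper, and the right tolerance depends on how much legitimate filtering you expect.

```python
def reconcile(reference: dict, analytics: dict, tolerance: float = 0.05) -> list:
    """Return (metric, reference, analytics, relative_gap) for gaps above tolerance."""
    flagged = []
    for metric, ref_count in reference.items():
        seen = analytics.get(metric, 0)
        if ref_count:
            gap = abs(ref_count - seen) / ref_count
        else:
            # No reference events: any analytics events count as a full gap.
            gap = 1.0 if seen else 0.0
        if gap > tolerance:
            flagged.append((metric, ref_count, seen, round(gap, 3)))
    return flagged

# Hypothetical counts from a payment processor and an analytics platform.
processor = {"transactions": 1200, "refunds": 40}
analytics = {"transactions": 1100, "refunds": 39}

flagged = reconcile(processor, analytics)
print(flagged)
```

Gaps under the tolerance are treated as expected variance; everything returned in `flagged` is a candidate for investigation.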

Attribution Confidence Scoring

Not all attributed conversions deserve equal confidence. A conversion that occurred seconds after clicking an ad deserves higher confidence than one attributed to an impression from three weeks prior. Develop a confidence scoring system that weights conversions based on factors like time decay, interaction type, and available touchpoint data.

This approach acknowledges uncertainty explicitly rather than treating all conversions as equally reliable. It enables more nuanced decision-making where high-confidence conversions receive appropriate weight in optimization algorithms.
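A confidence score of this kind could be as simple as exponential time decay multiplied by a weight for the interaction type. The sketch below is one possible shape, not an established standard: the 72-hour half-life, the weights, and the function name are all assumptions to be tuned against your own data.

```python
# Hypothetical weights per interaction type; clicks are trusted more than views.
INTERACTION_WEIGHT = {"click": 1.0, "view": 0.4}
DEFAULT_WEIGHT = 0.2  # assumed weight for unknown interaction types

def attribution_confidence(hours_since_touch: float, interaction: str,
                           half_life_hours: float = 72.0) -> float:
    """Score in (0, 1]: the score halves every half_life_hours, scaled by interaction weight."""
    decay = 0.5 ** (hours_since_touch / half_life_hours)
    return INTERACTION_WEIGHT.get(interaction, DEFAULT_WEIGHT) * decay

# A click seconds before converting versus an impression three weeks earlier.
recent_click = attribution_confidence(0.01, "click")
old_view = attribution_confidence(21 * 24, "view")
print(round(recent_click, 4), round(old_view, 4))
```

Scores like these can then be used as weights when summing conversions per channel, so that weakly supported attributions count for less.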

🛠️ Practical Strategies to Reduce Tracking Uncertainty

Once you’ve quantified existing uncertainty, implement systematic improvements to enhance data reliability. These strategies span technical implementation, organizational processes, and analytical approaches.

Implementing Server-Side Tracking

Server-side tracking represents one of the most effective methods to reduce browser-related uncertainty. By processing tracking requests through your own servers before forwarding them to analytics platforms, you gain greater control and reliability. Server-side implementations are far less exposed to ad blockers and browser restrictions (events generated entirely on the server, such as confirmed orders, bypass the browser altogether), execute more reliably, and provide opportunities for data enrichment before transmission.

Server-side tagging in Google Tag Manager and similar solutions enable you to maintain robust tracking even as browser restrictions tighten. While implementation requires more technical expertise than client-side tracking, the data quality improvements justify the investment for organizations serious about analytics accuracy.

Establishing Data Quality Monitoring

Automated monitoring systems alert you immediately when tracking breaks, minimizing the duration of data gaps. Configure alerts for sudden drops in event volume, unexpected changes in traffic patterns, or critical events failing to fire. These early warning systems prevent small problems from becoming large data gaps.

Build dashboards specifically focused on data quality metrics rather than business metrics. Track daily event volumes, error rates, and completion rates for multi-step processes. Review these dashboards regularly to catch subtle degradation before it significantly impacts decision-making.
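A basic volume alert compares the latest day against a trailing average. The following sketch assumes daily event counts are already available as a list; the 30% threshold, the 7-day window, and the function name are illustrative choices rather than recommended defaults.

```python
from statistics import mean

def volume_drop_alert(daily_counts: list, drop_threshold: float = 0.3,
                      window: int = 7) -> bool:
    """True when the latest day falls more than drop_threshold below the trailing mean."""
    if len(daily_counts) < window + 1:
        return False  # not enough history to judge
    baseline = mean(daily_counts[-(window + 1):-1])  # the window days before the latest
    if baseline == 0:
        return False
    return (baseline - daily_counts[-1]) / baseline > drop_threshold

# Hypothetical daily counts for one event; the last value is "today".
healthy = [980, 1010, 1005, 990, 1020, 1000, 995, 1003]
broken = [980, 1010, 1005, 990, 1020, 1000, 995, 410]

print(volume_drop_alert(healthy), volume_drop_alert(broken))
```

A fixed window ignores weekly seasonality, so in practice you may prefer to compare against the same weekday in previous weeks.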

Developing a Measurement Plan

A comprehensive measurement plan documents exactly what should be tracked, how it should be implemented, and what each data point means. This living document serves as the single source of truth for your tracking implementation, reducing confusion and preventing tracking drift over time.

Include specific technical requirements for each tracked event: what parameters should be included, what values are acceptable, and how the event should be triggered. When developers reference this plan during implementation, tracking consistency improves dramatically.
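Part of a measurement plan can be kept in machine-readable form so that incoming events are checked against it automatically. The sketch below assumes a very small plan format invented for illustration; the event name, parameters, and allowed values are hypothetical.

```python
# Hypothetical plan entry: required parameters and allowed values per event.
MEASUREMENT_PLAN = {
    "purchase": {
        "required": {"order_id", "value", "currency"},
        "allowed": {"currency": {"USD", "EUR", "GBP"}},
    },
}

def validate_event(name: str, params: dict) -> list:
    """Return a list of problems; an empty list means the event conforms to the plan."""
    spec = MEASUREMENT_PLAN.get(name)
    if spec is None:
        return [f"event '{name}' is not in the measurement plan"]
    missing = sorted(spec["required"] - set(params))
    problems = [f"missing parameter '{p}'" for p in missing]
    for key, allowed in spec["allowed"].items():
        if key in params and params[key] not in allowed:
            problems.append(f"unexpected value for '{key}': {params[key]!r}")
    return problems

print(validate_event("purchase", {"order_id": "A1", "currency": "JPY"}))
```

Running a check like this in automated tests or on a sample of live events turns the plan from a reference document into an enforced contract.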

📈 Building Confidence Through Statistical Approaches

Even with perfect tracking implementation—an impossible ideal—statistical uncertainty remains inherent in data analysis. Acknowledging and quantifying this uncertainty actually increases confidence in your conclusions by providing appropriate context.

Confidence Intervals Over Point Estimates

Instead of reporting that your conversion rate is exactly 3.2%, express it as “between 2.9% and 3.5% with 95% confidence.” This more accurate representation acknowledges sampling uncertainty and prevents overconfidence in minor fluctuations. When trends fall within expected confidence intervals, you avoid the mistake of reacting to random noise as if it were a meaningful signal.

Modern analytics tools increasingly support confidence interval visualization, making this approach more accessible than ever. Configure your reporting dashboards to display uncertainty ranges alongside point estimates.
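If your tool does not report intervals, they are straightforward to compute. The sketch below uses the Wilson score interval, one common choice for proportions; the sample of 320 conversions from 10,000 visitors is an assumed example chosen to give a 3.2% point estimate.

```python
import math

def wilson_interval(conversions: int, visitors: int, z: float = 1.96) -> tuple:
    """Wilson score interval for a proportion; z=1.96 gives roughly 95% coverage."""
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    p = conversions / visitors
    denom = 1 + z**2 / visitors
    centre = (p + z**2 / (2 * visitors)) / denom
    half = z * math.sqrt(p * (1 - p) / visitors + z**2 / (4 * visitors**2)) / denom
    return centre - half, centre + half

# Hypothetical sample: 320 conversions out of 10,000 visitors.
low, high = wilson_interval(conversions=320, visitors=10_000)
print(f"{low:.1%} to {high:.1%}")
```

The interval narrows as the number of visitors grows, which is why the same 3.2% rate deserves much less trust on a low-traffic segment.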

Bayesian Approaches to Uncertainty

Bayesian statistical methods explicitly incorporate prior knowledge and update beliefs based on new evidence. This framework naturally handles uncertainty by expressing conclusions as probability distributions rather than fixed values. When evaluating whether a new feature improved engagement, Bayesian analysis might conclude “there’s an 87% probability the feature increased engagement by 5-15%.”

This probabilistic thinking often aligns better with business decision-making than traditional hypothesis testing, which yields a reject-or-fail-to-reject verdict rather than a probability of success. Stakeholders can weigh the probability of success against implementation costs to make informed choices even when data doesn’t provide absolute certainty.
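For conversion-style metrics, a simple Bayesian comparison can be done with a Beta-Binomial model and Monte Carlo sampling. The sketch below assumes uniform Beta(1, 1) priors and invented counts; it estimates the probability that the variant's rate exceeds the control's, not the size of the uplift.

```python
import random

def prob_variant_beats_control(conv_a: int, n_a: int, conv_b: int, n_b: int,
                               draws: int = 20_000, seed: int = 0) -> float:
    """Monte Carlo estimate of P(rate_B > rate_A) under independent Beta(1, 1) priors."""
    rng = random.Random(seed)  # fixed seed so the estimate is reproducible
    wins = 0
    for _ in range(draws):
        # Posterior for each rate is Beta(1 + successes, 1 + failures).
        rate_a = rng.betavariate(1 + conv_a, 1 + n_a - conv_a)
        rate_b = rng.betavariate(1 + conv_b, 1 + n_b - conv_b)
        wins += rate_b > rate_a
    return wins / draws

# Hypothetical test: control converts 300 of 10,000, variant 360 of 10,000.
p_better = prob_variant_beats_control(300, 10_000, 360, 10_000)
print(f"P(variant beats control) is about {p_better:.2f}")
```

An informative prior based on historical conversion rates can replace Beta(1, 1) when you have one, which is where the "prior knowledge" part of the Bayesian framing comes in.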

Sensitivity Analysis for Key Decisions

Before making important decisions based on data, conduct sensitivity analyses to understand how different assumptions affect your conclusions. If your decision depends on the relative performance of two channels, test how your conclusion changes if tracking capture rates differ by 10%, 20%, or 30% between channels.

When conclusions remain stable across reasonable uncertainty ranges, confidence increases appropriately. When small assumption changes flip your conclusion, you’ve identified a decision that requires additional data gathering before proceeding.
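A sensitivity check for a two-channel comparison can be sketched as follows. It assumes that true conversions equal observed conversions divided by the capture rate, that channel A is captured at 90%, and that the gap is expressed in percentage points; all numbers and function names are hypothetical.

```python
def adjusted_winner(obs_a: int, obs_b: int, capture_a: float, capture_b: float) -> str:
    """Scale observed conversions by assumed capture rates and name the larger channel."""
    true_a = obs_a / capture_a
    true_b = obs_b / capture_b
    return "A" if true_a > true_b else "B"

def sensitivity(obs_a: int, obs_b: int, gaps=(0.0, 0.1, 0.2, 0.3),
                base_capture: float = 0.9) -> dict:
    """Winner under each assumed gap, where channel B is captured worse than A by `gap`."""
    return {
        gap: adjusted_winner(obs_a, obs_b, base_capture, base_capture - gap)
        for gap in gaps
    }

# Hypothetical observed conversions for two channels.
result = sensitivity(obs_a=500, obs_b=430)
print(result)
```

In this made-up case the conclusion flips once channel B's capture rate is assumed to be 20 points worse than A's, which is exactly the kind of fragility worth resolving before moving budget.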

🤝 Fostering Organizational Data Literacy

Technical solutions only succeed when embedded within an organization that understands and values data quality. Building broad data literacy ensures that uncertainty considerations inform decisions at all levels.

Educating Stakeholders About Limitations

Combat the misconception that analytics provide perfect information. Regularly communicate known limitations, recent tracking issues, and the inherent uncertainty in metrics. When stakeholders understand these constraints, they make better decisions and avoid over-interpreting noisy data.

Create accessible documentation explaining what each metric actually measures, what it doesn’t capture, and what level of precision is reasonable to expect. A metric labeled “conversions” might exclude certain transaction types or include test purchases—clarifying these details prevents misinterpretation.

Establishing Data Governance Processes

Formal governance processes prevent tracking implementations from degrading over time. Require that any new feature or page includes tracking specifications reviewed by analytics specialists before deployment. Institute regular audits of existing tracking to catch drift and decay.

Designate clear ownership for different aspects of your analytics ecosystem. When specific individuals are accountable for data quality in their domains, issues get addressed more promptly and comprehensively.

💡 Communicating Uncertainty Without Undermining Confidence

There’s an art to acknowledging uncertainty while still projecting appropriate confidence in data-driven recommendations. The goal isn’t to paralyze decision-making with caveats but to right-size confidence to match actual data quality.

The Tiered Recommendation Approach

Present recommendations with confidence tiers based on data quality and statistical significance. High-confidence recommendations backed by robust data merit immediate action, while medium-confidence insights might warrant small-scale testing, and low-confidence hypotheses could guide future research priorities.

This framework acknowledges uncertainty while still providing actionable direction. Stakeholders appreciate the honesty and can allocate resources appropriately across the confidence spectrum.
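Tier rules work best when they are written down explicitly so that everyone applies them the same way. The sketch below is one hypothetical rule set; the capture-rate and p-value cut-offs are assumptions for illustration, not recommended thresholds.

```python
def recommendation_tier(capture_rate: float, p_value: float) -> str:
    """Map data quality and statistical evidence to an action tier (assumed thresholds)."""
    if capture_rate >= 0.95 and p_value < 0.01:
        return "high: act now"
    if capture_rate >= 0.85 and p_value < 0.05:
        return "medium: run a small-scale test"
    return "low: treat as a research hypothesis"

print(recommendation_tier(0.97, 0.004))
print(recommendation_tier(0.88, 0.03))
print(recommendation_tier(0.70, 0.03))
```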

Visualization Techniques for Uncertainty

Visual representations of uncertainty help non-technical audiences grasp concepts that might otherwise seem abstract. Error bars, shaded confidence regions, and animated visualizations showing sampling variation all make uncertainty tangible and understandable.

Avoid visualizations that imply false precision. Line charts with smooth curves suggest more certainty than typically warranted; consider scatter plots or stepped lines that better represent the discrete, uncertain nature of real data.

🚀 Advanced Techniques for Sophisticated Teams

Organizations with mature analytics practices can implement advanced approaches to push tracking uncertainty even lower while building sophisticated models of remaining uncertainty.

Machine Learning for Anomaly Detection

Machine learning models trained on historical data can automatically identify tracking anomalies that human analysts might miss. These systems learn normal patterns for different metrics and flag deviations that likely indicate tracking problems rather than genuine business changes.

Automated anomaly detection enables faster response to tracking breaks while reducing the burden on analysts who would otherwise need to manually monitor hundreds of metrics.
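Before investing in a trained model, a robust statistical baseline is often a useful first step. The sketch below is not machine learning: it flags outliers with a modified z-score based on the median and the median absolute deviation (MAD), using the commonly cited 3.5 cut-off and invented daily counts.

```python
from statistics import median

def robust_anomalies(values: list, threshold: float = 3.5) -> list:
    """Indices whose modified z-score (median and MAD based) exceeds threshold."""
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        return []  # no spread to measure against
    return [
        i for i, v in enumerate(values)
        if abs(0.6745 * (v - med) / mad) > threshold
    ]

# Hypothetical daily purchase events; index 6 is a suspected tracking break.
daily_purchases = [120, 118, 125, 122, 119, 121, 40, 123, 120, 124]
print(robust_anomalies(daily_purchases))
```

Because the median and MAD are barely moved by the outlier itself, this approach stays stable even when the break is severe, which a mean and standard deviation baseline would not.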

Probabilistic Data Matching

When deterministic user identification fails—due to privacy restrictions or technical limitations—probabilistic matching algorithms can estimate which events likely belong to the same user. These approaches acknowledge uncertainty explicitly, assigning probability scores to each potential match rather than forcing binary same-user or different-user decisions.

While not perfect, probabilistic matching recovers valuable insights that would otherwise be lost to fragmentation while quantifying the uncertainty introduced by the matching process.
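The idea of scoring matches instead of forcing a yes-or-no answer can be illustrated with a small log-odds model. Everything in this sketch is assumed: the signal names, their weights, and the prior are invented and uncalibrated, whereas a production system would estimate them from labelled data.

```python
import math

# Hypothetical log-odds contribution of each agreeing signal; not calibrated.
SIGNAL_WEIGHTS = {
    "same_ip": 1.2,
    "same_user_agent_family": 0.6,
    "same_city": 0.4,
    "within_10_min": 1.0,
}
PRIOR_LOG_ODDS = -3.0  # assumed: most random session pairs are different users

def match_probability(signals: dict) -> float:
    """Logistic of the prior log-odds plus the weights of the signals that agree."""
    score = PRIOR_LOG_ODDS + sum(
        weight for name, weight in SIGNAL_WEIGHTS.items() if signals.get(name)
    )
    return 1 / (1 + math.exp(-score))

strong = match_probability({name: True for name in SIGNAL_WEIGHTS})
weak = match_probability({})
print(round(strong, 3), round(weak, 3))
```

Downstream reports can then carry the probability along, for example by counting a 0.55 match as 0.55 of a shared user, so the matching uncertainty stays visible.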

🎓 Creating a Culture of Continuous Improvement

Reducing tracking uncertainty isn’t a one-time project but an ongoing commitment requiring sustained attention and resources. Organizations that treat data quality as a continuous improvement process rather than a problem to be solved once achieve superior results over time.

Institute regular retrospectives where teams review data quality issues encountered during recent analyses. What uncertainties affected important decisions? Which tracking gaps caused the most confusion? Use these insights to prioritize infrastructure improvements and process changes.

Celebrate wins when tracking improvements enable better decisions. When a server-side implementation recovers 15% of previously lost conversions, recognize the team responsible and communicate the business impact broadly. This positive reinforcement sustains momentum for data quality initiatives.

Invest in training and tools that empower team members to identify and address tracking issues independently. The more people who can diagnose and fix common problems, the faster issues get resolved and the higher your overall data quality.

🎯 Measuring Success in Uncertainty Reduction

Track your progress in reducing uncertainty using concrete metrics. Monitor the percentage of critical events successfully captured, the average time to detect and resolve tracking issues, and the degree of alignment between different data sources measuring the same phenomena.
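Time to detect and time to resolve can be computed from a simple incident log. The sketch below assumes each incident records when tracking broke, when the break was detected, and when it was fixed; the field names and timestamps are hypothetical.

```python
from datetime import datetime
from statistics import mean

# Hypothetical incident log for two tracking breaks.
incidents = [
    {"broke": datetime(2024, 3, 1, 9, 0),
     "detected": datetime(2024, 3, 1, 15, 0),
     "resolved": datetime(2024, 3, 2, 9, 0)},
    {"broke": datetime(2024, 4, 10, 8, 0),
     "detected": datetime(2024, 4, 10, 10, 0),
     "resolved": datetime(2024, 4, 10, 14, 0)},
]

def mean_hours(records: list, start_key: str, end_key: str) -> float:
    """Average elapsed hours between two timestamps across all records."""
    return mean(
        (r[end_key] - r[start_key]).total_seconds() / 3600 for r in records
    )

hours_to_detect = mean_hours(incidents, "broke", "detected")
hours_to_resolve = mean_hours(incidents, "detected", "resolved")
print(hours_to_detect, hours_to_resolve)
```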

Survey stakeholders regularly about their confidence in data quality and their understanding of tracking limitations. Improvements in these subjective measures indicate that your educational and technical efforts are succeeding in building appropriate confidence.

Document case studies where acknowledging and addressing uncertainty led to better decisions. Perhaps sensitivity analysis prevented a costly strategic mistake, or confidence intervals helped you avoid over-reacting to random fluctuations. These stories demonstrate the tangible value of treating uncertainty seriously.

The journey toward better tracking and appropriate confidence never truly ends, but each step forward compounds previous gains. Organizations that embrace uncertainty as a manageable challenge rather than an uncomfortable truth position themselves to extract maximum value from their data while avoiding the pitfalls of false precision. Your competitive advantage increasingly depends not just on having data, but on truly understanding and trusting that data at the appropriate level of confidence.

Toni Santos is a bioacoustic researcher and conservation technologist specializing in the study of animal communication systems, acoustic monitoring infrastructures, and the sonic landscapes embedded in natural ecosystems. Through an interdisciplinary and sensor-focused lens, Toni investigates how wildlife encodes behavior, territory, and survival into the acoustic world, across species, habitats, and conservation challenges.

His work is grounded in a fascination with animals not only as lifeforms, but as carriers of acoustic meaning. From endangered vocalizations to soundscape ecology and bioacoustic signal patterns, Toni uncovers the technological and analytical tools through which researchers preserve their understanding of the acoustic unknown.

With a background in applied bioacoustics and conservation monitoring, Toni blends signal analysis with field-based research to reveal how sounds are used to track presence, monitor populations, and decode ecological knowledge. As the creative mind behind Nuvtrox, Toni curates indexed communication datasets, sensor-based monitoring studies, and acoustic interpretations that revive the deep ecological ties between fauna, soundscapes, and conservation science.

His work is a tribute to:

- The archived vocal diversity of Animal Communication Indexing
- The tracked movements of Applied Bioacoustics Tracking
- The ecological richness of Conservation Soundscapes
- The layered detection networks of Sensor-based Monitoring

Whether you're a bioacoustic analyst, conservation researcher, or curious explorer of acoustic ecology, Toni invites you to explore the hidden signals of wildlife communication: one call, one sensor, one soundscape at a time.