Modern data fusion techniques are revolutionizing how organizations capture, analyze, and interpret information by combining acoustic and visual tracking systems into powerful analytical frameworks.

🔊 The Revolution of Multi-Sensory Data Collection

In an increasingly connected world, the ability to gather comprehensive data from multiple sources has become paramount for businesses, researchers, and security professionals. Acoustic and visual tracking technologies have evolved independently for decades, but their true potential emerges when these data streams converge through sophisticated fusion techniques.

Visual tracking systems capture spatial information, movements, and environmental context through cameras and image sensors. Meanwhile, acoustic tracking relies on microphones and sound analysis to detect patterns, identify sources, and measure audio characteristics. When combined, these complementary technologies create a holistic understanding that neither could achieve alone.

The synergy between sight and sound mirrors human perception itself. Just as we naturally integrate what we see and hear to understand our surroundings, data fusion systems leverage both modalities to overcome individual limitations and enhance overall accuracy.

🎯 Core Principles Behind Effective Data Fusion

Data fusion operates on several fundamental principles that determine its effectiveness. The first principle involves temporal synchronization—ensuring that acoustic and visual data streams align precisely in time. Without proper synchronization, the system cannot correlate sounds with their visual sources accurately.
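
As a rough illustration of temporal synchronization, the sketch below pairs each video frame with the nearest audio analysis frame. It assumes both streams are already timestamped on a shared clock and that neither list is empty. The `audio_latency` offset is a hypothetical parameter standing in for a measured capture delay. Real systems may also need to correct clock drift between devices.

```python
from bisect import bisect_left


def align_to_video(audio_times, video_times, audio_latency=0.0):
    """Return, for each video frame, the index of the nearest audio frame.

    audio_times, video_times: sorted, non-empty timestamps in seconds on a
    shared clock. audio_latency: hypothetical fixed delay of the audio
    capture path (s), subtracted so both streams refer to when events occurred.
    """
    corrected = [t - audio_latency for t in audio_times]
    matches = []
    for vt in video_times:
        i = bisect_left(corrected, vt)
        # The nearest audio frame is one of the two neighbours of the insertion point.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(corrected)]
        best = min(candidates, key=lambda j: abs(corrected[j] - vt))
        matches.append(best)
    return matches
```

With 10 ms audio hops and 25 fps video, each video frame maps to every fourth audio frame, and a 20 ms audio latency shifts every match by two audio frames.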

Spatial calibration represents another critical foundation. Visual and acoustic sensors must share a common coordinate system, allowing the system to map sound sources to physical locations captured by cameras. This spatial alignment enables precise localization and tracking of objects or individuals.
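
A simple case of spatial calibration is projecting an acoustic direction of arrival (azimuth) onto the camera image. The sketch below is a minimal illustration under strong assumptions. It treats the camera as an ideal pinhole with no lens distortion, places the principal point at the image centre, and puts the microphone array at the camera's optical centre. The horizontal field of view `hfov_deg` is an assumed calibration input. In practice, the physical offset between the two sensors has to be measured and compensated.

```python
import math


def azimuth_to_pixel(azimuth_deg, image_width, hfov_deg):
    """Map an acoustic azimuth (degrees) to a horizontal pixel coordinate.

    Assumes (hypothetically) a co-located microphone array and pinhole camera,
    azimuth measured from the optical axis, positive to the right.
    Returns None when the source lies outside the camera's field of view.
    """
    half_fov = math.radians(hfov_deg) / 2
    az = math.radians(azimuth_deg)
    if abs(az) >= half_fov:
        return None  # heard but not visible: the camera cannot confirm it
    focal_px = (image_width / 2) / math.tan(half_fov)  # focal length in pixels
    cx = image_width / 2  # principal point assumed at the image centre
    return cx + focal_px * math.tan(az)
```

A source straight ahead lands on the centre column, and sources outside the field of view return `None`. The fusion logic can then fall back to acoustic-only tracking for those sources.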

The third principle centers on complementarity. Effective fusion architectures recognize that acoustic and visual data provide different but complementary information. Visual data excels in spatial resolution and object identification. Sound, by contrast, diffracts around many obstacles, so acoustic data can provide directional information even when the source is visually occluded.

Levels of Integration in Multi-Modal Systems

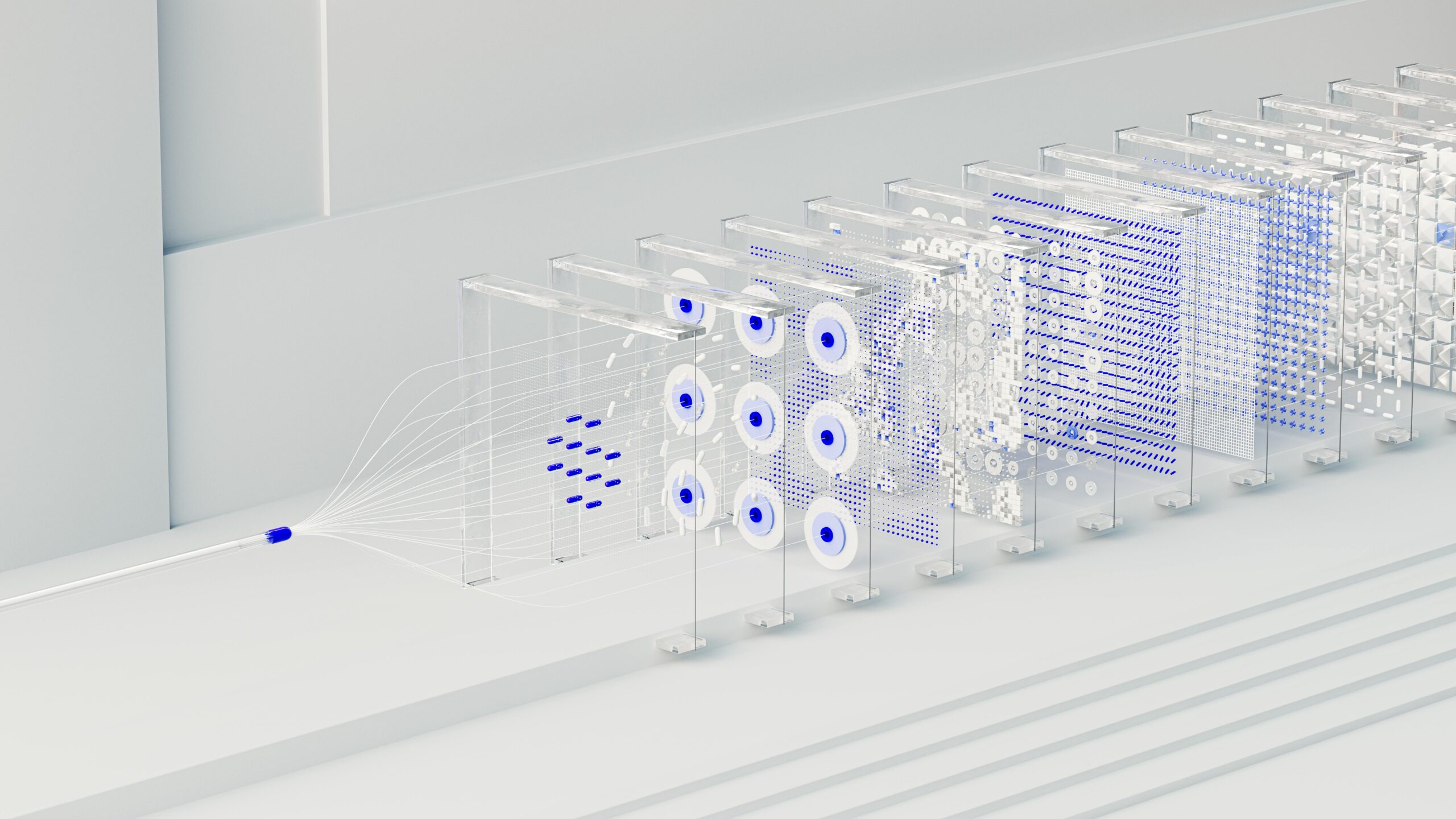

Data fusion occurs at different levels depending on the application requirements and system architecture. Low-level fusion combines raw sensor data before feature extraction, offering maximum information preservation but requiring significant computational resources.

Mid-level fusion processes each modality separately to extract features, then combines these features for decision-making. This approach balances computational efficiency with information richness, making it popular in real-time applications.
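
A minimal sketch of mid-level (feature-level) fusion follows. It assumes each modality's features have already been extracted, for example by a hypothetical image embedder and a spectral feature extractor. It also assumes the per-feature mean and standard deviation were estimated on training data. Each modality is normalised separately and the results are concatenated into one vector for a downstream classifier.

```python
def zscore(vec, mean, std):
    """Normalise one modality's features with training-set statistics."""
    return [(x - m) / s for x, m, s in zip(vec, mean, std)]


def fuse_features(visual, acoustic, vis_stats, ac_stats, ac_weight=1.0):
    """Feature-level fusion: normalise each modality, then concatenate.

    vis_stats / ac_stats: (mean, std) lists for each modality.
    ac_weight: hypothetical scale for balancing the acoustic block's
    influence on distance-based classifiers.
    """
    v = zscore(visual, *vis_stats)
    a = [ac_weight * x for x in zscore(acoustic, *ac_stats)]
    return v + a  # one joint feature vector
```

Normalising per modality keeps features measured in very different units, such as pixel counts and decibels, from dominating the joint vector purely because of their scale.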

High-level fusion makes independent decisions from each sensor modality, then combines these decisions through voting schemes or probabilistic methods. While computationally efficient, this approach may discard valuable correlations between modalities.
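
The two families of high-level combination mentioned above can be sketched as follows. The first is a majority vote over hard labels. The second is a probabilistic product rule over per-modality class posteriors. The product rule as written assumes the modalities are conditionally independent given the class, which is a simplifying assumption. The class names and probabilities in the example are illustrative only.

```python
import math


def majority_vote(labels):
    """Each modality casts one vote; ties go to the label seen first."""
    counts = {}
    for label in labels:
        counts[label] = counts.get(label, 0) + 1
    return max(counts, key=counts.get)


def product_rule(posteriors, priors):
    """Combine per-modality class posteriors P(c | x_m) into one distribution.

    Assumes conditional independence given the class, so
    P(c | x_1..x_M) is proportional to prod_m P(c | x_m) / P(c)**(M - 1).
    All posteriors must be strictly positive (log of zero is undefined).
    """
    m = len(posteriors)
    log_scores = {
        c: sum(math.log(p[c]) for p in posteriors) - (m - 1) * math.log(prior)
        for c, prior in priors.items()
    }
    # Normalise with the log-sum-exp trick to avoid numerical underflow.
    top = max(log_scores.values())
    z = sum(math.exp(s - top) for s in log_scores.values())
    return {c: math.exp(s - top) / z for c, s in log_scores.items()}


visual = {"intruder": 0.7, "benign": 0.3}
acoustic = {"intruder": 0.6, "benign": 0.4}
fused = product_rule([visual, acoustic], priors={"intruder": 0.5, "benign": 0.5})
```

Here, two moderately confident modalities that agree yield a fused "intruder" probability of 0.42 / 0.54 ≈ 0.78, higher than either modality alone. Voting, by contrast, discards the confidence information entirely.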

📊 Applications Transforming Industries

The practical applications of acoustic and visual tracking fusion span numerous sectors, each leveraging the technology’s unique capabilities to solve specific challenges and unlock new possibilities.

Smart Surveillance and Security Systems

Security operations have been transformed by fusion systems that detect both visual anomalies and acoustic signatures. A camera might identify a person approaching a restricted area while microphones detect unusual sounds like breaking glass or raised voices. The combined analysis provides security personnel with comprehensive situational awareness.

Modern surveillance systems can track individuals across multiple cameras while maintaining acoustic continuity, even when visual tracking is temporarily lost due to occlusions. The acoustic signature helps reestablish visual tracking when the subject reemerges.
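
One way the acoustic signature could help re-establish a lost visual track is sketched below. The sketch assumes each track stores an acoustic feature vector, such as averaged spectral features; that feature choice is itself an assumption. A newly detected subject is matched to the most similar lost track by cosine similarity. The `threshold` value is hypothetical and would need tuning per site.

```python
import math


def cosine(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def reassociate(new_signature, lost_tracks, threshold=0.8):
    """Return the id of the lost track whose acoustic signature matches best.

    lost_tracks: {track_id: signature} for tracks whose visual lock was lost.
    Returns None when no track reaches the (hypothetical) threshold.
    """
    best_id, best_sim = None, threshold
    for track_id, sig in lost_tracks.items():
        sim = cosine(new_signature, sig)
        if sim >= best_sim:
            best_id, best_sim = track_id, sim
    return best_id
```

Returning `None` below the threshold lets the system start a new track rather than force a doubtful match.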

Wildlife Conservation and Environmental Monitoring

Conservation biologists deploy acoustic and visual sensors to monitor wildlife populations without human presence. Cameras capture visual evidence of species presence and behavior, while acoustic sensors detect vocalizations, often identifying species that are visually camouflaged or nocturnal.

This dual approach provides richer ecological data. Researchers can correlate visual observations of animal behavior with acoustic patterns to study how different species interact and respond to environmental changes. The combined record can reveal behavioral patterns and population dynamics that neither data type captures on its own.

Autonomous Vehicles and Robotics

Self-driving vehicles increasingly rely on sensor fusion to navigate safely. Visual cameras identify road markings, traffic signs, and obstacles, while acoustic sensors detect emergency vehicle sirens, horns, and other auditory warnings that might not be immediately visible.

Robotic systems operating in complex environments use similar fusion strategies. A warehouse robot might use cameras to navigate visually while acoustic sensors detect approaching forklifts or warning signals, enabling safer operation in shared spaces.

Healthcare and Assisted Living

Healthcare facilities implement fusion systems to monitor patient safety and well-being. Cameras track patient movement and potential falls, while acoustic monitoring detects calls for help, irregular breathing patterns, or other auditory indicators of distress.

In assisted living environments, these systems let elderly residents live more independently while ensuring rapid response to emergencies. They can also be less intrusive than constant visual monitoring, because acoustic patterns trigger visual verification only when necessary.
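
The acoustic-first pattern described above can be sketched as a simple trigger. The camera stays idle until an audio frame's RMS level crosses a threshold, and a cooldown period stops one event from requesting repeated captures. The sketch assumes audio arrives as fixed-length frames of samples normalised to [-1, 1]. `on_level` and `cooldown` are hypothetical parameters. A deployed system would more likely use a trained detector for falls or calls for help than a raw loudness threshold.

```python
import math


def rms(frame):
    """Root-mean-square level of one frame of audio samples."""
    return math.sqrt(sum(s * s for s in frame) / len(frame))


def verification_requests(frames, on_level=0.3, cooldown=3):
    """Return indices of audio frames that should trigger a visual check.

    A frame whose RMS exceeds `on_level` requests visual verification;
    further requests are suppressed for the next `cooldown` frames.
    """
    requests, next_allowed = [], 0
    for i, frame in enumerate(frames):
        if i >= next_allowed and rms(frame) > on_level:
            requests.append(i)
            next_allowed = i + cooldown + 1
    return requests
```

With a cooldown of three frames, a loud event spanning frames 1 to 4 produces a single request at frame 1. A new loud frame at index 6 triggers a second request.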

🛠️ Technical Architectures and Methodologies

Implementing effective fusion systems requires careful architectural decisions and appropriate methodologies for combining disparate data types into coherent insights.

Machine Learning Approaches

Modern fusion systems increasingly leverage machine learning algorithms that automatically learn optimal combination strategies from training data. Deep neural networks can process raw acoustic and visual inputs simultaneously, learning complex cross-modal correlations.

Convolutional neural networks excel at processing visual data, while recurrent networks (or convolutional networks applied to spectrograms) model temporal acoustic patterns. Fusion architectures often combine these specialized networks, allowing each to extract relevant features before integration.

Transfer learning techniques enable systems trained on one fusion task to adapt quickly to related applications, reducing the data requirements and training time for new deployments.

Probabilistic Fusion Frameworks

Bayesian approaches provide rigorous mathematical foundations for data fusion, treating sensor measurements as evidence that updates probability distributions over possible states. This framework naturally handles uncertainty and sensor reliability.
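
In the simplest one-dimensional case, this Bayesian update has a closed form. Assume the acoustic and visual sensors each give an independent Gaussian estimate of a static position, and assume a flat prior. Multiplying the two likelihoods then yields a Gaussian whose mean is the inverse-variance weighted average of the measurements. The noise variances in the example are illustrative assumptions, not measured values.

```python
def fuse_gaussian(mu_a, var_a, mu_v, var_v):
    """Posterior mean and variance from two independent Gaussian measurements.

    mu_a, var_a: acoustic estimate and its noise variance.
    mu_v, var_v: visual estimate and its noise variance.
    Assumes a flat prior, so the posterior is the normalised likelihood product.
    """
    w_a, w_v = 1.0 / var_a, 1.0 / var_v  # precision (reliability) of each sensor
    var = 1.0 / (w_a + w_v)  # fused variance is smaller than either input
    mu = var * (w_a * mu_a + w_v * mu_v)
    return mu, var


# A coarse acoustic fix at 2.0 m (variance 1.0) and a sharper visual fix
# at 3.0 m (variance 0.25) give a fused estimate of 2.8 m with variance 0.2.
mu, var = fuse_gaussian(2.0, 1.0, 3.0, 0.25)
```

The less reliable sensor still contributes, but its influence shrinks in proportion to its variance. This is how the framework "naturally handles sensor reliability".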

Kalman filters (for linear models with Gaussian noise) and particle filters (for nonlinear or non-Gaussian models) extend these concepts to tracking applications. They maintain probability distributions over object locations and velocities and update them as acoustic and visual measurements arrive.
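
The sketch below shows a minimal constant-velocity Kalman filter on one axis. It fuses position readings from both modalities by applying each as a sequential update with its own noise variance. The noise levels, the process-noise intensity `q`, and the camera seeing the target only every other step are all hypothetical choices for illustration. Noise-free readings are used so the demo is deterministic.

```python
class KalmanCV1D:
    """Constant-velocity Kalman filter with state [position, velocity]."""

    def __init__(self, x0, p0=10.0, q=0.1):
        self.x = [x0, 0.0]  # state estimate: position, velocity
        self.P = [[p0, 0.0], [0.0, p0]]  # state covariance
        self.q = q  # process-noise intensity (white-noise acceleration)

    def predict(self, dt):
        """Propagate the state: P <- F P F^T + Q with F = [[1, dt], [0, 1]]."""
        x, v = self.x
        self.x = [x + v * dt, v]
        (p00, p01), (p10, p11) = self.P
        q = self.q
        self.P = [
            [p00 + dt * (p01 + p10) + dt * dt * p11 + q * dt**3 / 3,
             p01 + dt * p11 + q * dt**2 / 2],
            [p10 + dt * p11 + q * dt**2 / 2,
             p11 + q * dt],
        ]

    def update(self, z, r):
        """Fuse one position measurement z with noise variance r (H = [1, 0])."""
        (p00, p01), (p10, p11) = self.P
        s = p00 + r  # innovation variance H P H^T + R
        k0, k1 = p00 / s, p10 / s  # Kalman gain K = P H^T / s
        y = z - self.x[0]  # innovation
        self.x = [self.x[0] + k0 * y, self.x[1] + k1 * y]
        # P <- (I - K H) P
        self.P = [
            [(1 - k0) * p00, (1 - k0) * p01],
            [p10 - k1 * p00, p11 - k1 * p01],
        ]


kf = KalmanCV1D(x0=0.0)
ACOUSTIC_VAR, VISUAL_VAR = 0.5, 0.05  # hypothetical sensor noise variances
for t in range(1, 11):
    kf.predict(dt=0.1)
    true_pos = t * 0.1  # target moving at 1 m/s
    kf.update(true_pos, ACOUSTIC_VAR)  # microphone reading every step
    if t % 2 == 0:  # camera sees the target only every other step
        kf.update(true_pos, VISUAL_VAR)
```

The filter infers velocity even though neither sensor measures it directly. On steps where the camera is absent, the acoustic update alone keeps the track alive.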

⚡ Overcoming Implementation Challenges

Despite its tremendous potential, acoustic and visual data fusion presents several technical and practical challenges that implementers must address.

Sensor Calibration and Synchronization

Achieving precise spatial and temporal alignment between acoustic and visual sensors remains technically demanding. Cameras and microphones have different sampling rates, latencies, and coordinate systems that must be carefully calibrated.
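
A common way to estimate a fixed latency between the streams is to cross-correlate an audio activity signal with a visual one, such as per-frame audio energy against per-frame motion energy. The sketch below assumes both signals have already been resampled to a common frame rate. The choice of motion energy (for example, mean absolute frame difference) is an assumption. The correlation is left unnormalised for brevity.

```python
def estimate_lag(audio_env, visual_motion, max_lag):
    """Return the lag (in frames) that best aligns two activity signals.

    A positive result means the audio lags the video by that many frames.
    """
    def centred(xs):
        m = sum(xs) / len(xs)
        return [x - m for x in xs]

    a, v = centred(audio_env), centred(visual_motion)
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        # Correlate a[t + lag] with v[t] over the overlapping samples only.
        score = sum(a[t + lag] * v[t] for t in range(len(v)) if 0 <= t + lag < len(a))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag
```

For example, a door slam visible at frame 2 but heard at frame 4 yields a lag of +2 frames. That offset can then be subtracted before temporal alignment.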

Environmental factors complicate calibration. Acoustic propagation varies with temperature, humidity, and obstacles, while visual tracking contends with lighting changes and optical distortions. Robust systems must account for these dynamic factors.
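
Temperature is the easiest of these factors to illustrate. In dry air, the speed of sound is commonly approximated as c ≈ 331.3 + 0.606·T m/s, with T in °C. The sketch below uses that linear approximation and ignores humidity, so it is a simplification. It converts a time difference of arrival (TDOA) between two microphones into a path-length difference.

```python
def speed_of_sound(temp_c):
    """Approximate speed of sound in dry air (m/s), linear in temperature (degC)."""
    return 331.3 + 0.606 * temp_c


def range_difference(tdoa_s, temp_c):
    """Path-length difference (m) implied by a time difference of arrival (s)."""
    return speed_of_sound(temp_c) * tdoa_s


# The same 10 ms TDOA interpreted at 20 degC versus 0 degC.
error_m = range_difference(0.01, 20.0) - range_difference(0.01, 0.0)
```

Assuming 20 °C when the air is actually at 0 °C overstates the path difference for a 10 ms TDOA by about 0.12 m. That error propagates directly into acoustic localization.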

Computational Resource Requirements

Processing multiple high-resolution data streams simultaneously demands substantial computational power. Real-time applications must balance processing complexity against latency requirements, often requiring specialized hardware accelerators or cloud computing resources.

Edge computing architectures address this challenge by distributing processing between local sensors and centralized servers. Initial processing occurs at the sensor level, with only relevant features or events transmitted for centralized fusion and analysis.

Privacy and Ethical Considerations

The comprehensive monitoring capabilities of fusion systems raise important privacy concerns. Organizations deploying these technologies must implement appropriate safeguards, including data encryption, access controls, and transparent policies about data usage and retention.

Privacy-preserving techniques like on-device processing and anonymization help balance utility with ethical obligations. Systems can extract relevant behavioral patterns without retaining identifiable visual or voice characteristics.

🚀 Emerging Trends and Future Directions

The field of multi-modal data fusion continues evolving rapidly, with several emerging trends promising to expand capabilities and applications.

Integration of Additional Sensory Modalities

Future systems will incorporate thermal imaging, radar, LiDAR, and chemical sensors alongside acoustic and visual tracking. This expansion toward comprehensive environmental sensing will enable even richer situational understanding.

The fusion of more modalities introduces complexity but also robustness. When one sensor modality fails or provides ambiguous information, others can compensate, maintaining system performance across diverse conditions.

Adaptive and Context-Aware Fusion

Next-generation systems will dynamically adjust fusion strategies based on context and performance. In good lighting, visual tracking might dominate, while acoustic cues become more important in darkness or visually cluttered environments.

Machine learning systems will continuously evaluate sensor reliability and adjust fusion weights accordingly, optimizing performance without manual reconfiguration.
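
A learned system is beyond a short example, but the underlying idea of reliability-driven weights can be sketched with a simple heuristic. Each sensor's noise variance is estimated online, and the readings are fused by inverse-variance weighting. A sensor that drops out (`None`) is simply left out. The smoothing factor `alpha` and the variance floor are hypothetical parameters. The variance estimate also assumes the target changes slowly relative to the averaging window; a fast-moving target would inflate it.

```python
class AdaptiveFuser:
    """Inverse-variance fusion with per-sensor noise variance estimated online."""

    def __init__(self, modalities=("acoustic", "visual"), alpha=0.2,
                 init_var=1.0, floor=1e-6):
        self.alpha = alpha  # EMA smoothing factor
        self.floor = floor  # keeps every variance, and hence every weight, finite
        self.mean = {m: None for m in modalities}
        self.var = {m: init_var for m in modalities}

    def _track(self, m, z):
        """Update modality m's exponential moving mean and variance."""
        if self.mean[m] is None:
            self.mean[m] = z
            return
        d = z - self.mean[m]
        self.mean[m] += self.alpha * d
        self.var[m] = max(self.floor,
                          (1 - self.alpha) * (self.var[m] + self.alpha * d * d))

    def fuse(self, readings):
        """readings maps modality -> value; None marks a sensor that dropped out."""
        live = {m: z for m, z in readings.items() if z is not None}
        if not live:
            return None
        for m, z in live.items():
            self._track(m, z)
        weights = {m: 1.0 / self.var[m] for m in live}
        total = sum(weights.values())
        return sum(weights[m] * z for m, z in live.items()) / total
```

If the visual readings are steady while the acoustic readings fluctuate, as in good lighting with background noise, the visual weight grows automatically. The weighting shifts back toward acoustics if the camera's readings become erratic or stop arriving.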

Miniaturization and Ubiquitous Deployment

Advances in sensor technology and low-power processing enable smaller, more affordable fusion systems. This miniaturization will facilitate widespread deployment in consumer devices, wearables, and Internet of Things applications.

Smart homes, cities, and industrial facilities will leverage ubiquitous acoustic and visual sensing to optimize operations, enhance safety, and provide personalized services.

💡 Best Practices for Successful Implementation

Organizations planning to implement acoustic and visual fusion systems should follow established best practices to maximize success and return on investment.

Start with Clear Objectives

Define specific problems the fusion system should solve before selecting technologies. Clear objectives guide sensor selection, fusion architecture choices, and performance metrics.

Pilot projects in controlled environments help validate approaches before full-scale deployment. These pilots reveal practical challenges and allow iterative refinement of algorithms and hardware configurations.

Invest in Quality Data Collection

Fusion system performance depends critically on input data quality. Choose sensors whose specifications match the application's requirements. High-quality microphones and cameras, with sensitivities, resolutions, and frame rates suited to the task, form the foundation of effective systems.

Regular calibration and maintenance ensure sustained performance. Environmental changes, sensor drift, and physical disturbances can degrade calibration over time, requiring periodic recalibration procedures.

Build Interdisciplinary Teams

Successful fusion projects require expertise spanning signal processing, computer vision, machine learning, and domain-specific knowledge. Interdisciplinary teams understand both technical capabilities and application requirements, bridging the gap between possibility and practical utility.

🌐 The Transformative Impact on Decision-Making

Beyond technical capabilities, acoustic and visual data fusion fundamentally transforms how organizations make decisions by providing richer, more reliable information.

Traditional single-modality systems force decision-makers to work with incomplete pictures of situations. Fusion systems reduce uncertainty, enabling more confident and timely decisions across security, operations, and strategic planning.

The technology democratizes access to sophisticated analytical capabilities. What once required extensive manual observation and analysis now happens automatically, freeing human experts to focus on high-level interpretation and response rather than basic information gathering.

In emergency response scenarios, fusion systems provide first responders with comprehensive situational awareness before arrival, improving safety and effectiveness. Security personnel receive both visual context and acoustic alerts, understanding not just what is happening but also the complete sensory environment.

🔮 Building Tomorrow’s Intelligent Environments

The ultimate vision for acoustic and visual data fusion extends beyond specific applications to create truly intelligent environments that perceive, understand, and respond to human needs seamlessly.

Smart buildings will adjust lighting, temperature, and acoustics based on occupancy patterns and activities detected through fusion systems. Conference rooms will automatically configure audiovisual systems when meetings begin, while manufacturing facilities will anticipate equipment failures through abnormal acoustic and visual signatures.

Transportation infrastructure will coordinate traffic signals, pedestrian crossings, and emergency vehicle routing based on comprehensive acoustic and visual monitoring of traffic patterns and incidents.

These intelligent environments will operate unobtrusively, respecting privacy while enhancing safety, efficiency, and quality of life. The power of data fusion lies not in surveillance but in creating responsive spaces that adapt to human needs.

As acoustic and visual tracking technologies continue maturing and fusion methodologies become more sophisticated, the boundary between possibility and reality continues shifting. Organizations embracing these technologies today position themselves at the forefront of a transformation that will define how we interact with our physical environments for decades to come.

Toni Santos is a bioacoustic researcher and conservation technologist specializing in the study of animal communication systems, acoustic monitoring infrastructures, and the sonic landscapes embedded in natural ecosystems. Through an interdisciplinary and sensor-focused lens, Toni investigates how wildlife encodes behavior, territory, and survival into the acoustic world, across species, habitats, and conservation challenges.

His work is grounded in a fascination with animals not only as lifeforms, but as carriers of acoustic meaning. From endangered vocalizations to soundscape ecology and bioacoustic signal patterns, Toni uncovers the technological and analytical tools through which researchers preserve their understanding of the acoustic unknown.

With a background in applied bioacoustics and conservation monitoring, Toni blends signal analysis with field-based research to reveal how sounds are used to track presence, monitor populations, and decode ecological knowledge.

As the creative mind behind Nuvtrox, Toni curates indexed communication datasets, sensor-based monitoring studies, and acoustic interpretations that revive the deep ecological ties between fauna, soundscapes, and conservation science.

His work is a tribute to:

The archived vocal diversity of Animal Communication Indexing
The tracked movements of Applied Bioacoustics Tracking
The ecological richness of Conservation Soundscapes
The layered detection networks of Sensor-based Monitoring

Whether you're a bioacoustic analyst, conservation researcher, or curious explorer of acoustic ecology, Toni invites you to explore the hidden signals of wildlife communication: one call, one sensor, one soundscape at a time.