Optimizing performance in data acquisition systems requires careful consideration of sampling rates and storage limitations, two factors that directly impact system efficiency and data quality.

🎯 Understanding the Fundamental Trade-Off

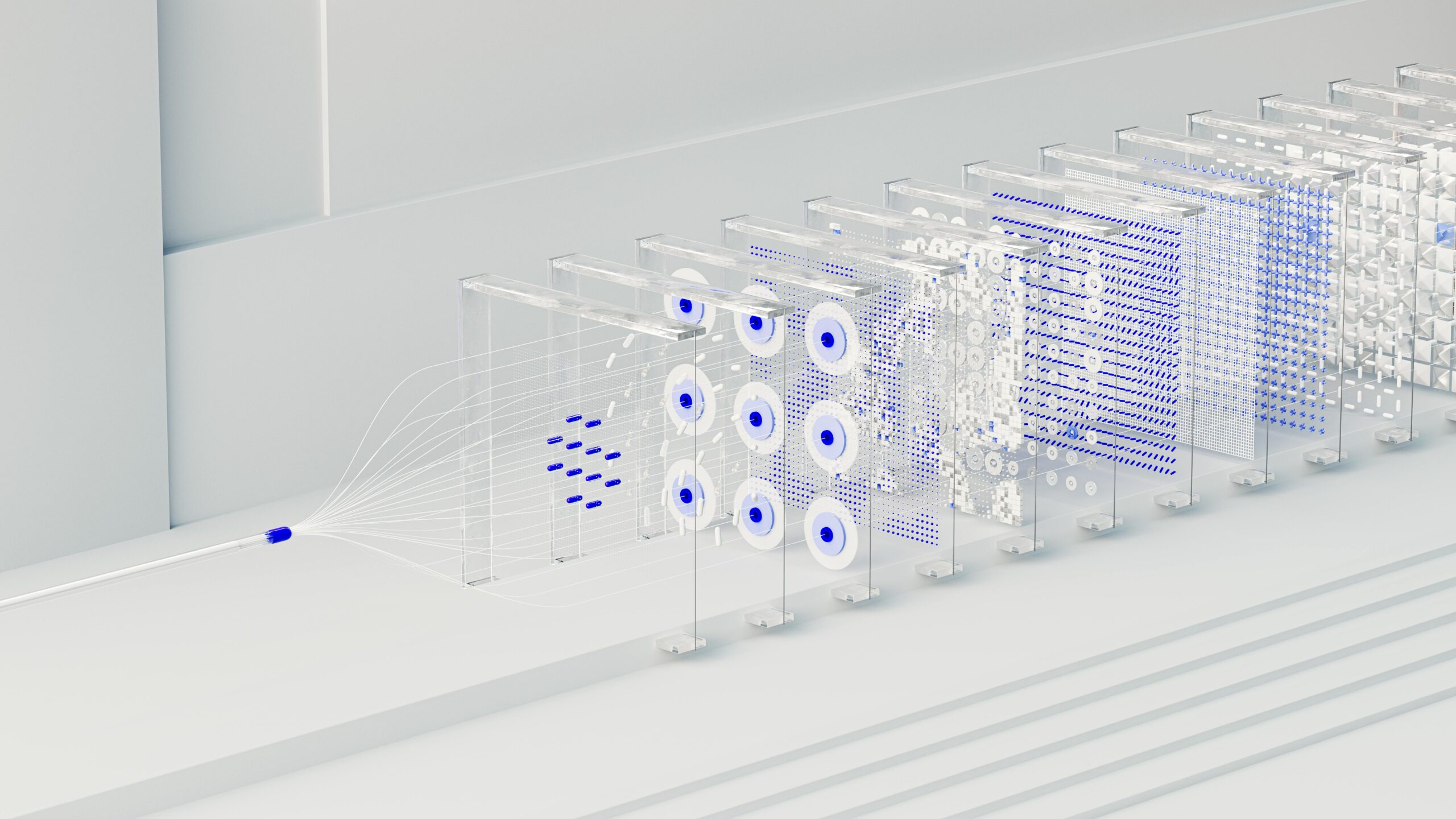

Every engineer working with data acquisition systems faces a critical challenge: balancing the need for high-quality data capture against the practical limitations of storage capacity. This challenge exists across numerous industries, from audio recording and scientific instrumentation to IoT sensors and industrial monitoring systems. The sampling rate determines how frequently measurements are taken, while storage constraints define how much data can be retained over time.

These two parameters pull against each other: for a fixed amount of storage, every increase in sampling rate shortens how long the system can keep recording. Higher sampling rates generate more data points, providing better temporal resolution and the ability to capture rapid signal changes. However, this comes at the cost of increased storage consumption, processing requirements, and potential bandwidth limitations. Understanding this fundamental trade-off is essential for designing efficient systems that meet performance objectives without overwhelming infrastructure resources.

📊 The Mathematics Behind Sampling and Storage

The Nyquist-Shannon sampling theorem establishes that a band-limited signal can be reconstructed exactly only if the sampling rate exceeds twice the highest frequency component present in that signal. This threshold, twice the highest frequency, is known as the Nyquist rate and represents the theoretical minimum for faithful signal reproduction. Sampling below it introduces aliasing, where high-frequency components masquerade as lower frequencies. Once the signal has been sampled, aliased components cannot be separated from genuine ones, so the corruption is irreversible.
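To make the folding concrete, here is a minimal Python sketch that computes where a pure tone appears after sampling. The helper name `alias_frequency` is hypothetical, and the sketch assumes an ideal sampler with no anti-aliasing filter in front of it.

```python
def alias_frequency(f_signal_hz: float, fs_hz: float) -> float:
    """Apparent frequency of a pure tone after ideal sampling at fs_hz.

    The sampled spectrum repeats every fs_hz, so any tone folds back into
    the band [0, fs_hz / 2] (the first Nyquist zone).
    """
    if fs_hz <= 0:
        raise ValueError("sampling rate must be positive")
    f = f_signal_hz % fs_hz      # position within one spectral period
    return min(f, fs_hz - f)     # fold into [0, fs / 2]


# With fs = 1 kHz the Nyquist frequency is 500 Hz.
print(alias_frequency(900.0, 1000.0))   # 100.0: indistinguishable from a 100 Hz tone
print(alias_frequency(300.0, 1000.0))   # 300.0: below fs / 2, no aliasing
```

Because a 900 Hz tone and a 100 Hz tone produce identical samples at 1 kHz, no processing after the sampler can tell them apart, which is why filtering has to happen before conversion.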

Storage requirements scale linearly with sampling rate and recording duration. A simple calculation illustrates this relationship: a single-channel system sampling at 1 kHz with 16-bit (2-byte) resolution generates 2,000 bytes (2 kB) per second, or 172.8 megabytes per day. Add more channels or increase the sampling rate tenfold, and storage demands quickly become substantial.

The formula for calculating storage requirements is straightforward:

Storage (bytes) = Sampling Rate (Hz) × Bit Depth (bits) / 8 × Number of Channels × Duration (seconds)

This equation becomes the foundation for capacity planning, helping engineers predict infrastructure needs and identify potential bottlenecks before deployment.
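The formula translates directly into a small Python helper for capacity planning. The name `storage_bytes` is illustrative, and the sketch assumes samples are stored at exactly the stated bit depth; many systems store 12- or 24-bit samples in wider containers, in which case the stored width is what should be passed in.

```python
SECONDS_PER_DAY = 86_400


def storage_bytes(fs_hz: float, bit_depth: int, channels: int, duration_s: float) -> float:
    """Raw storage in bytes: sampling rate x (bits / 8) x channels x duration."""
    return fs_hz * bit_depth / 8 * channels * duration_s


# The single-channel example from the text: 1 kHz at 16 bits.
print(storage_bytes(1_000, 16, 1, 1))                      # 2000.0 bytes per second
print(storage_bytes(1_000, 16, 1, SECONDS_PER_DAY) / 1e6)  # 172.8 MB per day

# Eight channels at 10 kHz raise the data rate by a factor of 80.
print(storage_bytes(10_000, 16, 8, SECONDS_PER_DAY) / 1e9)  # 13.824 GB per day
```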

🔍 Signal Characteristics and Sampling Requirements

Not all signals require identical sampling rates. The nature of the measured phenomenon dictates appropriate sampling strategies. Slowly varying parameters like ambient temperature or soil moisture may need sampling only once per minute or even hourly, while vibration analysis or acoustic measurements demand rates in the kilohertz or megahertz range.

Understanding signal bandwidth is paramount. A signal containing frequency components up to 100 Hz theoretically requires sampling faster than 200 Hz. In practice, systems typically sample at 2.5 to 10 times the highest frequency of interest, leaving room for the gradual roll-off of real anti-aliasing filters and providing margin against signal variability.
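The rule of thumb above can be sketched in Python as a multiplier on the highest frequency of interest, followed by rounding up to a rate the converter actually offers. Both function names and the list of supported ADC rates are hypothetical; real converters publish their own rate tables.

```python
def practical_sampling_rate(f_max_hz: float, factor: float = 2.5) -> float:
    """Sampling rate chosen as a multiple of the highest frequency of interest.

    factor == 2 would sit exactly at the Nyquist rate; real anti-aliasing
    filters need a transition band, so the factor must be larger.
    """
    if factor <= 2.0:
        raise ValueError("factor must exceed 2 to stay above the Nyquist rate")
    return factor * f_max_hz


def pick_supported_rate(target_hz: float, supported_hz: list[float]) -> float:
    """Smallest rate the (hypothetical) ADC supports that meets the target."""
    candidates = sorted(r for r in supported_hz if r >= target_hz)
    if not candidates:
        raise ValueError("no supported rate is high enough")
    return candidates[0]


ADC_RATES_HZ = [100, 250, 500, 1_000, 2_000, 5_000]  # hypothetical device

for factor in (2.5, 5.0, 10.0):
    target = practical_sampling_rate(100.0, factor)
    print(factor, target, pick_supported_rate(target, ADC_RATES_HZ))
# 2.5 250.0 250
# 5.0 500.0 500
# 10.0 1000.0 1000
```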

Common Application Sampling Benchmarks

- Environmental Monitoring: 0.1 Hz to 1 Hz (temperature, humidity, pressure)

- Human Speech: 8 kHz to 16 kHz (telephony and basic voice recording)

- Music Recording: 44.1 kHz to 192 kHz (consumer to professional audio)

- Ultrasonic Testing: 1 MHz to 100 MHz (material inspection)

- Vibration Analysis: 10 kHz to 100 kHz (machinery condition monitoring)

- ECG Monitoring: 250 Hz to 1 kHz (cardiac signal acquisition)

💾 Storage Technologies and Their Implications

The choice of storage technology significantly influences system design and performance optimization strategies. Different storage media offer varying trade-offs between capacity, speed, cost, reliability, and power consumption. Understanding these characteristics helps engineers select appropriate solutions for their specific applications.

Flash memory, including SD cards and solid-state drives, provides excellent random access performance with no moving parts, making it ideal for portable and embedded applications. However, write endurance limitations require careful consideration for continuous high-rate logging. Each flash block tolerates only a limited number of program/erase cycles: typically a few hundred to a few thousand for the TLC and QLC cells common in consumer cards and drives, and tens of thousands or more for SLC. Wear leveling spreads writes across the device, but at high sampling rates the rated endurance can still be exhausted within months or years.
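A back-of-the-envelope endurance estimate helps decide whether a given card is adequate. The Python sketch below assumes ideal wear leveling and a constant write amplification factor; the 32 GB capacity, 1,000-cycle rating and factor of 2 are illustrative assumptions, and vendor endurance figures (often quoted as terabytes written) should take precedence when available.

```python
SECONDS_PER_DAY = 86_400


def flash_lifetime_days(capacity_bytes: float, pe_cycles: int,
                        write_rate_bps: float, write_amplification: float = 2.0) -> float:
    """Rough days until rated program/erase endurance is consumed.

    Assumes ideal wear leveling: every block is erased equally often, and
    the device physically writes `write_amplification` bytes per host byte.
    """
    total_host_writes = capacity_bytes * pe_cycles / write_amplification
    return total_host_writes / write_rate_bps / SECONDS_PER_DAY


CARD_BYTES = 32e9   # assumed 32 GB card
PE_CYCLES = 1_000   # assumed TLC-class rating

# Single channel, 1 kHz, 16-bit: 2,000 bytes/s.
print(round(flash_lifetime_days(CARD_BYTES, PE_CYCLES, 2_000) / 365, 1))  # years

# Eight channels, 100 kHz, 16-bit: 1.6 MB/s.
print(round(flash_lifetime_days(CARD_BYTES, PE_CYCLES, 1.6e6), 1))  # 115.7 days
```

Under these assumptions the slow logger outlives any practical deployment, while the high-rate configuration wears out the same card in under four months.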

Hard disk drives offer superior capacity-to-cost ratios and essentially unlimited write endurance for practical purposes. However, they consume more power, generate heat, and are sensitive to vibration and shock—considerations that matter in mobile or harsh environment deployments.

Cloud storage represents an increasingly viable option for applications with network connectivity. By offloading data to remote servers, local storage constraints become less limiting. However, this approach introduces dependencies on bandwidth availability, latency considerations, and ongoing operational costs.

🎚️ Adaptive Sampling Strategies

Rather than maintaining constant sampling rates regardless of signal activity, adaptive sampling techniques adjust acquisition parameters dynamically based on signal characteristics. This intelligent approach optimizes the performance-storage balance by allocating resources where they provide maximum value.

Event-driven sampling activates high-rate data capture only when specific conditions are met, such as when signal amplitude exceeds a threshold or when particular patterns are detected. Between events, the system operates at reduced rates or enters standby mode, dramatically reducing average data volumes while ensuring critical transients are captured with full fidelity.
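A minimal Python sketch of event-driven capture is shown below. It keeps a decimated stream during quiet periods and switches to full rate when the amplitude crosses a threshold. The pre-trigger buffer is an addition beyond the description above, included on the assumption that the onset of a transient matters. All names and parameter values are hypothetical.

```python
from collections import deque


def event_driven_capture(samples, threshold, pre=4, post=8, idle_decimation=10):
    """Return the (index, value) pairs kept by a simple trigger scheme.

    - Quiet periods: keep every `idle_decimation`-th sample (low-rate mode).
    - Event: when |x| >= threshold, keep full-rate data, consisting of up to
      `pre` buffered samples before the trigger, the trigger sample, and
      `post` samples after the last sample that crossed the threshold.
    """
    kept = []
    history = deque(maxlen=pre)  # unstored samples since the last kept one
    remaining = 0                # full-rate samples still owed after a trigger
    for i, x in enumerate(samples):
        if abs(x) >= threshold:
            kept.extend(history)  # flush pre-trigger context, oldest first
            history.clear()
            kept.append((i, x))
            remaining = post      # each new crossing extends the event
        elif remaining > 0:
            kept.append((i, x))
            remaining -= 1
        elif i % idle_decimation == 0:
            kept.append((i, x))
            history.clear()       # older samples would now be out of order
        else:
            history.append((i, x))
    return kept


signal = [0] * 30
signal[15] = 5  # a single transient
kept = event_driven_capture(signal, threshold=3, pre=2, post=3)
print([i for i, _ in kept])  # [0, 10, 13, 14, 15, 16, 17, 18, 20]
```

Thirty raw samples reduce to nine, and every sample around the transient is preserved at full rate.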

Level-crossing sampling represents another adaptive approach where samples are taken only when the signal changes by a predetermined amount rather than at fixed time intervals. This technique proves particularly effective for slowly varying signals with occasional rapid changes, capturing dynamics efficiently while minimizing redundant data during stable periods.
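The Python sketch below implements the send-on-delta variant of level-crossing sampling: a sample is kept only when it differs from the last kept value by at least `delta`. Classic level-crossing samplers compare against a fixed grid of levels instead, so treat this as one simple instance of the idea. The function name and the test signal are hypothetical.

```python
def level_crossing_sample(samples, delta):
    """Send-on-delta: keep a sample only when it has moved by >= delta
    since the last kept sample. Returns (index, value) pairs."""
    if delta <= 0:
        raise ValueError("delta must be positive")
    kept = []
    last = None
    for i, x in enumerate(samples):
        if last is None or abs(x - last) >= delta:
            kept.append((i, x))
            last = x
    return kept


# A temperature-like signal: flat, a short ramp of +0.5 per sample, flat again.
signal = [20.0] * 50 + [20.0 + 0.5 * k for k in range(1, 11)] + [25.0] * 50
kept = level_crossing_sample(signal, delta=1.0)
print(len(signal), len(kept))   # 110 6
print([i for i, _ in kept])     # [0, 51, 53, 55, 57, 59]
```

The stable stretches cost a single sample each, while the ramp is tracked at the resolution set by `delta`.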

Implementing Multi-Rate Architectures

Multi-rate systems employ different sampling rates for different channels or parameters based on their individual requirements. A structural health monitoring system might sample accelerometers at 10 kHz to capture vibration dynamics while measuring strain gauges at 100 Hz and temperature sensors at 0.1 Hz. This tailored approach prevents over-sampling slow signals and under-sampling fast signals, optimizing overall system efficiency.
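To quantify the savings in the structural health monitoring example, the Python sketch below compares the daily volume of the multi-rate plan with sampling every channel at 10 kHz. It assumes one sensor of each type and 16-bit samples throughout, neither of which the example specifies.

```python
SECONDS_PER_DAY = 86_400
BYTES_PER_SAMPLE = 2  # assumed 16-bit samples on every channel

# Hypothetical channel plan: one sensor of each type from the example.
channel_rates_hz = {"accelerometer": 10_000, "strain_gauge": 100, "temperature": 0.1}


def daily_bytes(rates_hz: dict[str, float]) -> float:
    """Total bytes per day for a set of channels with individual rates."""
    return sum(rates_hz.values()) * BYTES_PER_SAMPLE * SECONDS_PER_DAY


multi_rate = daily_bytes(channel_rates_hz)
uniform = daily_bytes({name: 10_000 for name in channel_rates_hz})
print(f"multi-rate: {multi_rate / 1e9:.3f} GB/day")  # 1.745
print(f"uniform:    {uniform / 1e9:.3f} GB/day")     # 5.184
```

Almost all of the multi-rate volume comes from the accelerometer, so adding more slow sensors barely changes the total.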

📉 Data Compression and Reduction Techniques

Compression algorithms reduce storage requirements by eliminating redundancy in captured data. Lossless compression techniques, such as those based on dictionary encoding or differential coding, preserve every detail of the original signal while achieving moderate compression ratios typically between 1.5:1 and 3:1 for scientific data.
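Differential coding followed by a general-purpose compressor can be sketched with Python's standard `struct` and `zlib` modules. The scheme is an illustrative assumption rather than a standard file format: it delta-codes signed 16-bit samples, wraps each difference into 16 bits so that it fits in two bytes, and then applies DEFLATE. The round trip is exact, so no information is lost.

```python
import math
import struct
import zlib


def delta_encode(samples: list[int]) -> bytes:
    """Delta-code signed 16-bit samples, then deflate them (lossless)."""
    prev = 0
    deltas = []
    for s in samples:
        # Wrap each difference into 16 bits; modular arithmetic keeps it reversible.
        deltas.append((s - prev) & 0xFFFF)
        prev = s
    raw = struct.pack(f"<{len(deltas)}H", *deltas)
    return zlib.compress(raw, 9)


def delta_decode(blob: bytes) -> list[int]:
    """Invert delta_encode exactly."""
    raw = zlib.decompress(blob)
    deltas = struct.unpack(f"<{len(raw) // 2}H", raw)
    out = []
    prev = 0  # running value, kept in the unsigned 16-bit domain
    for d in deltas:
        prev = (prev + d) & 0xFFFF
        out.append(prev - 0x10000 if prev >= 0x8000 else prev)  # back to signed
    return out


# A slowly varying 16-bit signal: neighbouring samples differ only slightly.
samples = [round(1000 * math.sin(2 * math.pi * i / 500)) for i in range(5_000)]
blob = delta_encode(samples)
assert delta_decode(blob) == samples  # lossless round trip
print(f"{2 * len(samples)} raw bytes -> {len(blob)} compressed bytes")
```

Differencing turns a smooth waveform into small, repetitive values, which is what the dictionary stage of DEFLATE exploits. The achievable ratio depends heavily on the noise level of real data.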

Lossy compression accepts controlled degradation in signal fidelity to achieve higher compression ratios. Audio codecs like MP3 and AAC demonstrate this approach, delivering 10:1 compression or greater by discarding psychoacoustically irrelevant information. In scientific applications, lossy compression requires careful validation to ensure that information relevant to analysis objectives remains intact.
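Perceptual codecs such as MP3 and AAC are far more elaborate, but the validation step can be illustrated with a simpler lossy technique: uniform requantization to a coarser step. The Python sketch below bounds the reconstruction error at half a step and checks that bound explicitly. The 0.01 step is a hypothetical tolerance standing in for whatever resolution the analysis actually needs.

```python
import math


def quantize(samples, step):
    """Lossy step: round each sample to the nearest multiple of `step`.
    The integer codes are small and compress well downstream."""
    return [round(x / step) for x in samples]


def dequantize(codes, step):
    """Reconstruct approximate sample values from integer codes."""
    return [c * step for c in codes]


def max_abs_error(original, reconstructed):
    return max(abs(a - b) for a, b in zip(original, reconstructed))


signal = [math.sin(i / 50) for i in range(1_000)]
step = 0.01  # assumed resolution the analysis can tolerate
codes = quantize(signal, step)
error = max_abs_error(signal, dequantize(codes, step))

# Uniform rounding bounds the error at step / 2 (plus float rounding).
assert error <= step / 2 + 1e-12
print(f"{len(set(codes))} distinct codes, max error {error:.4f}")
```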

Edge processing and feature extraction represent alternative data reduction strategies. Instead of storing raw samples, algorithms extract relevant parameters or features from the signal stream. A vibration monitoring system might compute and store frequency spectra or statistical measures rather than retaining time-domain waveforms, reducing data volumes by orders of magnitude while preserving diagnostic information.
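A minimal Python sketch of edge feature extraction reduces each window of raw samples to RMS level, peak, crest factor and dominant frequency. This feature set is an illustrative choice, not a standard one. For clarity the sketch uses a naive DFT; a deployed system would use an FFT library or DSP hardware.

```python
import math


def window_features(window, fs_hz):
    """Reduce one window of raw samples to a few diagnostic features."""
    n = len(window)
    rms = math.sqrt(sum(x * x for x in window) / n)
    peak = max(abs(x) for x in window)
    # Naive DFT over positive-frequency bins, skipping DC (O(n^2), sketch only).
    best_bin, best_mag = 0, -1.0
    for k in range(1, n // 2 + 1):
        re = sum(x * math.cos(2 * math.pi * k * i / n) for i, x in enumerate(window))
        im = -sum(x * math.sin(2 * math.pi * k * i / n) for i, x in enumerate(window))
        mag = math.hypot(re, im)
        if mag > best_mag:
            best_bin, best_mag = k, mag
    return {
        "rms": rms,
        "peak": peak,
        "crest_factor": peak / rms if rms else float("inf"),
        "dominant_hz": best_bin * fs_hz / n,  # bin spacing is fs / n
    }


fs = 1_000
window = [math.sin(2 * math.pi * 50 * i / fs) for i in range(200)]
print(window_features(window, fs))
```

For this 200-sample window of a 50 Hz sine, four numbers replace 200 raw values. The dominant frequency is resolved only to the bin spacing of fs / n, here 5 Hz.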

⚖️ Balancing Real-Time Requirements and Storage

Real-time systems impose additional constraints beyond simple sampling and storage considerations. Processing latency, deterministic response times, and continuous data flow requirements influence architecture decisions and optimization strategies.

Buffering mechanisms help manage the mismatch between continuous data acquisition and discontinuous storage operations. Circular buffers temporarily hold incoming samples, allowing storage subsystems to write data in efficient blocks rather than individual samples. Buffer sizing requires careful calculation to prevent overflow during worst-case storage latency periods.
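The sketch below shows one possible buffer-sizing rule and a simple circular buffer in Python. The sizing assumes the buffer must absorb incoming samples for the full worst-case storage stall, scaled by a hypothetical 1.5x safety margin. The 250 ms stall in the usage line is also an assumption; actual figures must come from measuring the real storage device.

```python
import math


def required_buffer_samples(fs_hz, channels, worst_case_latency_s, margin=1.5):
    """Samples the buffer must hold to ride out the slowest storage write."""
    return math.ceil(fs_hz * channels * worst_case_latency_s * margin)


class RingBuffer:
    """Fixed-capacity circular buffer: acquisition pushes single samples,
    and the storage task drains them in blocks."""

    def __init__(self, capacity):
        self._data = [0] * capacity
        self._capacity = capacity
        self._head = 0      # next write position
        self._count = 0     # samples currently held
        self.overflows = 0  # samples dropped because the buffer was full

    def push(self, sample):
        if self._count == self._capacity:
            self.overflows += 1  # drop the new sample and record the loss
            return False
        self._data[self._head] = sample
        self._head = (self._head + 1) % self._capacity
        self._count += 1
        return True

    def pop_block(self, max_items):
        """Remove and return up to max_items of the oldest samples, in order."""
        n = min(max_items, self._count)
        tail = (self._head - self._count) % self._capacity
        block = [self._data[(tail + i) % self._capacity] for i in range(n)]
        self._count -= n
        return block


# 4 channels at 10 kHz with an assumed 250 ms worst-case write stall.
print(required_buffer_samples(10_000, 4, 0.25))  # 15000
```

A non-zero `overflows` counter means the buffer was undersized for the latency that actually occurred.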

Dual-buffer or ping-pong architectures enable simultaneous acquisition and storage operations. While one buffer fills with incoming samples, the system writes the other buffer’s contents to storage. This approach maximizes throughput and minimizes the risk of data loss, though it doubles memory requirements.
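The ping-pong idea can be sketched in Python as two fixed-size buffers that swap roles each time the active one fills. The class name is hypothetical. The returned buffer is the live object rather than a copy, so the sketch relies on the real ping-pong timing constraint: storage must finish writing one buffer before acquisition fills the other.

```python
class PingPongBuffer:
    """Two fixed-size buffers: acquisition fills one while storage drains
    the other. Total memory is twice the block size."""

    def __init__(self, size):
        self._buffers = [[None] * size, [None] * size]
        self._size = size
        self._active = 0  # index of the buffer currently being filled
        self._fill = 0

    def write(self, sample):
        """Store one sample; return the just-filled buffer when a swap occurs.

        The returned list is reused two swaps later, so the storage task
        must finish with it before the other buffer fills up.
        """
        self._buffers[self._active][self._fill] = sample
        self._fill += 1
        if self._fill == self._size:
            full = self._buffers[self._active]
            self._active ^= 1  # acquisition continues in the other buffer
            self._fill = 0
            return full
        return None


pp = PingPongBuffer(3)
blocks = []
for s in range(7):
    full = pp.write(s)
    if full is not None:
        blocks.append(list(full))  # stand-in for "write block to storage"
print(blocks)  # [[0, 1, 2], [3, 4, 5]]
```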

🔧 Practical Optimization Workflow

Optimizing the sampling rate and storage balance follows a systematic methodology that begins with defining system requirements and constraints. This process ensures that technical decisions align with application objectives and available resources.

First, characterize the signals of interest. Determine their frequency content through spectral analysis of representative data. Identify the highest frequency components that contain relevant information for your application. This analysis establishes the minimum sampling rate according to Nyquist criteria.

Second, calculate storage requirements based on identified sampling rates, number of channels, bit depth, and required recording duration. Compare these requirements against available storage capacity, factoring in overhead for file systems, metadata, and safety margins.

Third, identify optimization opportunities. Can adaptive sampling reduce average data rates? Would compression provide sufficient space savings without compromising analysis quality? Could edge processing extract essential information, eliminating the need to store raw data?

Fourth, prototype and validate the optimized configuration. Verify that captured data meets quality standards for intended analyses. Confirm that storage capacity suffices for expected operating periods. Test system behavior under worst-case conditions to ensure reliability.
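The capacity check in the second step can be sketched as a small Python function. The 5% file-system and metadata overhead, the 20% safety margin, the 64 GB card and the 90-day requirement are all placeholder assumptions, to be replaced with measured overheads and real requirements.

```python
SECONDS_PER_DAY = 86_400


def days_of_recording(capacity_bytes, fs_hz, bit_depth, channels,
                      overhead=0.05, safety_margin=0.20):
    """Days of raw recording that fit on a device.

    overhead:      extra bytes per data byte for file system and metadata
    safety_margin: fraction of capacity held in reserve
    (both defaults are illustrative assumptions)
    """
    bytes_per_day = fs_hz * bit_depth / 8 * channels * SECONDS_PER_DAY
    usable = capacity_bytes * (1 - safety_margin)
    return usable / (bytes_per_day * (1 + overhead))


REQUIRED_DAYS = 90  # hypothetical deployment requirement
days = days_of_recording(64e9, fs_hz=1_000, bit_depth=16, channels=4)
print(f"{days:.1f} days available; requirement met: {days >= REQUIRED_DAYS}")
# 70.5 days available; requirement met: False
```

A shortfall like this one is the cue for the third step: adaptive sampling, compression or edge processing would need to cut the data rate by roughly a quarter, or the card would need to be larger.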

🌐 Industry-Specific Considerations

Different industries face unique challenges that influence how they approach the sampling-storage optimization problem. Understanding these domain-specific requirements helps tailor solutions effectively.

In healthcare applications, regulatory requirements often mandate specific sampling rates and data retention policies. ECG monitors must sample at rates sufficient to capture cardiac dynamics accurately while storing data for legally specified periods. Privacy concerns may require local storage rather than cloud solutions, constraining capacity options.

Industrial IoT deployments typically involve numerous distributed sensors with limited local storage and intermittent connectivity. Edge intelligence becomes critical, with devices preprocessing data locally and transmitting only aggregated results or anomaly reports to central systems. This architecture minimizes bandwidth and storage requirements while maintaining operational visibility.

Scientific instrumentation prioritizes data quality and completeness above storage efficiency. Researchers prefer over-sampling and storing raw data to enable future reanalysis with improved algorithms. However, large-scale experiments generating terabytes daily require sophisticated data management strategies, including hierarchical storage systems that migrate older data to archival media.

📱 Modern Tools and Technologies

Contemporary data acquisition systems benefit from powerful tools that simplify optimization and management of sampling and storage parameters. Software frameworks provide high-level interfaces for configuring acquisition parameters, implementing compression, and managing storage resources.

Digital signal processors and field-programmable gate arrays enable sophisticated real-time processing at the edge, implementing adaptive sampling algorithms and compression with minimal latency. These hardware accelerators handle computationally intensive tasks that would overwhelm general-purpose processors, enabling more aggressive optimization strategies.

Machine learning algorithms increasingly contribute to intelligent data management. Anomaly detection models identify interesting events that warrant high-rate capture while allowing reduced sampling during normal operations. Predictive models forecast storage consumption patterns, enabling proactive capacity management.

🚀 Future Trends and Emerging Solutions

Technological evolution continues to shift the boundaries of what’s possible in optimizing sampling and storage trade-offs. Advances in storage technology steadily increase capacity while reducing costs, relaxing constraints that previously forced difficult compromises.

Computational storage devices integrate processing capabilities directly into storage media, enabling compression, encryption, and feature extraction to occur within the storage subsystem itself. This architecture reduces data movement, improves performance, and simplifies system design.

5G and next-generation wireless technologies provide bandwidth sufficient for streaming high-rate sensor data to remote processing and storage facilities. This connectivity enables thin edge devices with minimal local storage to support applications previously requiring substantial embedded capacity.

Quantum sensing technologies promise revolutionary improvements in measurement sensitivity and temporal resolution. However, they will also generate unprecedented data volumes, making optimization of sampling and storage even more critical for practical deployment.

🎓 Key Principles for Optimal Performance

Successful optimization requires balancing multiple competing factors while maintaining focus on application objectives. Several guiding principles help navigate this complexity.

Sample at rates justified by signal characteristics and analysis requirements, neither under-sampling critical components nor over-sampling unnecessarily. Remember that higher isn’t always better—excessive sampling wastes resources without improving results.

Design storage capacity with appropriate safety margins, accounting for worst-case scenarios and unexpected events. Running out of storage during critical operations can result in irreplaceable data loss.

Implement monitoring and alerting to track storage consumption and system health. Proactive management prevents surprise failures and enables timely intervention when conditions deviate from expectations.

Document configuration decisions and their rationale thoroughly. Future maintenance, troubleshooting, and system modifications benefit greatly from understanding why particular sampling rates and storage strategies were selected.

Validate optimizations through testing with representative data and operating conditions. Theoretical calculations provide guidance, but empirical verification ensures that real-world performance meets requirements.

💡 Maximizing Value from Every Sample

The ultimate goal of optimizing sampling rates and storage constraints isn’t simply to minimize costs or maximize data volumes—it’s to extract maximum insight and value from available resources. This requires thinking holistically about the entire data pipeline from sensor to analysis.

High-quality metadata substantially increases the value of the data. Timestamps, configuration parameters, calibration information, and environmental conditions help contextualize measurements, enabling more sophisticated analysis and longer-term data utility. Allocating storage for comprehensive metadata pays dividends throughout the data lifecycle.

Retention policies should reflect data value over time. Recent high-resolution data supports immediate operational decisions, while historical data enables trend analysis and model development. Hierarchical storage strategies that progressively downsample or aggregate aging data optimize storage utilization while preserving long-term insights.
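A hierarchical retention policy can be sketched in Python as a table of age tiers plus a block aggregator. The tier boundaries (raw data for 7 days, 60:1 up to 90 days, 3,600:1 after that) are hypothetical. Storing min and max alongside the mean is one common choice because it preserves short peaks that a plain average would smooth away.

```python
# Hypothetical retention tiers: (maximum age in days, downsampling factor).
RETENTION_TIERS = [(7, 1), (90, 60)]
ARCHIVE_FACTOR = 3_600  # beyond the last tier: one aggregate per 3,600 samples


def factor_for_age(age_days: float) -> int:
    """Downsampling factor that applies to data of the given age."""
    for max_age, factor in RETENTION_TIERS:
        if age_days <= max_age:
            return factor
    return ARCHIVE_FACTOR


def downsample_minmax_mean(samples, factor):
    """Aggregate consecutive blocks of `factor` samples into (min, mean, max)."""
    out = []
    for start in range(0, len(samples), factor):
        block = samples[start:start + factor]
        out.append((min(block), sum(block) / len(block), max(block)))
    return out


print(factor_for_age(3), factor_for_age(30), factor_for_age(400))  # 1 60 3600
print(downsample_minmax_mean([1, 2, 3, 4, 5, 6, 7], 3))
# [(1, 2.0, 3), (4, 5.0, 6), (7, 7.0, 7)]
```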

By carefully considering signal characteristics, selecting appropriate technologies, implementing intelligent sampling strategies, and employing effective data management practices, engineers can design systems that capture essential information reliably while respecting practical constraints. The perfect balance between sampling rate and storage isn’t a single point but rather a dynamic equilibrium that evolves with application needs, technological capabilities, and operational experience. Success comes from understanding the trade-offs, applying proven principles, and continuously refining the approach based on real-world performance.

Toni Santos is a bioacoustic researcher and conservation technologist specializing in the study of animal communication systems, acoustic monitoring infrastructures, and the sonic landscapes embedded in natural ecosystems. Through an interdisciplinary and sensor-focused lens, Toni investigates how wildlife encodes behavior, territory, and survival into the acoustic world, across species, habitats, and conservation challenges. His work is grounded in a fascination with animals not only as lifeforms, but as carriers of acoustic meaning. From endangered vocalizations to soundscape ecology and bioacoustic signal patterns, Toni uncovers the technological and analytical tools through which researchers preserve their understanding of the acoustic unknown. With a background in applied bioacoustics and conservation monitoring, Toni blends signal analysis with field-based research to reveal how sounds are used to track presence, monitor populations, and decode ecological knowledge. As the creative mind behind Nuvtrox, Toni curates indexed communication datasets, sensor-based monitoring studies, and acoustic interpretations that revive the deep ecological ties between fauna, soundscapes, and conservation science. His work is a tribute to:

- The archived vocal diversity of Animal Communication Indexing

- The tracked movements of Applied Bioacoustics Tracking

- The ecological richness of Conservation Soundscapes

- The layered detection networks of Sensor-based Monitoring

Whether you're a bioacoustic analyst, conservation researcher, or curious explorer of acoustic ecology, Toni invites you to explore the hidden signals of wildlife communication: one call, one sensor, one soundscape at a time.