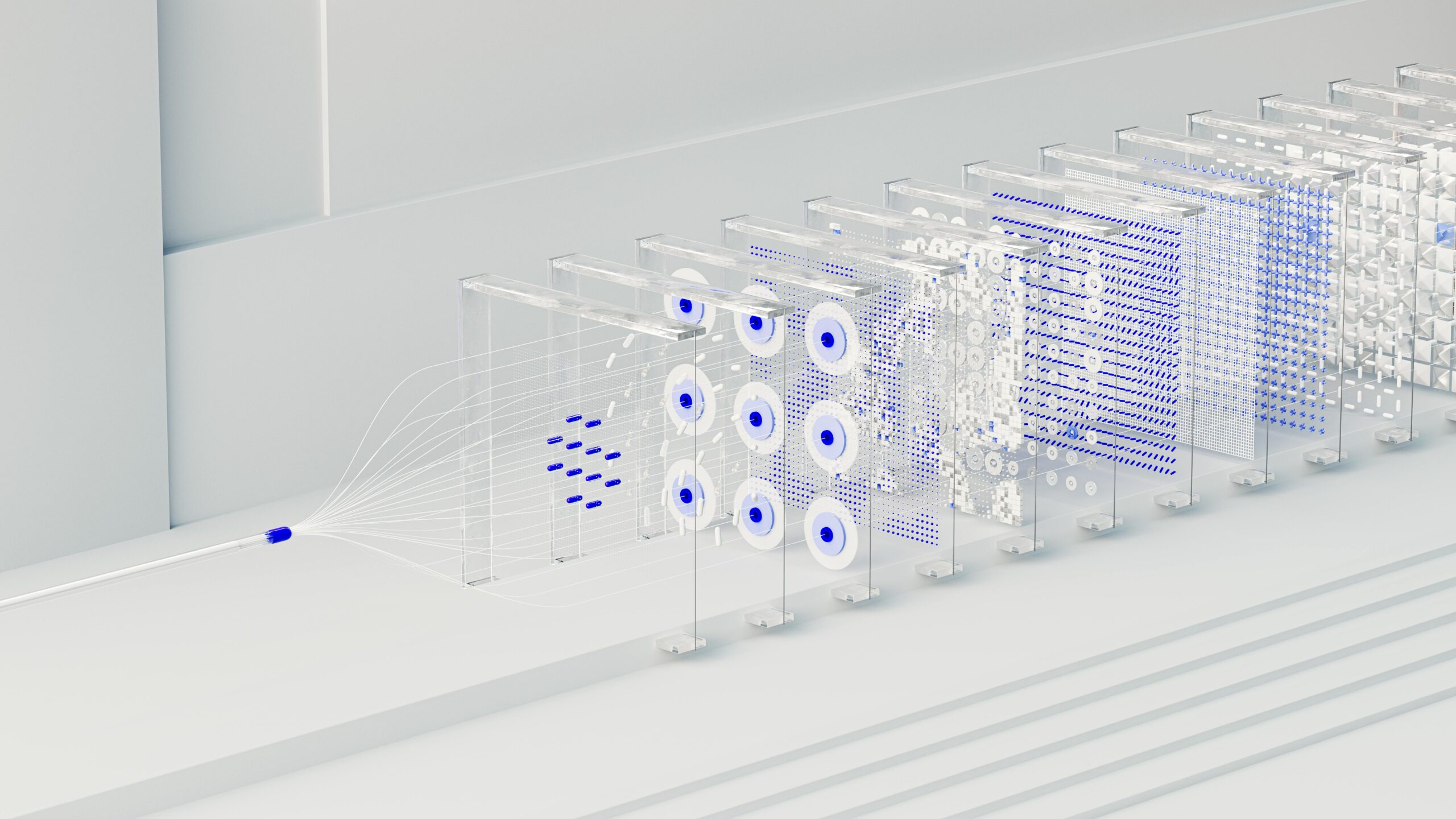

Multi-sensor fusion combines acoustic recordings, visual imagery, and climate measurements into a single, coherent data picture, enabling analyses across industries that no individual sensor could support on its own.

🔬 The Revolution of Multi-Sensor Integration

In today’s data-driven world, a single data source is often not enough for complex decisions. Multi-sensor fusion merges disparate streams from acoustic sensors, camera systems, and climate monitors into one coherent, actionable picture. Because each sensor contributes information the others lack, the combined system can reveal patterns that none of its parts would detect alone.

Organizations across sectors are discovering that integrating multiple sensor types provides context-rich data that single modalities simply cannot deliver. When acoustic patterns, visual information, and environmental conditions converge, they paint a comprehensive picture of real-world scenarios that drive smarter decisions and more accurate predictions.

Understanding the Core Sensor Technologies

Acoustic Sensing: The Sound of Data 🎵

Acoustic sensors capture sound waves and vibrations, which often carry information that other detection methods miss. These devices range from simple microphones to sophisticated ultrasonic arrays that detect frequencies above the roughly 20 kHz upper limit of human hearing. In industrial settings, acoustic monitoring can identify equipment malfunctions before they become catastrophic failures, while in environmental applications it tracks wildlife populations and ecosystem health.

Modern acoustic sensors employ advanced signal processing algorithms that filter background noise and isolate relevant audio signatures. Machine learning models trained on acoustic data can distinguish between normal operational sounds and anomalies requiring attention, providing early warning systems that save both time and resources.
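
The core idea of separating "normal" from "anomalous" sound can be sketched without any machine learning. The example below is a minimal sketch that swaps the trained model for a simple statistical baseline: it measures the RMS level of fixed-length frames and flags frames that sit more than a few standard deviations from levels recorded during normal operation. The function names, the 3-sigma threshold, and the synthetic 50 Hz hum are all illustrative assumptions.

```python
import math
from statistics import mean, stdev

def frame_rms(samples, frame_len):
    """Split a signal into non-overlapping frames and return the RMS level of each frame."""
    return [
        math.sqrt(sum(x * x for x in samples[i:i + frame_len]) / frame_len)
        for i in range(0, len(samples) - frame_len + 1, frame_len)
    ]

def flag_anomalies(monitored_rms, baseline_rms, z_threshold=3.0):
    """Indices of frames whose RMS lies more than z_threshold standard deviations from the baseline mean."""
    mu = mean(baseline_rms)
    sigma = stdev(baseline_rms)
    return [i for i, r in enumerate(monitored_rms) if abs(r - mu) > z_threshold * sigma]

def tone(amplitudes, freq=50.0, fs=1000, frame_len=100):
    """Hypothetical test signal: one frame of a 50 Hz hum per amplitude value."""
    return [a * math.sin(2 * math.pi * freq * n / fs)
            for a in amplitudes for n in range(frame_len)]

# "Normal" operation: the hum level wobbles by a couple of percent.
baseline = frame_rms(tone([1.0, 1.02, 0.98, 1.01, 0.99, 1.0, 1.02, 0.98, 1.01, 0.99]), 100)
# Monitored period: frame 7 contains a loud event, such as a rattle or impact.
monitored = frame_rms(tone([1.0, 1.01, 0.99, 1.0, 1.0, 1.0, 1.0, 3.0, 1.0, 1.0]), 100)
flagged = flag_anomalies(monitored, baseline)
print(flagged)  # [7]
```

A real system would typically compute richer features (band energies, spectral shape) and learn the boundary from labelled data, but the structure of "learn what normal looks like, then flag departures" is the same.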

Camera Systems: Visual Intelligence Redefined 📷

Visual sensors have evolved far beyond traditional photography. Today’s camera systems incorporate thermal imaging, multispectral analysis, and high-speed capture, documenting phenomena that occur too quickly or too slowly for human perception. Computer vision algorithms process these visual streams in real time, identifying objects, tracking movements, and detecting subtle changes that indicate developing situations.
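
The simplest form of "detecting subtle changes" is frame differencing: compare each pixel with the previous frame and count how many changed by more than a threshold. The sketch below assumes 8-bit grayscale frames stored as nested lists and a hypothetical threshold of 25 intensity levels; production computer vision pipelines use background models and learned detectors, so treat this only as an illustration of the principle.

```python
def changed_pixels(prev, curr, threshold=25):
    """(row, col) positions where intensity changed by more than threshold between two grayscale frames."""
    return [
        (r, c)
        for r, (row_prev, row_curr) in enumerate(zip(prev, curr))
        for c, (p, q) in enumerate(zip(row_prev, row_curr))
        if abs(q - p) > threshold
    ]

def change_fraction(prev, curr, threshold=25):
    """Share of pixels that changed; a crude trigger for 'something is happening here'."""
    total = sum(len(row) for row in curr)
    return len(changed_pixels(prev, curr, threshold)) / total

prev = [[100] * 4 for _ in range(4)]
curr = [row[:] for row in prev]
curr[0][0] = 110                      # small flicker, below the threshold
for r, c in [(1, 1), (1, 2), (2, 1), (2, 2)]:
    curr[r][c] = 200                  # a bright object enters the scene
print(changed_pixels(prev, curr))     # [(1, 1), (1, 2), (2, 1), (2, 2)]
print(change_fraction(prev, curr))    # 0.25
```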

The integration of artificial intelligence with camera technology has unlocked facial recognition, gesture interpretation, and automated quality inspection systems. When combined with other sensor modalities, visual data provides spatial context and verification that enhances overall system reliability.

Climate Sensors: Environmental Context Matters 🌡️

Climate and environmental sensors measure temperature, humidity, pressure, wind speed, air quality, and numerous other atmospheric parameters. These measurements provide essential context for interpreting data from other sensors. A camera might detect reduced visibility, but humidity and particulate readings help determine whether fog, dust, or pollution is the cause. Sound itself travels faster in warmer air and is absorbed differently as humidity changes, so acoustic analysis needs environmental compensation to stay accurate.
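
Temperature compensation of acoustic ranging is a concrete case of climate data correcting another sensor. The sketch below uses the common linear approximation for the speed of sound in dry air, c ≈ 331.3 + 0.606·T m/s with T in °C, and ignores the smaller humidity effect; the function names and the 10 ms echo are illustrative.

```python
def speed_of_sound(temp_c):
    """Approximate speed of sound in dry air (m/s); linear fit, adequate near everyday temperatures."""
    return 331.3 + 0.606 * temp_c

def echo_distance(round_trip_s, temp_c):
    """Distance to a reflector from an echo's round-trip time, compensated for air temperature."""
    return speed_of_sound(temp_c) * round_trip_s / 2

for temp in (0, 20):
    print(f"{temp} degC: {echo_distance(0.01, temp):.4f} m")
```

The same 10 ms echo corresponds to about 1.657 m at 0 °C and 1.717 m at 20 °C, so an ultrasonic rangefinder that assumed a fixed temperature would be off by roughly 6 cm across that range.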

Advanced climate monitoring systems now incorporate IoT connectivity, enabling distributed sensor networks that map environmental conditions across large geographical areas. This spatial awareness proves invaluable for applications ranging from precision agriculture to smart city management.

The Synergy Effect: Why Fusion Outperforms Individual Sensors

The true power of multi-sensor fusion emerges from the complementary strengths of different sensor types. Each modality has inherent limitations that others can compensate for, creating a robust system resilient to individual sensor failures or challenging environmental conditions.

Consider a security application: conventional cameras excel in daylight but struggle in darkness; acoustic sensors detect sounds regardless of lighting but usually localize sources less precisely; and climate sensors flag conditions, such as fog or heavy rain, that degrade the other sensors or could mask an intrusion attempt. Together, these sensors form an integrated security system far more effective than any single technology.

Redundancy and Reliability

Multi-sensor systems inherently provide redundancy. When conditions degrade one sensor type, the others keep functioning. This redundancy turns what would otherwise be a catastrophic sensor failure into a manageable situation, with alternative data sources maintaining operational continuity.

Cross-validation between sensor types also improves data reliability. When multiple independent sensors confirm the same phenomenon through different measurement principles, confidence in the observation rises sharply, because independent sensors rarely make the same error at the same moment. This validation is particularly valuable in safety-critical applications, where false positives or negatives carry significant consequences.
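
A little probability shows how much confirmation buys and what it costs. Assuming each of three sensors fails independently with the same rates (a strong assumption: a storm can degrade several sensors at once), the chance that at least k of them fire follows a binomial sum. The per-sensor rates below are hypothetical.

```python
from math import comb

def at_least_k_of_n(p, k, n):
    """Probability that at least k of n independent sensors fire, if each fires with probability p."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))

P_FALSE_ALARM = 0.05  # hypothetical per-sensor false alarm probability
P_DETECT = 0.90       # hypothetical per-sensor detection probability

for k in (1, 2, 3):
    fa = at_least_k_of_n(P_FALSE_ALARM, k, 3)
    pd = at_least_k_of_n(P_DETECT, k, 3)
    print(f"require {k} of 3: false alarms {fa:.5f}, detections {pd:.3f}")
```

With these numbers, requiring two of three sensors to agree cuts the false alarm probability from about 14% (alarm on any single sensor) to about 0.7%, while the detection probability falls only from 99.9% to 97.2%. Requiring all three pushes false alarms lower still but drops detection to about 73%, which is the trade-off designers must weigh.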

Implementation Strategies for Maximum Effectiveness

Architectural Considerations

Successful multi-sensor fusion requires thoughtful system architecture. Centralized approaches process all sensor data at a single location, simplifying coordination but potentially creating bottlenecks and single points of failure. Distributed architectures perform local processing at sensor nodes, reducing bandwidth requirements and improving response times, but demand more sophisticated synchronization mechanisms.

Edge computing has emerged as a compelling middle ground, performing preliminary processing near sensors while reserving complex analysis for centralized systems. This hybrid approach balances latency, bandwidth, and computational efficiency considerations.
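
One common edge pattern is to summarize each window of raw readings locally and forward the raw data only when something interesting happens. The sketch below is a hypothetical message format (count, min, max, mean, plus raw samples on alert), not any particular platform's API; the alert threshold is an assumption.

```python
from statistics import mean

def summarize_window(readings, alert_threshold):
    """Condense one window of raw readings into a compact summary on the edge node.

    Raw samples travel upstream only when the window contains an alert, so the
    central system gets full detail exactly when deeper analysis is needed.
    """
    summary = {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": mean(readings),
    }
    if summary["max"] > alert_threshold:
        summary["raw"] = list(readings)
    return summary

quiet = summarize_window([0.10, 0.20, 0.15, 0.12], alert_threshold=1.0)
busy = summarize_window([0.10, 2.50, 0.20], alert_threshold=1.0)
print("raw" in quiet, "raw" in busy)  # False True
```

Sending four numbers instead of hundreds of samples per window is where the bandwidth savings come from; the central system still receives everything it needs when an event occurs.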

Data Synchronization and Temporal Alignment ⏱️

Proper temporal alignment of data streams represents a critical technical challenge. Sensors operating at different sampling rates with varying latencies must be synchronized to ensure fusion algorithms compare data from the same moment. Timestamp protocols, hardware triggers, and sophisticated interpolation techniques help maintain temporal coherence across heterogeneous sensor arrays.
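
Interpolation is the usual way to bring a slow stream onto a faster stream's timeline. The sketch below resamples a hypothetical 1 Hz temperature series at the timestamps of acoustic frames using linear interpolation, holding the first or last value outside the recorded range rather than extrapolating. It assumes both streams already share a common clock.

```python
from bisect import bisect_left

def interpolate_at(timestamps, values, t):
    """Linearly interpolate a sampled stream at time t (timestamps must be sorted ascending)."""
    if t <= timestamps[0]:
        return values[0]      # before the first sample: hold the first value
    if t >= timestamps[-1]:
        return values[-1]     # after the last sample: hold the last value
    i = bisect_left(timestamps, t)
    t0, t1 = timestamps[i - 1], timestamps[i]
    v0, v1 = values[i - 1], values[i]
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0)

def align(target_times, source_times, source_values):
    """Resample a source stream at each target timestamp."""
    return [interpolate_at(source_times, source_values, t) for t in target_times]

temp_times, temps = [0.0, 1.0, 2.0], [20.0, 21.0, 23.0]   # 1 Hz climate readings
frame_times = [0.25, 0.5, 1.5, 2.5]                        # acoustic frame timestamps
print(align(frame_times, temp_times, temps))               # [20.25, 20.5, 22.0, 23.0]
```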

The Network Time Protocol (NTP) and the Precision Time Protocol (PTP, IEEE 1588) provide standardized synchronization frameworks. NTP typically keeps clocks aligned to within milliseconds, while microsecond-level precision generally requires PTP with dedicated hardware timestamping. The required accuracy depends on the application: surveillance systems might tolerate millisecond variations, while industrial monitoring may demand microsecond precision.
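
At the heart of NTP is a four-timestamp exchange from which a client estimates its clock offset and the round-trip delay. The sketch below applies the standard offset and delay formulas to one exchange; timestamps are in integer microseconds to keep the arithmetic exact, and the scenario (client 5 ms behind, 10 ms each way) is made up.

```python
def ntp_offset_delay(t1, t2, t3, t4):
    """Clock offset and round-trip delay from one NTP-style request/response exchange.

    t1: client transmit time (client clock)
    t2: server receive time (server clock)
    t3: server transmit time (server clock)
    t4: client receive time (client clock)
    """
    offset = ((t2 - t1) + (t3 - t4)) / 2   # how far the client clock lags the server
    delay = (t4 - t1) - (t3 - t2)          # round trip, excluding server processing time
    return offset, delay

# Client 5 ms behind the server, 10 ms network delay each way, 1 ms server processing.
print(ntp_offset_delay(0, 15_000, 16_000, 21_000))  # (5000.0, 20000)
```

The offset formula assumes the outbound and return paths take equal time; any asymmetry shows up directly as offset error, which is one reason PTP relies on hardware timestamping close to the wire.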

Fusion Algorithms: Turning Raw Data Into Insights

The algorithms that integrate multi-sensor data determine system effectiveness. Various approaches exist, each with specific strengths and computational requirements.

Low-Level Fusion Techniques

Low-level fusion combines raw sensor measurements before feature extraction. Kalman filters exemplify this approach, merging noisy measurements from multiple sources into state estimates that are optimal when the system is linear and the noise is Gaussian. These techniques excel when sensors measure the same physical quantity through different methods, allowing a statistical combination that reduces uncertainty.
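
A one-dimensional Kalman filter is enough to show how two sensors measuring the same quantity are blended according to their noise levels. The sketch below assumes a random-walk state model and two hypothetical temperature sensors with measurement variances of 1.0 and 0.5; the class name and parameters are illustrative.

```python
class ScalarKalman:
    """One-dimensional Kalman filter with a random-walk state model."""

    def __init__(self, x0, p0, process_var):
        self.x = x0            # current state estimate
        self.p = p0            # variance of the estimate
        self.q = process_var   # uncertainty added per time step

    def predict(self):
        # Random walk: the state is expected to stay put, but uncertainty grows.
        self.p += self.q

    def update(self, z, r):
        # Blend measurement z (variance r) into the estimate using the Kalman gain.
        k = self.p / (self.p + r)
        self.x += k * (z - self.x)
        self.p *= 1 - k
        return self.x

kf = ScalarKalman(x0=20.0, p0=1.0, process_var=0.0)
kf.predict()
kf.update(22.0, r=1.0)   # sensor A: noisier
kf.update(24.0, r=0.5)   # sensor B: more precise, so it gets more weight
print(kf.x, kf.p)        # 22.5 0.25
```

With no process noise, sequential updates reproduce the inverse-variance weighted mean (20·1 + 22·1 + 24·2) / 4 = 22.5, and the fused variance of 0.25 is smaller than that of either sensor alone, which is exactly the uncertainty reduction described above.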

Bayesian networks provide probabilistic frameworks for low-level fusion, explicitly modeling uncertainty and sensor reliability. These methods gracefully handle missing data and sensor failures, maintaining system functionality even with degraded inputs.
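
The simplest Bayesian network, a naive-Bayes structure in which each sensor's report depends only on the hidden state, already shows how missing data is handled: a sensor with no reading simply contributes no likelihood factor. The sketch below computes the posterior probability of an event from binary camera and acoustic reports; the prior and the per-sensor rates are hypothetical, and conditional independence of the sensors is an assumption.

```python
def posterior_event(prior, sensors, observations):
    """Posterior P(event) from binary sensor reports, tolerating missing readings.

    prior:        P(event) before any reading
    sensors:      {name: (P(report | event), P(report | no event))}
    observations: {name: True or False}; a sensor that is absent or None is treated as missing
    Assumes reports are conditionally independent given the true state (naive Bayes).
    """
    p_event, p_normal = prior, 1.0 - prior
    for name, (p_hit, p_false) in sensors.items():
        obs = observations.get(name)
        if obs is None:
            continue  # missing or failed sensor: drop its factor instead of failing
        p_event *= p_hit if obs else 1.0 - p_hit
        p_normal *= p_false if obs else 1.0 - p_false
    return p_event / (p_event + p_normal)

SENSORS = {
    "camera": (0.90, 0.05),    # hypothetical detection / false-alarm rates
    "acoustic": (0.80, 0.10),
}
print(round(posterior_event(0.01, SENSORS, {"camera": True, "acoustic": True}), 3))  # 0.593
print(round(posterior_event(0.01, SENSORS, {"camera": True, "acoustic": None}), 3))  # 0.154
```

With the acoustic channel offline, the system still produces a usable (if less confident) estimate instead of failing outright.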

Feature-Level Integration

Feature-level fusion extracts characteristics from individual sensor streams before combining them. Acoustic data might yield frequency spectra, camera feeds produce object detections, and climate sensors provide environmental profiles. Machine learning classifiers then process these combined features to make decisions or predictions.

This intermediate approach balances computational efficiency with information preservation, discarding irrelevant raw data while retaining meaningful patterns. Deep learning architectures particularly excel at feature-level fusion, automatically learning optimal feature combinations through training.
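
Feature-level fusion can be illustrated without a neural network. The sketch below concatenates one hypothetical feature per modality (acoustic band energy, camera object count, air temperature), normalizes each feature so that different units are comparable, and classifies with a nearest-centroid rule, which stands in for the deep learning models mentioned above. The training data and labels are invented.

```python
from statistics import mean, pstdev

def fuse(acoustic, visual, climate):
    """Feature-level fusion: concatenate per-modality feature vectors into one."""
    return list(acoustic) + list(visual) + list(climate)

def fit_normalizer(rows):
    """Per-feature mean and standard deviation (constant features get a scale of 1)."""
    cols = list(zip(*rows))
    return [mean(c) for c in cols], [pstdev(c) or 1.0 for c in cols]

def normalize(row, means, stds):
    return [(x - m) / s for x, m, s in zip(row, means, stds)]

def nearest_centroid(train_rows, labels, query):
    """Classify query by the closest class centroid in normalized feature space."""
    means, stds = fit_normalizer(train_rows)
    normed = [normalize(r, means, stds) for r in train_rows]
    centroids = {}
    for lab in set(labels):
        members = [r for r, l in zip(normed, labels) if l == lab]
        centroids[lab] = [mean(c) for c in zip(*members)]
    q = normalize(query, means, stds)
    return min(centroids, key=lambda lab: sum((a - b) ** 2 for a, b in zip(q, centroids[lab])))

train = [
    fuse([0.20], [0], [20.0]), fuse([0.30], [0], [22.0]), fuse([0.25], [1], [21.0]),
    fuse([0.90], [3], [21.0]), fuse([1.00], [2], [20.0]), fuse([0.80], [3], [22.0]),
]
labels = ["normal"] * 3 + ["alert"] * 3
print(nearest_centroid(train, labels, fuse([0.85], [2], [21.5])))  # alert
print(nearest_centroid(train, labels, fuse([0.22], [0], [21.0])))  # normal
```

Normalization matters here: without it, the temperature feature (tens of degrees) would dominate the distance and drown out the acoustic feature (fractions of a unit).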

Decision-Level Fusion

Decision-level fusion lets each sensor system analyze its data and reach conclusions independently before the results are combined. Voting schemes, Dempster-Shafer theory, and ensemble methods aggregate the individual decisions into a final output. This high-level approach offers modularity and computational efficiency, but it can lose cross-sensor information that is discarded during independent processing.
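
Dempster-Shafer theory differs from plain probability in that a source can assign mass to "I cannot tell" as well as to specific hypotheses. The sketch below implements Dempster's rule of combination for two sources over the hypotheses "intrusion" and "normal"; the mass values for the camera and acoustic subsystems are hypothetical.

```python
from itertools import product

def dempster_combine(m1, m2):
    """Combine two mass functions with Dempster's rule of combination.

    Each mass function maps frozensets of hypotheses to belief mass summing to 1.
    Mass on a multi-element set expresses ignorance between those hypotheses.
    """
    combined = {}
    conflict = 0.0
    for (a, mass_a), (b, mass_b) in product(m1.items(), m2.items()):
        overlap = a & b
        if overlap:
            combined[overlap] = combined.get(overlap, 0.0) + mass_a * mass_b
        else:
            conflict += mass_a * mass_b  # the two sources point at incompatible hypotheses
    if 1.0 - conflict <= 1e-12:
        raise ValueError("sources are in total conflict; Dempster's rule is undefined")
    # Renormalize so the non-conflicting mass sums to 1.
    return {s: m / (1.0 - conflict) for s, m in combined.items()}

INTRUSION = frozenset({"intrusion"})
NORMAL = frozenset({"normal"})
EITHER = INTRUSION | NORMAL  # mass here means "cannot tell"

camera = {INTRUSION: 0.4, NORMAL: 0.1, EITHER: 0.5}    # hypothetical: dim night-time image
acoustic = {INTRUSION: 0.7, NORMAL: 0.1, EITHER: 0.2}  # hypothetical: clear sound of breaking glass
fused = dempster_combine(camera, acoustic)
print(round(fused[INTRUSION], 3), round(fused[NORMAL], 3), round(fused[EITHER], 3))  # 0.798 0.09 0.112
```

The uncertain camera contributes little on its own, but combined with the confident acoustic report the belief in an intrusion rises to about 0.8, while the remaining "cannot tell" mass stays explicit instead of being forced onto either hypothesis.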

Real-World Applications Transforming Industries

Smart Agriculture: Precision Farming Revolution 🌾

Agricultural operations use multi-sensor fusion to optimize crop yields while minimizing resource consumption. Camera-equipped drones capture multispectral imagery that reveals plant health, soil moisture sensors guide irrigation, and weather station data informs the timing of treatments. Acoustic sensors can even detect insect infestations before visible damage occurs.
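
A standard way to turn multispectral imagery into a plant-health map is the Normalized Difference Vegetation Index, NDVI = (NIR − Red) / (NIR + Red), which lies between −1 and 1 for non-negative reflectances; healthy vegetation reflects strongly in near-infrared and so scores higher. The sketch below computes NDVI per pixel and flags pixels under a threshold of 0.4, which is a hypothetical cut-off that in practice depends on crop and growth stage.

```python
def ndvi(nir, red):
    """Normalized Difference Vegetation Index for one pixel; None if there is no signal."""
    total = nir + red
    return None if total == 0 else (nir - red) / total

def flag_stressed(nir_band, red_band, threshold=0.4):
    """(row, col) of pixels whose NDVI falls below a hypothetical stress threshold."""
    flagged = []
    for r, (nir_row, red_row) in enumerate(zip(nir_band, red_band)):
        for c, (n, d) in enumerate(zip(nir_row, red_row)):
            value = ndvi(n, d)
            if value is not None and value < threshold:  # skip pixels with no signal
                flagged.append((r, c))
    return flagged

nir = [[0.50, 0.45], [0.30, 0.52]]  # near-infrared reflectance (illustrative values)
red = [[0.08, 0.10], [0.20, 0.06]]  # red reflectance
print(flag_stressed(nir, red))      # [(1, 0)]
```

The flagged coordinates are what make targeted intervention possible: treatment can be directed to the one stressed patch rather than the whole field.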

This integrated approach enables precision agriculture where interventions target specific field areas rather than blanket applications, reducing chemical usage, water consumption, and environmental impact while improving productivity and profitability.

Industrial Predictive Maintenance

Manufacturing facilities employ sensor fusion for predictive maintenance that minimizes unplanned downtime. Vibration sensors detect mechanical imbalances, thermal cameras identify overheating components, and acoustic monitors recognize bearing failures. Environmental sensors confirm whether ambient conditions contribute to degradation.

Machine learning models trained on this fused data can predict equipment failures days or weeks in advance, allowing maintenance to be scheduled during planned downtime rather than performed as emergency repairs that halt production. The economic impact can be substantial: industry studies have reported maintenance cost reductions of 25-30% and equipment uptime gains of 10-20% from predictive maintenance programs.
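
A very simple stand-in for failure prediction is to fit a trend to a degradation indicator and extrapolate it to an alarm level. The sketch below fits an ordinary least-squares line to daily vibration RMS readings and estimates the days remaining until a threshold is crossed. The readings, the 4.0 mm/s threshold, and the assumption of linear degradation are all hypothetical; real wear often accelerates, which is why learned models are used in practice.

```python
def linear_fit(xs, ys):
    """Ordinary least-squares slope and intercept."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx

def days_until_threshold(days, indicator, threshold):
    """Extrapolate a linear degradation trend to estimate days left before the alarm threshold.

    Returns None if the indicator is not trending upward.
    """
    slope, intercept = linear_fit(days, indicator)
    if slope <= 0:
        return None
    crossing_day = (threshold - intercept) / slope
    return max(0.0, crossing_day - days[-1])

days = [0, 1, 2, 3, 4]
vibration_rms = [2.0, 2.1, 2.2, 2.3, 2.4]  # mm/s, hypothetical daily readings
print(round(days_until_threshold(days, vibration_rms, threshold=4.0), 1))  # 16.0
```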

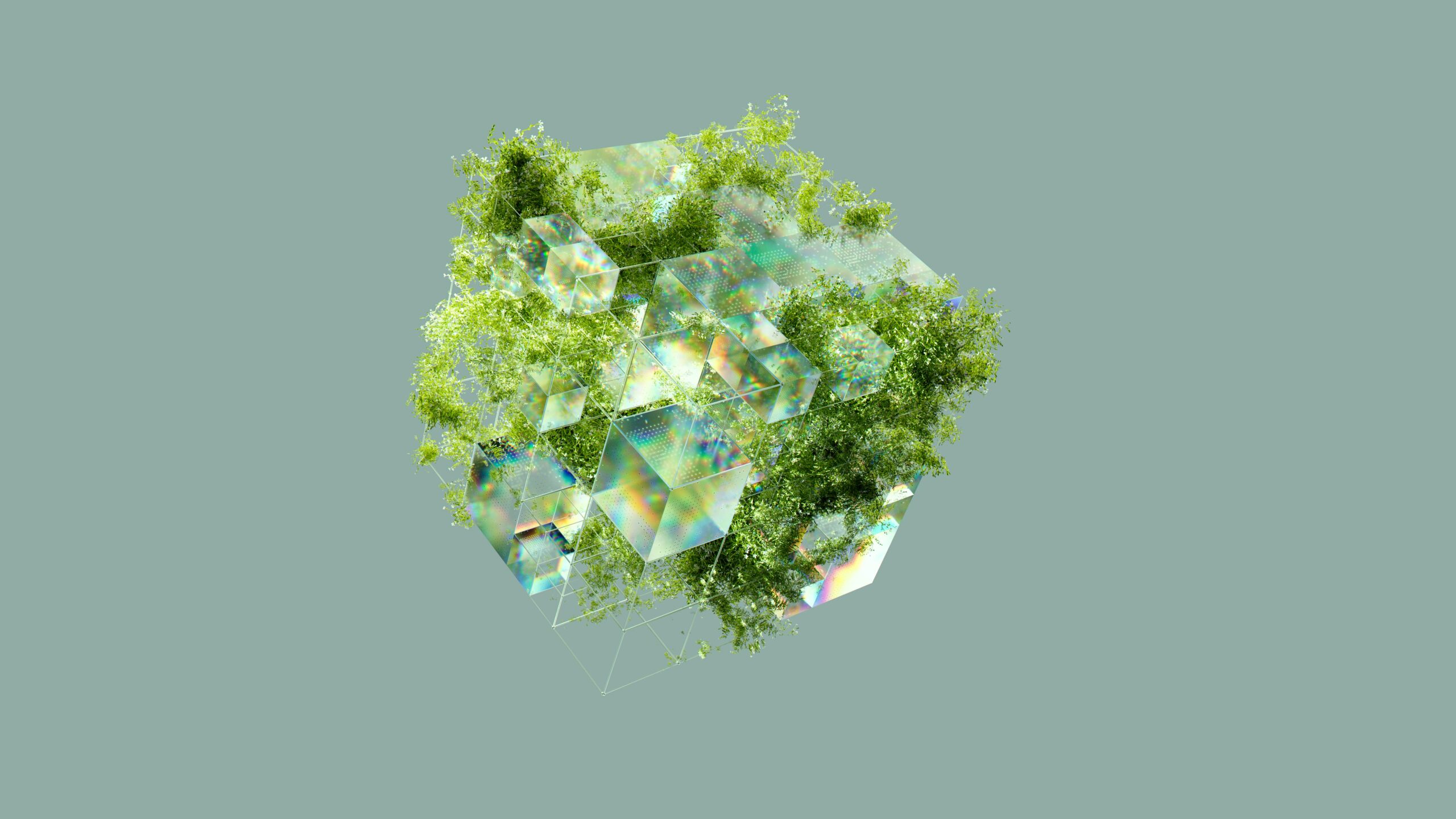

Environmental Monitoring and Conservation 🌍

Ecological research and conservation efforts benefit enormously from multi-sensor approaches. Camera traps document wildlife presence, acoustic recorders capture vocalizations for species identification, and climate stations track habitat conditions. This comprehensive monitoring reveals ecosystem dynamics, population trends, and environmental threats.

Marine environments particularly benefit from sensor fusion, where underwater cameras, hydrophones, and oceanographic instruments combine to study marine life, track migrations, and detect illegal fishing activities. The integration of these technologies has substantially expanded our understanding of ocean ecosystems.
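
Much of this value comes from corroborating detections across modalities, whether camera traps with acoustic recorders on land or underwater cameras with hydrophones at sea. A minimal sketch of that association step, assuming both devices share a clock and using a hypothetical ±10 s matching window, pairs each camera detection with the acoustic detections close to it in time.

```python
from bisect import bisect_left, bisect_right

def match_events(camera_times, acoustic_times, window_s):
    """Pair each camera detection with acoustic detections within +/- window_s seconds.

    acoustic_times must be sorted ascending. Returns {camera_time: [acoustic_times...]}.
    """
    matches = {}
    for t in camera_times:
        lo = bisect_left(acoustic_times, t - window_s)
        hi = bisect_right(acoustic_times, t + window_s)
        matches[t] = acoustic_times[lo:hi]
    return matches

camera = [100.0, 500.0]                     # camera-trap trigger times (s)
acoustic = [95.0, 102.5, 300.0, 530.0]      # call detections (s)
print(match_events(camera, acoustic, 10.0))  # {100.0: [95.0, 102.5], 500.0: []}
```

A sighting backed by a matching call is stronger evidence of presence than either alone, and unmatched events on either side point to individuals that only one modality could observe.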

Smart Cities and Urban Planning

Urban environments increasingly rely on sensor fusion for traffic management, public safety, and environmental quality monitoring. Traffic cameras track vehicle flow, acoustic sensors measure noise pollution, and air quality monitors detect harmful emissions. This integrated data informs traffic signal optimization, identifies pollution hotspots, and guides urban planning decisions.
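
Noise monitoring illustrates why sensor readings often cannot be averaged naively. Sound levels in decibels are logarithmic, so the equivalent continuous sound level (Leq) averages the underlying energy: Leq = 10·log10((1/N)·Σ 10^(Lᵢ/10)) for N equally spaced samples. A minimal sketch with made-up readings:

```python
import math

def leq(levels_db):
    """Equivalent continuous sound level of equally spaced dB samples (energy average)."""
    mean_energy = sum(10 ** (level / 10) for level in levels_db) / len(levels_db)
    return 10 * math.log10(mean_energy)

readings = [60, 60, 60, 70]  # hypothetical one-minute levels in dB
print(round(leq(readings), 1))            # 65.1
print(sum(readings) / len(readings))      # 62.5, the misleading arithmetic mean
```

A single loud minute dominates the energy average, which matches how people actually experience intermittent noise; the arithmetic mean would understate the exposure by more than 2 dB here.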

Smart city initiatives demonstrate how multi-sensor fusion scales from individual applications to city-wide systems, creating digital twins that mirror physical urban environments and enable predictive modeling of infrastructure changes before physical implementation.

Overcoming Implementation Challenges

Data Volume and Processing Requirements 💾

Multi-sensor systems generate massive data volumes requiring substantial storage and processing infrastructure. High-resolution cameras alone produce gigabytes per hour, and adding acoustic and climate data compounds the challenge. Cloud computing provides scalable solutions, though bandwidth costs and latency concerns limit certain applications.
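
A back-of-the-envelope estimate shows which stream dominates storage. The sketch below converts constant bitrates into gigabytes per hour; the bitrates are illustrative assumptions for one sensor node, not measurements.

```python
def gigabytes_per_hour(bits_per_second):
    """Storage for one hour of a constant-bitrate stream, in decimal gigabytes."""
    return bits_per_second * 3600 / 8 / 1e9

# Hypothetical bitrates for one sensor node.
STREAMS = {
    "camera, 4 Mbit/s compressed video": 4_000_000,
    "microphone, 48 kHz 16-bit mono PCM": 48_000 * 16,
    "climate station, 10 float64 values per second": 10 * 64,
}
for name, bps in STREAMS.items():
    print(f"{name}: {gigabytes_per_hour(bps):.6f} GB/hour")
```

Under these assumptions the camera produces about 1.8 GB per hour, uncompressed audio about 0.35 GB, and the climate station well under a megabyte, so video compression and event-triggered capture deliver most of the savings.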

Efficient data management strategies include intelligent compression, selective recording triggered by events rather than continuous capture, and hierarchical storage systems that retain recent data locally while archiving historical information remotely.
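
Event-triggered recording usually keeps a short rolling buffer so that the moments leading up to an event are not lost. The sketch below is a hypothetical recorder class: it holds the last few samples in a fixed-length deque, starts a clip when a sample exceeds a threshold, and keeps recording until a set number of quiet samples have passed. Buffer sizes and threshold are assumptions.

```python
from collections import deque

class EventRecorder:
    """Event-triggered capture: keep a short rolling history, store clips only around events."""

    def __init__(self, pre_samples, post_samples, threshold):
        self.pre = deque(maxlen=pre_samples)  # rolling pre-trigger history; old samples fall off
        self.post_samples = post_samples      # quiet samples to keep after the last trigger
        self.threshold = threshold
        self.remaining = 0
        self.current = None                   # clip being recorded, if any
        self.clips = []                       # finished clips, ready for storage or upload

    def push(self, sample):
        triggered = sample > self.threshold
        if self.current is None and triggered:
            self.current = list(self.pre)     # start the clip with the lead-up to the event
        if self.current is not None:
            self.current.append(sample)
            if triggered:
                self.remaining = self.post_samples  # a new trigger extends the clip
            else:
                self.remaining -= 1
            if self.remaining == 0:
                self.clips.append(self.current)
                self.current = None
        self.pre.append(sample)

rec = EventRecorder(pre_samples=2, post_samples=2, threshold=5)
for s in [1, 2, 3, 9, 4, 4, 4, 1, 1]:
    rec.push(s)
print(rec.clips)  # [[2, 3, 9, 4, 4]]
```

Only five of the nine samples are stored, and the two pre-trigger samples preserve the context an analyst would need to understand what led to the event.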

Calibration and Maintenance

Maintaining sensor accuracy over time demands regular calibration and maintenance protocols. Environmental exposure degrades sensor performance, requiring periodic verification against reference standards. Multi-sensor systems must coordinate calibration schedules to minimize operational disruption while ensuring data quality.

Automated health monitoring that detects sensor drift or failures enables proactive maintenance before data quality degrades. Self-calibration capabilities, where sensors cross-validate against each other, reduce manual calibration requirements.
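
Cross-validation for drift detection can be as simple as watching the difference between two co-located sensors that should read the same quantity. The sketch below flags time steps where the rolling mean of that difference exceeds a tolerance; the window length, tolerance, and readings are hypothetical. Note that a persistent offset shows the pair disagrees but not which sensor drifted; a third reference or a lab check is needed to decide that.

```python
from collections import deque

def drift_alerts(sensor, reference, window, tolerance):
    """Indices where the rolling mean difference between two co-located sensors exceeds tolerance."""
    diffs = deque(maxlen=window)
    alerts = []
    for i, (s, r) in enumerate(zip(sensor, reference)):
        diffs.append(s - r)
        if len(diffs) == window and abs(sum(diffs) / window) > tolerance:
            alerts.append(i)
    return alerts

reference = [20.0] * 8
drifting = [20.1, 19.9, 20.0, 20.2, 20.5, 20.8, 21.1, 21.4]  # slowly drifting sensor
print(drift_alerts(drifting, reference, window=3, tolerance=0.4))  # [5, 6, 7]
```

Averaging over a window keeps single noisy readings from triggering recalibration, while a sustained offset still surfaces within a few samples.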

Privacy and Security Considerations 🔒

Comprehensive sensing capabilities raise legitimate privacy concerns, particularly when cameras and microphones operate in public or semi-public spaces. Implementing privacy-preserving techniques like on-device processing, anonymization, and purpose-limited data retention addresses these concerns while maintaining system functionality.

Cybersecurity represents another critical consideration—networked sensor systems present attack surfaces that malicious actors might exploit. Encryption, authentication, and intrusion detection systems protect multi-sensor networks from unauthorized access and data manipulation.
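
Authentication of individual readings protects against data manipulation in transit. The sketch below attaches an HMAC-SHA256 tag, computed with Python's standard `hmac` and `hashlib` modules over a canonical JSON encoding, and verifies it on receipt. The message layout, sensor name, and shared key are hypothetical.

```python
import hashlib
import hmac
import json

def _canonical(reading):
    # Serialize deterministically so sender and receiver hash identical bytes.
    return json.dumps(reading, sort_keys=True, separators=(",", ":")).encode()

def sign_reading(key, reading):
    """Wrap a reading with an HMAC-SHA256 tag computed over its canonical JSON form."""
    tag = hmac.new(key, _canonical(reading), hashlib.sha256).hexdigest()
    return {"payload": reading, "tag": tag}

def verify_reading(key, message):
    """Recompute the tag and compare in constant time to resist timing attacks."""
    expected = hmac.new(key, _canonical(message["payload"]), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, message["tag"])

KEY = b"hypothetical-per-device-secret"  # in practice, provisioned securely per device
msg = sign_reading(KEY, {"sensor": "mic-07", "t": 1700000000, "leq_db": 62.4})
tampered = {"payload": dict(msg["payload"], leq_db=40.0), "tag": msg["tag"]}
print(verify_reading(KEY, msg), verify_reading(KEY, tampered))  # True False
```

An HMAC provides integrity and authenticity but not confidentiality, so readings that must stay private still need encryption (for example TLS), and the receiver should also reject stale timestamps to block replayed messages.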

The Future Landscape of Sensor Fusion Technology

Artificial Intelligence Integration

AI and machine learning continue revolutionizing sensor fusion capabilities. Deep learning models process raw multi-modal data end-to-end, automatically discovering optimal fusion strategies without manual feature engineering. Transfer learning allows models trained on large datasets to adapt quickly to new sensor configurations with minimal additional training.

Federated learning enables distributed sensor networks to collaboratively improve models while keeping raw data localized, addressing privacy concerns and bandwidth limitations. This approach proves particularly valuable for applications spanning multiple organizations or jurisdictions.
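
The aggregation step of federated averaging (FedAvg) shows why raw data never has to leave a site: only model parameters are shared, and the server averages them weighted by how much data each site trained on. The sketch below covers just that step, with made-up parameter vectors and sample counts; a full system repeats rounds of local training and aggregation.

```python
def federated_average(client_weights, client_sizes):
    """FedAvg-style aggregation: average client parameter vectors, weighted by local sample counts.

    Only parameters leave each site; the raw sensor data used to train them stays local.
    """
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    ]

sites = [[1.0, 0.0], [3.0, 2.0], [2.0, 4.0]]  # hypothetical parameters from three sites
samples = [100, 100, 200]                      # local training-set sizes
print(federated_average(sites, samples))       # [2.0, 2.5]
```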

Miniaturization and Energy Efficiency

Technological advances steadily reduce sensor size and power consumption, enabling deployment in previously impractical scenarios. Micro-electromechanical systems (MEMS) integrate multiple sensor types on single chips, while energy harvesting technologies power sensors from ambient sources, reducing or eliminating the need for battery replacement.
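
Duty cycling is the main lever behind low-power operation, and a simple energy budget shows why. The sketch below computes a node's average current draw, an idealized battery life (ignoring self-discharge, temperature, and capacity derating), and whether a given harvested current would make the node energy-neutral. All electrical figures are hypothetical.

```python
def average_current_ma(active_ma, sleep_ma, duty_cycle):
    """Average draw of a node that is active for duty_cycle of the time and asleep otherwise."""
    return duty_cycle * active_ma + (1 - duty_cycle) * sleep_ma

def battery_life_days(capacity_mah, active_ma, sleep_ma, duty_cycle):
    """Idealized battery life; ignores self-discharge, temperature, and capacity derating."""
    return capacity_mah / average_current_ma(active_ma, sleep_ma, duty_cycle) / 24

def energy_neutral(harvest_ma, active_ma, sleep_ma, duty_cycle):
    """True if average harvested current covers average consumption."""
    return harvest_ma >= average_current_ma(active_ma, sleep_ma, duty_cycle)

# Hypothetical node: 2000 mAh cell, 20 mA while sampling and transmitting, 10 µA asleep.
print(round(battery_life_days(2000, 20, 0.01, 1.0), 1))  # always on: 4.2 days
print(round(battery_life_days(2000, 20, 0.01, 0.01)))    # 1% duty cycle: 397 days
print(energy_neutral(0.5, 20, 0.01, 0.01))               # True
```

Cutting the active time to 1% stretches a few days of operation to more than a year, and at that point even a modest harvester can cover the average load.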

These developments enable pervasive sensing ecosystems where thousands of low-cost, low-power nodes create dense monitoring networks that capture phenomena at unprecedented spatial and temporal resolution.

Standardization and Interoperability 🔄

Industry standardization efforts aim to improve interoperability between sensors from different manufacturers. Common data formats, communication protocols, and fusion frameworks reduce integration complexity and costs. Open-source sensor fusion platforms democratize access to sophisticated capabilities, accelerating innovation and adoption.

Standards organizations are developing reference architectures and best practices that guide implementation while leaving room for application-specific customization. This balance between standardization and flexibility proves essential for technology maturation.

Building Your Multi-Sensor Fusion Strategy

Organizations considering multi-sensor fusion should begin with clear objectives defining what insights they seek and how these insights drive value. Starting with pilot projects limited in scope allows teams to gain experience before larger deployments. Choosing appropriate sensors requires balancing performance requirements, environmental conditions, budget constraints, and maintenance capabilities.

Partnering with experienced technology providers accelerates implementation and reduces technical risks. Comprehensive training ensures staff can effectively operate and maintain systems, maximizing return on investment. Establishing data governance frameworks before deployment addresses privacy, security, and compliance requirements proactively.

Measuring Success and Continuous Improvement

Defining key performance indicators enables objective assessment of multi-sensor fusion system effectiveness. Metrics might include detection accuracy, false alarm rates, response times, system uptime, and cost savings compared to previous approaches. Regular performance reviews identify optimization opportunities and guide system evolution.
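
Several of these metrics fall straight out of a confusion matrix of detections against ground truth. The sketch below computes precision, recall (detection rate), false alarm rate, and F1 from counts; the counts in the example are invented.

```python
def detection_kpis(tp, fp, fn, tn):
    """Common detection metrics from confusion-matrix counts (zero denominators give 0.0)."""
    precision = tp / (tp + fp) if tp + fp else 0.0        # share of alarms that were real
    recall = tp / (tp + fn) if tp + fn else 0.0           # share of real events detected
    false_alarm_rate = fp / (fp + tn) if fp + tn else 0.0  # share of non-events that raised alarms
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall,
            "false_alarm_rate": false_alarm_rate, "f1": f1}

kpis = detection_kpis(tp=90, fp=10, fn=30, tn=870)
print({k: round(v, 4) for k, v in kpis.items()})
# {'precision': 0.9, 'recall': 0.75, 'false_alarm_rate': 0.0114, 'f1': 0.8182}
```

Tracking precision and recall separately matters because a fusion rule that suppresses false alarms (for example, requiring several sensors to agree) usually trades away some recall.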

User feedback provides qualitative insights complementing quantitative metrics. Operators interacting daily with systems often identify usability improvements and additional capabilities that enhance practical value. Iterative refinement based on operational experience transforms initial deployments into mature, highly effective systems.

Embracing the Multi-Sensor Future 🚀

Multi-sensor fusion represents more than incremental improvement over single-sensor approaches—it fundamentally transforms our ability to understand and interact with the physical world. As technologies mature and costs decline, these systems will become ubiquitous across industries, enabling applications we have yet to imagine.

Organizations that master multi-sensor integration position themselves at the forefront of the data revolution, gaining competitive advantages through superior situational awareness and decision-making capabilities. The convergence of acoustics, visual sensing, and climate monitoring creates unprecedented opportunities for innovation and value creation.

The journey toward comprehensive sensor fusion requires technical expertise, strategic vision, and organizational commitment. Those who embrace this challenge will unlock data insights that redefine what is possible, transforming operations, enhancing safety, and creating sustainable competitive advantages in an increasingly complex world.

Toni Santos is a bioacoustic researcher and conservation technologist specializing in the study of animal communication systems, acoustic monitoring infrastructures, and the sonic landscapes embedded in natural ecosystems. Through an interdisciplinary and sensor-focused lens, Toni investigates how wildlife encodes behavior, territory, and survival into the acoustic world, across species, habitats, and conservation challenges.

His work is grounded in a fascination with animals not only as lifeforms, but as carriers of acoustic meaning. From endangered vocalizations to soundscape ecology and bioacoustic signal patterns, Toni uncovers the technological and analytical tools through which researchers preserve their understanding of the acoustic unknown.

With a background in applied bioacoustics and conservation monitoring, Toni blends signal analysis with field-based research to reveal how sounds are used to track presence, monitor populations, and decode ecological knowledge.

As the creative mind behind Nuvtrox, Toni curates indexed communication datasets, sensor-based monitoring studies, and acoustic interpretations that revive the deep ecological ties between fauna, soundscapes, and conservation science.

His work is a tribute to:

- The archived vocal diversity of Animal Communication Indexing
- The tracked movements of Applied Bioacoustics Tracking
- The ecological richness of Conservation Soundscapes
- The layered detection networks of Sensor-based Monitoring

Whether you're a bioacoustic analyst, conservation researcher, or curious explorer of acoustic ecology, Toni invites you to explore the hidden signals of wildlife communication: one call, one sensor, one soundscape at a time.