Sensor-triggered detection systems are powerful tools, but their effectiveness hinges on minimizing false positives while maintaining reliable threat identification across diverse operational environments.

🎯 Understanding the False Positive Challenge in Sensor Technology

False positives represent one of the most significant challenges in modern sensor-triggered detection systems. When a sensor incorrectly identifies a benign event as a threat or target condition, it creates what we call a false positive. These erroneous detections can overwhelm security teams, waste resources, and ultimately lead to alert fatigue where genuine threats might be overlooked.

The impact of false positives extends beyond mere inconvenience. In industrial settings, unnecessary shutdowns triggered by faulty sensor readings can cost thousands of dollars per hour. In security applications, frequent false alarms can desensitize personnel to legitimate threats. Understanding the root causes of these false detections is the first step toward developing effective mitigation strategies.

Modern sensor systems operate in increasingly complex environments where multiple variables can trigger unwanted responses. Environmental factors, equipment degradation, improper calibration, and interference from other systems all contribute to the false positive problem. Addressing these issues requires a multifaceted approach that combines technology, methodology, and ongoing optimization.

⚙️ Calibration Excellence: The Foundation of Accurate Detection

Proper sensor calibration serves as the cornerstone of any strategy to minimize false positives. Sensors that drift from their baseline specifications will inevitably produce unreliable readings. Establishing a rigorous calibration schedule based on manufacturer recommendations and operational experience ensures sensors maintain their intended accuracy levels.

The calibration process should be documented meticulously, creating a historical record that can reveal patterns and predict when sensors are likely to require adjustment. This data-driven approach transforms calibration from a reactive maintenance task into a proactive accuracy enhancement strategy.

Advanced calibration techniques now incorporate automated verification systems that continuously monitor sensor performance against known standards. These systems can detect subtle drifts in sensor behavior before they result in false positives, enabling preemptive corrections that maintain system integrity.
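As a rough illustration of automated verification, the sketch below compares readings taken against a known reference standard with that standard's nominal value. The function names, the tolerance, and the idea of averaging a short batch of check readings are all assumptions made for this example, not a description of any particular product.

```python
from statistics import mean

def drift_offset(check_readings, reference_value):
    """Average deviation of the sensor from a known reference standard."""
    return mean(check_readings) - reference_value

def drift_exceeds_tolerance(check_readings, reference_value, tolerance):
    """True when the average deviation is larger than the allowed tolerance."""
    return abs(drift_offset(check_readings, reference_value)) > tolerance
```

Logging the offset from each check builds the kind of historical record that makes slow drift visible before it starts producing false positives.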

Implementing Multi-Point Calibration Protocols

Single-point calibration may suffice for basic applications, but complex detection scenarios demand multi-point calibration strategies. By verifying sensor accuracy across the entire operational range, you create a comprehensive performance profile that identifies non-linear responses and edge cases where false positives are most likely to occur.

Environmental compensation should be integrated into calibration protocols. Temperature, humidity, pressure, and other ambient conditions can significantly affect sensor behavior. Modern calibration approaches account for these variables, either through mathematical compensation algorithms or by establishing separate calibration profiles for different environmental conditions.
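One simple way to apply a multi-point calibration is piecewise-linear interpolation between the calibration points. The sketch below assumes you have pairs of raw sensor readings and trusted reference values; `make_calibration` is a hypothetical helper, and it covers only the multi-point part, not environmental compensation.

```python
from bisect import bisect_right

def make_calibration(points):
    """Build a correction function from (raw_reading, reference_value) pairs."""
    pts = sorted(points)
    raws = [raw for raw, _ in pts]
    refs = [ref for _, ref in pts]
    if len(pts) < 2 or len(set(raws)) != len(raws):
        raise ValueError("need at least two points with distinct raw readings")

    def correct(raw):
        # Find the segment containing the reading; readings outside the
        # calibrated range are extrapolated from the nearest end segment.
        i = bisect_right(raws, raw) - 1
        i = max(0, min(i, len(raws) - 2))
        x0, x1 = raws[i], raws[i + 1]
        y0, y1 = refs[i], refs[i + 1]
        return y0 + (raw - x0) * (y1 - y0) / (x1 - x0)

    return correct
```

A separate correction function could be built for each environmental profile (for example, one per temperature band) and selected at run time.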

🔍 Threshold Optimization: Finding the Sweet Spot

Detection thresholds represent the dividing line between ignored signals and triggered alerts. Setting these thresholds too low generates excessive false positives, while setting them too high creates false negatives where genuine events go undetected. The optimization challenge lies in finding the balance point that maximizes true positive detection while minimizing false alarms.

Static thresholds often prove inadequate in dynamic environments where baseline conditions fluctuate throughout the day or across seasons. Adaptive threshold systems that adjust based on recent historical data provide superior performance by accounting for legitimate environmental variations that would otherwise trigger false positives.
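A minimal sketch of an adaptive threshold follows, assuming a scalar sensor reading where larger values are more suspicious. The class name, window size, and multiplier `k` are illustrative choices, not recommended settings.

```python
from collections import deque
from statistics import mean, stdev

class AdaptiveThreshold:
    """Threshold that tracks the mean and spread of recent quiet readings."""

    def __init__(self, window=100, k=4.0, min_samples=10):
        self.history = deque(maxlen=window)
        self.k = k
        self.min_samples = min_samples

    def update(self, reading):
        """Return True if the reading exceeds the current adaptive limit."""
        if len(self.history) < self.min_samples:
            # Warm-up: collect a baseline before judging anything.
            self.history.append(reading)
            return False
        limit = mean(self.history) + self.k * stdev(self.history)
        is_alert = reading > limit
        if not is_alert:
            # Only quiet readings update the baseline, so a real event
            # does not drag the threshold upward.
            self.history.append(reading)
        return is_alert
```

Excluding alerting readings from the baseline is a design choice: it keeps events from masking themselves, at the cost of adapting more slowly to a genuine, permanent shift in conditions.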

Statistical analysis of sensor data over extended periods reveals patterns that inform intelligent threshold setting. By analyzing the distribution of sensor readings during confirmed non-event periods, you can establish thresholds that fall outside normal variation while remaining sensitive to genuine anomalies.
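For example, a static threshold can be derived from readings collected during confirmed non-event periods. This sketch assumes the quiet readings are roughly bell-shaped, so that the mean plus a few standard deviations sits outside normal variation; for skewed data, a high percentile may be a better choice.

```python
from statistics import mean, stdev

def threshold_from_quiet_data(quiet_readings, k=3.0):
    """Place the threshold k sample standard deviations above the quiet mean."""
    return mean(quiet_readings) + k * stdev(quiet_readings)
```

With `k=3.0`, readings of `[10, 12, 11, 9, 13]` give a threshold of roughly 15.74.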

Dynamic Threshold Adjustment Strategies

Machine learning algorithms extend threshold optimization by continuously analyzing sensor patterns and automatically adjusting detection parameters. These systems learn what constitutes normal behavior for each deployment environment, establishing site-specific baselines that reduce false positives without compromising detection sensitivity.

Time-based threshold modulation recognizes that different times of day or operational cycles produce different baseline sensor readings. A motion detection system, for example, might employ more sensitive thresholds during overnight hours when activity is expected to be minimal, while raising thresholds during busy daytime periods to filter out routine movements.
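The motion detection example could be sketched as a simple schedule lookup. The hours and threshold values here are made up for illustration; a real deployment would derive them from site data.

```python
from datetime import time

def threshold_for(now, day_threshold=8.0, night_threshold=3.0,
                  day_start=time(7, 0), day_end=time(19, 0)):
    """Pick a higher threshold for busy daytime hours, a lower one at night."""
    is_daytime = day_start <= now < day_end
    return day_threshold if is_daytime else night_threshold
```

Calling `threshold_for(time(12, 0))` returns the daytime value, while `threshold_for(time(2, 30))` returns the more sensitive overnight value.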

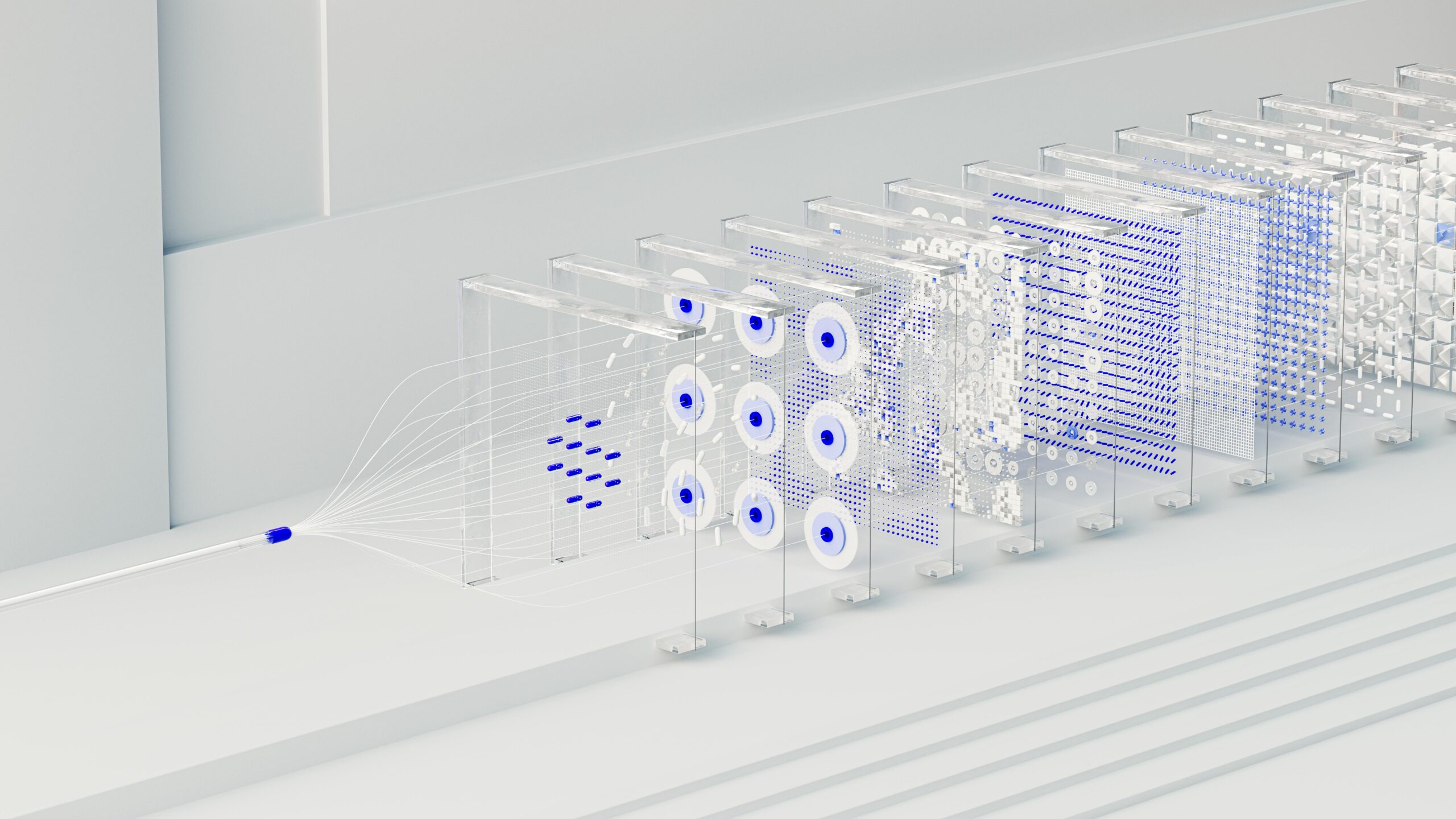

📊 Multi-Sensor Fusion: Confirming Before Alerting

Relying on a single sensor type creates vulnerability to the specific weaknesses and interference patterns that affect that technology. Multi-sensor fusion strategies require confirmation from multiple independent sensor modalities before triggering an alert, dramatically reducing false positives while increasing detection confidence.

A comprehensive detection system might combine passive infrared motion sensors with microwave radar and video analytics. Each technology has distinct characteristics and vulnerabilities, but their combination creates a robust detection framework where the weaknesses of one sensor are compensated by the strengths of others.

The fusion logic determines how multiple sensor inputs are combined to generate detection decisions. Simple AND logic requires all sensors to agree before triggering an alert, maximizing false positive reduction but potentially creating false negatives. More sophisticated weighted voting systems assign confidence scores to each sensor based on reliability and relevance to the specific detection scenario.
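The two fusion rules can be compared side by side in a few lines. The sensor names, weights, and decision level below are hypothetical; in practice the weights would come from each sensor's measured reliability.

```python
def and_fusion(triggers):
    """Alert only when every sensor has triggered."""
    return all(triggers.values())

def weighted_vote(triggers, weights, decision_level=0.6):
    """Alert when the triggered share of total weight reaches decision_level."""
    total = sum(weights[name] for name in triggers)
    score = sum(weights[name] for name, fired in triggers.items() if fired)
    return score / total >= decision_level
```

With weights of 2, 3, and 5 for PIR, radar, and video, a video-plus-radar trigger scores 0.8 and alerts, while a PIR-plus-radar trigger scores 0.5 and does not. AND fusion would reject both cases.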

Implementing Effective Sensor Correlation

Temporal correlation ensures that multiple sensor triggers occur within a defined time window, confirming that they’re responding to the same event rather than unrelated phenomena. Spatial correlation verifies that sensors in logical proximity are detecting consistent patterns, further validating the authenticity of the detection.
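A temporal correlation check might look like the sketch below, which assumes each sensor reports the timestamp (in seconds) of its most recent trigger. The window length and the minimum number of agreeing sensors are illustrative parameters.

```python
def correlated_in_time(trigger_times, window_s=2.0, min_sensors=2):
    """True if at least min_sensors triggers fall within window_s of each other.

    trigger_times maps a sensor name to the timestamp of its latest trigger.
    """
    times = sorted(trigger_times.values())
    # Slide over the sorted timestamps looking for a tight enough group.
    for i in range(len(times) - min_sensors + 1):
        if times[i + min_sensors - 1] - times[i] <= window_s:
            return True
    return False
```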

Contextual awareness enhances sensor fusion by incorporating additional environmental data. Weather conditions, scheduled activities, and system status information all provide context that helps distinguish genuine threats from benign events that might otherwise appear suspicious based solely on sensor readings.

🛠️ Environmental Factors and Interference Management

Environmental conditions represent a major source of false positives in sensor-triggered systems. Wind-blown vegetation, small animals, insects, weather phenomena, and changing light conditions can all trigger detection systems designed to identify human activity or equipment malfunctions.

Physical site preparation significantly impacts false positive rates. Trimming vegetation away from motion sensor fields of view, installing sensors at appropriate heights to minimize ground clutter, and positioning equipment to avoid direct sunlight or reflective surfaces all contribute to reduced false alarm rates.

Electromagnetic interference from nearby equipment, power lines, radio transmitters, and other electronic systems can corrupt sensor signals and create spurious detections. Proper shielding, grounding, and physical separation from interference sources protect signal integrity and reduce electronically induced false positives.

Weather-Related False Positive Mitigation

Weather conditions pose unique challenges for outdoor sensor deployments. Rain, snow, fog, and extreme temperatures all affect sensor performance in ways that can generate false positives. Advanced systems incorporate weather awareness, either through integration with meteorological data sources or dedicated weather sensors that modify detection algorithms based on current conditions.

Precipitation can trigger radar sensors, create thermal signatures that activate infrared detectors, and generate acoustic signals that register on vibration sensors. Weather-compensated detection algorithms recognize these characteristic patterns and suppress alerts that match weather-related signatures while remaining responsive to genuine detection events.

💻 Signal Processing and Filtering Techniques

Raw sensor signals often contain noise, artifacts, and transient anomalies that don’t represent genuine detection events. Advanced signal processing techniques extract meaningful patterns from noisy data, dramatically improving the signal-to-noise ratio and reducing false positive rates.

Digital filtering removes frequency components that fall outside the expected signature of target events. A vibration sensor monitoring for equipment failures, for example, might filter out low-frequency building movements and high-frequency electrical noise, focusing only on the frequency band where mechanical problems manifest.
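To make the vibration example concrete, here is a minimal band-pass sketch built from a first-order high-pass stage followed by a first-order low-pass stage. This is a deliberately simple filter with gentle roll-off, chosen so the example needs no external libraries; production systems typically use higher-order designs. The function name and cutoff values are assumptions.

```python
import math

def band_pass(samples, sample_rate_hz, low_cut_hz, high_cut_hz):
    """Attenuate content below low_cut_hz and above high_cut_hz."""
    dt = 1.0 / sample_rate_hz
    rc_hp = 1.0 / (2 * math.pi * low_cut_hz)
    rc_lp = 1.0 / (2 * math.pi * high_cut_hz)
    a_hp = rc_hp / (rc_hp + dt)   # high-pass smoothing coefficient
    a_lp = dt / (rc_lp + dt)      # low-pass smoothing coefficient
    out = []
    hp = 0.0
    lp = 0.0
    prev_x = samples[0] if samples else 0.0
    for x in samples:
        hp = a_hp * (hp + x - prev_x)   # removes slow drift and DC offset
        prev_x = x
        lp = lp + a_lp * (hp - lp)      # removes fast electrical noise
        out.append(lp)
    return out
```

A constant offset passes through as zero, slow building sway is strongly attenuated, and a tone inside the pass band keeps most of its amplitude.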

Pattern recognition algorithms analyze signal characteristics beyond simple threshold crossings. Shape analysis, duration filters, rise-time evaluation, and frequency content examination all help distinguish genuine detection events from the noise and interference that produce false positives.
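A duration filter is the simplest of these checks to sketch. The version below assumes evenly sampled data and reports only runs that stay above the threshold for a minimum number of consecutive samples; the function name and parameters are illustrative.

```python
def sustained_events(samples, threshold, min_duration):
    """Return start indices of runs above threshold lasting min_duration samples or more."""
    starts = []
    run_start = None
    for i, value in enumerate(samples):
        if value > threshold:
            if run_start is None:
                run_start = i
            # Report each run once, at the moment it becomes long enough.
            if i - run_start + 1 == min_duration:
                starts.append(run_start)
        else:
            run_start = None
    return starts
```

A single-sample spike is ignored, while a run of several consecutive high samples is reported once at its starting index.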

Advanced Digital Signal Processing Approaches

Wavelet analysis decomposes signals into time-frequency components, revealing patterns that aren’t apparent in traditional time-domain or frequency-domain analysis alone. This powerful technique excels at identifying transient events and distinguishing them from steady-state noise sources.

Adaptive filtering systems learn the characteristics of noise and interference specific to each installation environment. By modeling and predicting these unwanted signal components, adaptive filters can subtract them from sensor readings, leaving only the meaningful information that represents genuine detection events.
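One classic way to do this is a least-mean-squares (LMS) noise canceller, sketched below. It assumes a second reference input that picks up the interference but not the event of interest, for example a pickup near the interfering equipment. The tap count and step size `mu` are illustrative and would need tuning for stability on real data.

```python
def lms_cancel(primary, reference, taps=4, mu=0.05):
    """Subtract the part of primary that is predictable from reference."""
    weights = [0.0] * taps
    buffer = [0.0] * taps
    cleaned = []
    for d, x in zip(primary, reference):
        buffer = [x] + buffer[:-1]   # most recent reference samples
        noise_estimate = sum(w * b for w, b in zip(weights, buffer))
        error = d - noise_estimate   # what remains after cancellation
        # Nudge the weights to reduce the remaining correlated noise.
        weights = [w + 2 * mu * error * b for w, b in zip(weights, buffer)]
        cleaned.append(error)
    return cleaned
```

When the primary input contains only interference, the cleaned output decays toward zero as the weights converge; a genuine event that is uncorrelated with the reference remains in the output.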

📱 Machine Learning and Artificial Intelligence Integration

Machine learning has transformed false positive reduction by enabling systems to learn from experience rather than relying solely on predetermined rules. Neural networks trained on historical data can develop pattern recognition capabilities that exceed those of fixed, rule-based approaches.

Supervised learning requires labeled training data in which true positives and false positives are identified. The system learns the differences between these categories and builds classification models that generalize to new situations. Accuracy typically improves as more labeled examples are added, provided the model is periodically retrained and validated on data it has not seen.
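To show the supervised workflow in miniature, the sketch below uses a nearest-centroid classifier as a deliberately simple stand-in for a neural network. The feature choice (event duration and peak amplitude) and the label names are assumptions for this example.

```python
import math

def train_centroids(samples):
    """Average the feature vectors of each label. samples: list of (features, label)."""
    sums, counts = {}, {}
    for features, label in samples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in acc] for label, acc in sums.items()}

def classify(centroids, features):
    """Assign the label whose centroid is closest to the new event."""
    return min(centroids, key=lambda label: math.dist(centroids[label], features))
```

Trained on a handful of labeled events, `classify` assigns a new event to whichever class it most resembles. Features on very different scales should be normalized first, which this sketch omits.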

Unsupervised learning identifies patterns and anomalies without requiring pre-labeled data. These approaches excel at detecting unusual events that don’t fit established patterns, while clustering analysis groups similar events together, helping identify recurring false positive sources that can be systematically addressed.

Deep Learning for Complex Pattern Recognition

Convolutional neural networks have revolutionized video analytics by learning hierarchical features that distinguish genuine security threats from innocent activities. These systems can differentiate between a person approaching a protected area and windblown debris or animal movement, distinctions that previously generated high false positive rates.

Recurrent neural networks analyze temporal sequences, understanding how events evolve over time. This capability proves invaluable for distinguishing brief transient interference from sustained events that represent genuine detection conditions, reducing false positives caused by momentary signal glitches.

🔧 Maintenance and System Health Monitoring

Degraded or failing sensors generate increased false positive rates as their performance deteriorates. Proactive maintenance programs that identify and address equipment problems before they significantly impact accuracy are essential components of comprehensive false positive reduction strategies.

Continuous system health monitoring tracks key performance indicators that reveal developing problems. Changes in baseline noise levels, increased detection frequency, or unusual signal patterns all provide early warning of equipment degradation that could lead to false positive problems.
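One of these indicators, a rising baseline noise level, can be checked with a short comparison against commissioning data. The function names and the 1.5x limit are assumptions for illustration, and the sketch assumes the commissioning data is not perfectly constant (otherwise the ratio is undefined).

```python
from statistics import pstdev

def noise_floor_ratio(recent_quiet, commissioning_quiet):
    """Ratio of current quiet-period noise to the noise measured at commissioning."""
    return pstdev(recent_quiet) / pstdev(commissioning_quiet)

def needs_inspection(recent_quiet, commissioning_quiet, max_ratio=1.5):
    """Flag the sensor when its noise floor has grown beyond max_ratio."""
    return noise_floor_ratio(recent_quiet, commissioning_quiet) > max_ratio
```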

Predictive maintenance leverages historical performance data to forecast when sensors are likely to require service. Rather than relying on fixed maintenance schedules or reactive responses to failures, predictive approaches optimize maintenance timing to prevent false positive problems before they occur.

Establishing Effective Performance Metrics

Quantitative performance tracking provides objective assessment of false positive rates and detection accuracy. Metrics such as false alarm rate, detection probability, receiver operating characteristic curves, and mean time between false alarms enable data-driven optimization and demonstrate system performance improvements.
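Several of these metrics follow directly from a tally of reviewed events. The sketch below assumes each event has been classified after the fact and that the tally includes at least one real event and one non-event; the dictionary keys are names chosen for this example.

```python
def detection_metrics(true_pos, false_pos, false_neg, true_neg, hours_observed):
    """Summarize detection performance from reviewed event counts."""
    return {
        # Share of real events that were detected.
        "detection_probability": true_pos / (true_pos + false_neg),
        # Share of non-events that raised an alert.
        "false_positive_rate": false_pos / (false_pos + true_neg),
        # Average operating hours between false alarms.
        "mean_hours_between_false_alarms":
            hours_observed / false_pos if false_pos else float("inf"),
    }
```

For 45 detections, 5 false alarms, 5 misses, and 945 correctly ignored non-events over 100 hours, this gives a detection probability of 0.9 and one false alarm every 20 hours on average.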

Benchmarking against similar installations or industry standards identifies opportunities for improvement and validates that your system performs within acceptable parameters. Regular performance reviews ensure that false positive reduction remains an ongoing priority rather than a one-time optimization effort.

🎓 Training and Human Factors Considerations

Even the most sophisticated sensor systems require human intervention for final decision-making and response. Operator training significantly impacts false positive rates by ensuring personnel correctly interpret sensor outputs and understand system limitations.

Alert fatigue represents a serious concern when false positive rates remain high. Operators bombarded with frequent false alarms become desensitized and may dismiss or delay response to genuine threats. Minimizing false positives directly improves operator effectiveness and system reliability.

User feedback loops enable continuous improvement by capturing operator assessments of detection events. When operators classify alerts as true or false positives, this information feeds back into system optimization, refining algorithms and thresholds based on real-world operational experience.
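A feedback loop of this kind could be as simple as re-picking the threshold from operator-labeled alerts. The sketch below is one hypothetical approach: it scores each candidate threshold by counting false alarms and (more heavily weighted) missed events, then keeps the cheapest one. The `miss_cost` weighting is an assumption.

```python
def retune_threshold(labeled_alerts, candidates, miss_cost=5):
    """Pick the candidate threshold with the lowest weighted error count.

    labeled_alerts: list of (score, was_real) pairs from operator review.
    """
    def cost(threshold):
        false_alarms = sum(1 for s, real in labeled_alerts
                           if s >= threshold and not real)
        misses = sum(1 for s, real in labeled_alerts
                     if s < threshold and real)
        return false_alarms + miss_cost * misses

    return min(candidates, key=cost)
```

One caveat: operators only review events that crossed the current threshold, so this data cannot reveal misses below it. It is safer to use such feedback for raising a threshold than for lowering one.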

🚀 Emerging Technologies and Future Directions

Quantum sensors promise unprecedented sensitivity and precision, potentially revolutionizing detection capabilities while introducing new challenges for false positive management. These emerging technologies will require novel approaches to threshold optimization and signal processing.

Edge computing enables sophisticated processing directly at the sensor level rather than centralizing analysis. This distributed architecture reduces latency, enables more complex real-time algorithms, and allows multi-sensor fusion at the point of detection for improved false positive reduction.

Digital twin technology creates virtual models of sensor systems that enable testing and optimization in simulated environments before deploying changes to operational systems. This approach accelerates improvement cycles while minimizing risks associated with parameter adjustments.

✅ Implementing a Comprehensive False Positive Reduction Strategy

Effective false positive minimization requires integrated strategies that address all contributing factors simultaneously. Begin with fundamental elements like proper installation, calibration, and maintenance, then layer advanced techniques such as multi-sensor fusion and machine learning to achieve optimal performance.

Continuous monitoring and iterative improvement ensure that false positive reduction remains an ongoing priority. Regular performance assessments identify new optimization opportunities and verify that implemented strategies deliver sustained benefits rather than temporary improvements.

The investment in false positive reduction pays dividends through improved operational efficiency, enhanced security effectiveness, reduced operator fatigue, and increased confidence in automated detection systems. As sensor technologies continue to advance and deployment environments become more complex, the strategies outlined here provide a roadmap for maintaining accuracy and reliability in the face of evolving challenges.

Toni Santos is a bioacoustic researcher and conservation technologist specializing in the study of animal communication systems, acoustic monitoring infrastructures, and the sonic landscapes embedded in natural ecosystems. Through an interdisciplinary and sensor-focused lens, Toni investigates how wildlife encodes behavior, territory, and survival into the acoustic world across species, habitats, and conservation challenges.

His work is grounded in a fascination with animals not only as lifeforms, but as carriers of acoustic meaning. From endangered vocalizations to soundscape ecology and bioacoustic signal patterns, Toni uncovers the technological and analytical tools through which researchers preserve their understanding of the acoustic unknown.

With a background in applied bioacoustics and conservation monitoring, Toni blends signal analysis with field-based research to reveal how sounds are used to track presence, monitor populations, and decode ecological knowledge. As the creative mind behind Nuvtrox, Toni curates indexed communication datasets, sensor-based monitoring studies, and acoustic interpretations that revive the deep ecological ties between fauna, soundscapes, and conservation science.

His work is a tribute to the archived vocal diversity of Animal Communication Indexing; the tracked movements of Applied Bioacoustics Tracking; the ecological richness of Conservation Soundscapes; and the layered detection networks of Sensor-based Monitoring.

Whether you're a bioacoustic analyst, conservation researcher, or curious explorer of acoustic ecology, Toni invites you to explore the hidden signals of wildlife communication: one call, one sensor, one soundscape at a time.